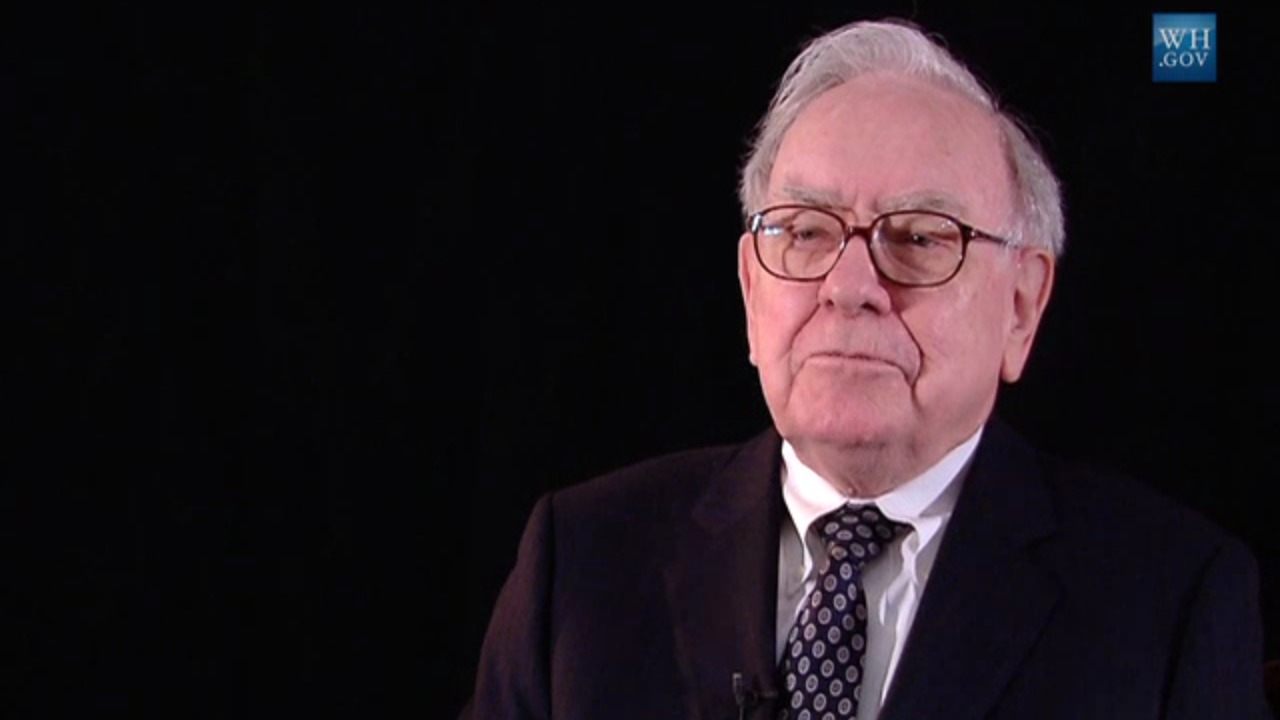

Warren Buffett has spent a lifetime warning investors away from fads and toward patient, rational decision-making. When he says artificial intelligence carries dangers on the scale of nuclear weapons, he is not talking about science fiction; he is flagging a real-world risk that he believes markets and policymakers are still underpricing. His description of AI as a genie that cannot be forced back into the bottle captures both the inevitability of the technology and the difficulty of containing its worst uses.

Buffett’s alarm is not a rejection of innovation; it is a judgment about power. Nuclear weapons changed geopolitics by giving a small number of actors the ability to cause catastrophic harm at unprecedented speed. In his view, advanced AI systems are beginning to concentrate a similar kind of leverage in the hands of whoever controls the models, the data, and the compute. That should worry anyone who cares about financial stability, national security, or basic trust in information.

Why Buffett sees “enormous potential for harm” in AI

When Warren Buffett talks about risk, he usually speaks in probabilities and trade-offs, not absolutes. That is what makes his description of artificial intelligence as having “enormous potential for harm” so striking. At a recent Berkshire Hathaway shareholder meeting, he framed AI as a transformative force that could reshape how people handle their money and go about their lives. In detailed remarks on the technology, he also stressed that the same capabilities that make AI powerful for productivity can make it powerful for abuse.

Buffett has never been quick to embrace new technology, yet he is not dismissing AI as a passing craze. Instead, he is warning that the speed and scale at which AI operates multiply traditional risks. In earlier comments, he likened the technology to a genie that, once released, cannot be put back, underscoring that the world will have to live with the consequences of systems that can generate convincing text, images, and decisions at industrial scale. He sharpened that comparison when he said AI’s danger rivals that of nuclear weapons, as separate analysis of his views has noted.

The nuclear weapons analogy and the “genie” problem

Buffett’s nuclear analogy is not casual. He has described the invention of nuclear weapons as a moment when humanity created a tool so destructive that its mere existence changed behavior, even when it was never used. In his view, advanced AI is “somewhat similar”: a technology that, once developed, will be pursued by multiple actors regardless of regulation or ethics, because the strategic and economic incentives are too strong. In televised comments, he explained that he sees AI as a genie already “part the way out of the bottle.”

That metaphor matters because it shifts the conversation from whether AI should exist to how societies manage a technology that is already loose in the world. Buffett has said that AI is “going to be done by somebody,” suggesting that even if one country or company slowed down, others would push ahead. In interviews about his warning, he has emphasized that this dynamic could leave people wishing the technology had never been developed, even as they continue to rely on it. Reporting by Jake Conley on his comparison of AI risks to nuclear weapons quotes him saying the genie is out of the bottle and that investors must prepare for the possibility that their own AI investments could turn against them.

Scams, deepfakes, and the erosion of trust

For all the talk of existential risk, Buffett’s most concrete worries about AI start with fraud. He has pointed to the way generative systems can mimic voices and faces so convincingly that ordinary people may not be able to tell the difference between a real phone call from a family member and a synthetic one. In broadcast interviews, he has warned that AI dramatically increases the “potential” for scams, especially those that target vulnerable individuals or exploit trusted brands, and has described how easily criminals could weaponize the technology.

Buffett’s fear is not just about individual losses; it is about systemic trust. If people cannot rely on what they see and hear, markets that depend on reputation and verified information become more fragile. He has described scenarios in which AI-generated messages could impersonate executives, regulators, or even himself, tricking investors into moving money or making trades based on fabricated instructions. Local coverage has underscored his warning that AI-enabled scams could spread faster and more cheaply than anything seen before. One segment carried by regional television quoted him on the “enormous potential” for fraud using AI and noted his comparison between the technology and nuclear weapons.

What Buffett’s track record tells investors about AI risk

Buffett’s skepticism toward AI fits a long pattern of caution around complex financial innovations. When speculative assets like cryptocurrencies surged, he declined to participate. When a cryptocurrency index fund promoter claimed its product would outperform his portfolio, Buffett’s office did not respond to CNBC’s request for comment. His silence in that case was consistent with a broader philosophy: if he does not understand a product’s long-term economics or risk profile, he prefers to stay away.

That history matters because it shows how he translates technological uncertainty into investment decisions. During the financial crisis, Buffett used Berkshire Hathaway’s balance sheet to stabilize corporate America. Later, when the company shifted to virtual shareholder meetings, Buffett took questions from shareholders and a few reporters in a digital format livestreamed exclusively on Yahoo Finance, instead of addressing a packed arena. In both cases, he adapted to new conditions while keeping his core risk principles intact, a pattern that now shapes how he talks about AI: embrace what is useful, but never forget how quickly complexity can turn into fragility.

Regulators, executives, and the Buffett standard for AI

Buffett’s warnings are already influencing how some executives and policymakers frame AI oversight. When he compares the technology’s risk profile to nuclear weapons, he is effectively arguing that ordinary market discipline is not enough and that AI needs a layer of governance that treats it as a strategic asset with national and global implications. In recent reporting on his nuclear comparison, he has been quoted stressing that the genie is out and that investors must think hard about who controls the models and what happens if those systems behave in ways their creators did not anticipate.

For corporate boards, the Buffett standard on AI might look like this: treat every deployment as if it could be misused at scale, assume that adversaries will eventually gain access to similar tools, and build controls that do not depend on perfect behavior from every user. For regulators, his analogy to nuclear weapons suggests that voluntary codes and industry self-policing will not be enough, especially when AI systems begin to intersect with critical infrastructure, defense, or the core plumbing of financial markets. As markets digest his latest warnings, and as reporters like Jake Conley continue to track how Berkshire and other major firms position themselves, I see Buffett’s message as a simple one wrapped in stark language. AI’s upside is real, but so is the downside, and pretending otherwise is not prudence but denial.
