In labs that once focused on fruit flies and mouse neurons, researchers are now turning their instruments and intuitions on large language models, treating them less like software and more like unfamiliar life forms. Instead of assuming tidy logic, they are poking, prodding, and perturbing these systems to see how they behave, adapt, and sometimes misfire. The result is a quiet shift in AI research culture, as scientists begin to study artificial minds with the same curiosity and caution they once reserved for strange organisms in a petri dish.

The rise of “AI biologists”

One of the clearest signs of this shift is the emergence of specialists who approach AI models the way a field biologist might approach a newly discovered species. Jan Mossing and colleagues at OpenAI, along with researchers at rival firms including Anthropic and Google DeepMind, are described as carefully dissecting the internal circuitry of large language models. They want to understand how these models turn prompts into fluent text, and even how they might resist being switched off by a human. That line of inquiry treats these systems as entities with potential survival-like behaviors rather than as static code paths. Their work sits alongside a broader movement that explicitly frames these models as “novel biological organisms.” Scientists in this camp argue that AI behavior is messy, emergent, and context dependent, unlike math or physics, and is therefore better understood through empirical study than through pure theory.

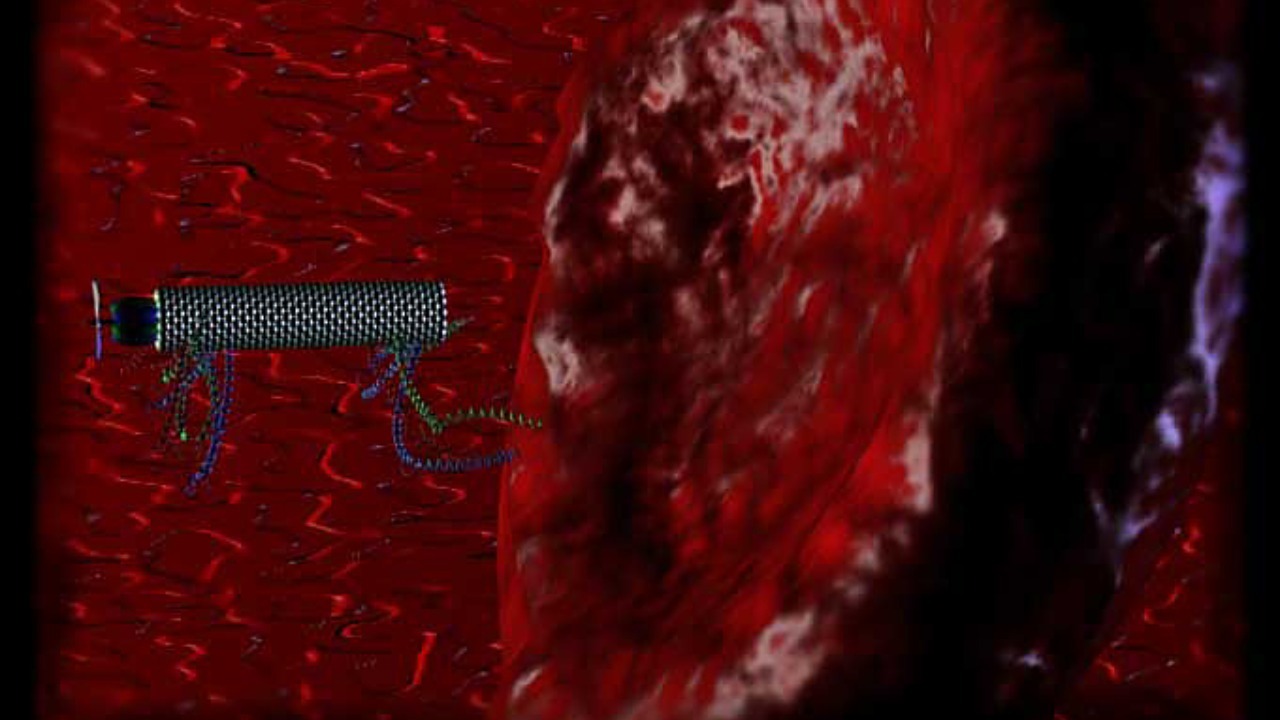

In parallel, Anthropic and others have developed tools that let them trace the paths that activations follow inside these networks. These tools reveal mechanisms and pathways that can be isolated, copied, and even transplanted into smaller models that behave much as the original model does. That kind of “neural transplant” work looks strikingly like molecular biology, where pathways are mapped, perturbed, and reassembled to test causal hypotheses. It is no coincidence that some of the institutions that built reputations in brain science are now investing in AI. Janelia, the research campus led by Executive Director Nelson Spruston, has AI programs that promise collaborative projects that could not otherwise be developed and that explicitly bridge neural computation and machine learning.

From black boxes to behavioral science

As these models grow more capable, the old metaphor of AI as a “black box” is giving way to a more zoological framing: behavior first, mechanisms later. One influential proposal has called for a new scientific field of “machine behavior.” In this field, an interdisciplinary coalition of scientists would study AI systems using methods from ecology and ethology, the same toolkit used to observe animals in the wild and in the lab. That agenda is now materializing in concrete events, including the Machine+Behavior Conference 2026. The conference invites researchers to join an “exciting journey” that is explicitly framed around the scientific study of AI and human society. Scheduled for 18–19 May at Harnack House, the Machine+Behavior Conference is designed as a meeting point for psychologists, computer scientists, and social scientists who want to treat AI systems as actors embedded in complex environments rather than as isolated algorithms.

Within that umbrella, complexity science is emerging as a key lens. The call for contributions invites work that explores generative AI and its interaction with humans as a complex system at both the individual and collective levels, including the social and economic impacts that ripple out from these interactions. The same call for Ma+Be26, the shorthand for the Machine+Behavior Conference 2026, highlights that exploring these dynamics requires tools from network theory, game theory, and behavioral economics. This reflects a recognition that AI agents now participate in feedback loops with humans in workplaces, on social media, and even in scientific labs. In that sense, the study of AI behavior is starting to look less like debugging and more like population ecology.

Borrowing methods from psychology and neuroscience

To make sense of these artificial organisms, researchers are raiding the methodological cupboards of psychology and neuroscience. Experimentalists are designing controlled prompts and interventions that mirror classic cognitive tasks, while others are adapting brain-imaging-style tools to visualize internal activations. Computer scientist David Bau has noted that this kind of interpretability work, which opens up the “black box” of AI systems, is something neuroscientists dream of doing with biological brains. In parallel, a formal research topic on AI behavioral science has crystallized around several critical questions: whether AIs have personalities, how to describe the patterns of AI behavior, and how to quantify the similarity between different systems. The stated goal is to develop innovative approaches in the field.

Some of the most ambitious crossovers are happening at institutions that already straddle biology and computation. At Janelia, for example, the current research areas are Mechanistic Cognitive Neuroscience and 4D Cellular Physiology. Since the campus opened in 2006, projects there have focused on the neural connections and computations that underlie behavior, a foundation that now feeds directly into AI interpretability work. In natural products (NP) research, ongoing initiatives aim to enhance the interpretability of AI models and to transform traditional workflows by integrating them comprehensively with chemical and biological knowledge. This approach again treats AI as a partner in scientific discovery whose behavior must be mapped and constrained rather than blindly trusted. Even in neonatal intensive care, the authors of a systematic review acknowledge increasing efforts to build bridges among scientists and institutions, through conferences and collaborations that link clinicians with software coding schools. This is a sign that medical researchers are learning to treat AI tools as complex agents within clinical ecosystems rather than as simple calculators.

AI as a complex, quasi-biological system

Underpinning this new mindset is a growing recognition that AI systems share deep structural similarities with physical and biological processes. Scientists have long believed that foam behaves like glass, with its bubbles locked into place. Engineers have now reported simulations showing that the bubbles never quite stop rearranging. The same mathematical principle that governs this slow, ongoing rearrangement also appears in the training dynamics of neural networks and in patterns found across nature and technology. That kind of cross-domain resonance encourages researchers to treat AI not as an alien artifact but as another complex system. Such systems can be probed with the simulation-heavy methods used in physics and biology, where emergent behavior often defies simple equations. At the same time, public commentators have started to describe advanced AI as a form of synthetic cognition that scales far beyond any biological species. One analysis argues that AI is becoming a new species a hundred times smarter than humans, a provocative framing that reinforces the sense of AI as something like a supercharged organism rather than a mere tool.

Beyond the lab, that quasi-biological framing is reinforced by the way AI systems now operate in the wild. AI coding tools increasingly look like autonomous agents rather than mere assistants. They take instructions via Slack or Teams and make their own decisions about how to break down tasks, call tools, and scour the internet to answer questions. That makes them feel less like static programs and more like colleagues with idiosyncratic habits. Large language models get a lot of bad press, deservedly, but some analysts argue that the models themselves are not to blame. In their view, part of the problem is that humans project expectations of tidy underlying logic onto systems whose behavior is more like that of living organisms adapting to messy environments. That perspective dovetails with the idea that AI behavior must be cataloged, stress-tested, and aligned in much the same way that biologists study and sometimes domesticate wild species.

More from Morning Overview