Quantum physicists have found a way to let a single qubit experience several different “journeys” through time at once, effectively bending a classic limit on how fast quantum systems are allowed to evolve. Instead of marching along one fixed timeline, the qubit’s evolution is split into a superposition of time paths, then recombined to squeeze out extra performance that standard theory once treated as off limits. The result is a glimpse of quantum hardware that does not just run more operations per second, but uses time itself as a resource.

That shift arrives as quantum engineers are already scaling machines to thousands of qubits and pushing them to operate at room temperature, while theorists refine the fundamental “speed limits” that govern how quickly any quantum state can change. Taken together, these advances suggest that the next generation of quantum computers will not simply be faster versions of today’s prototypes, but qualitatively different devices that exploit superposition, interference and tunneling in ways classical intuition struggles to follow.

What physicists mean by a quantum “speed limit”

Before a qubit can bend the rules, I need to be clear about what those rules are. In quantum theory, the so‑called quantum speed limit is not a marketing slogan; it is a mathematical bound on how quickly a system can evolve from one state to another given its energy. This idea is closely tied to Bremermann’s limit, which sets an upper bound on the rate at which information can be processed in any physical system, and it translates in practice into a maximum clock rate for quantum logic operations.

In more concrete terms, the speed limit shows up as a cap on how fast a qubit can be flipped or rotated while still remaining coherent and controllable. Earlier work on trapped ions and other platforms treated this as a hard ceiling, with the rate of flipping effectively defining the quantum processor’s clock speed and constraining how quickly a quantum computer can calculate in general, as described in detailed analyses of these bounds. The new time‑superposition experiments do not violate the underlying equations, but they do show that clever control over a qubit’s trajectory through time can make that ceiling far more flexible than it once appeared.
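To make the bound concrete, here is a minimal Python sketch of the two textbook forms of the quantum speed limit, the Mandelstam-Tamm bound (set by the energy spread) and the Margolus-Levitin bound (set by the mean energy above the ground state), compared with the time a resonant drive needs to flip a qubit. The 10 MHz drive strength and the function names are my own illustrative assumptions, not values from the experiments discussed here.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant in joule-seconds


def mandelstam_tamm_time(energy_spread):
    """Minimum time to reach an orthogonal state, given the energy spread (J)."""
    return math.pi * HBAR / (2.0 * energy_spread)


def margolus_levitin_time(mean_energy_above_ground):
    """Minimum time to reach an orthogonal state, given the mean energy above ground (J)."""
    return math.pi * HBAR / (2.0 * mean_energy_above_ground)


def rabi_flip_time(omega):
    """Duration of a full 0 -> 1 flip at angular Rabi frequency omega (rad/s)."""
    return math.pi / omega


# Hypothetical drive strength: a 10 MHz Rabi frequency (an assumption).
omega = 2.0 * math.pi * 10e6

# For H = (hbar * omega / 2) * sigma_x acting on |0>, the energy spread and
# the mean energy above the ground state are both hbar * omega / 2.
energy = HBAR * omega / 2.0

print(f"flip time:             {rabi_flip_time(omega):.3e} s")
print(f"Mandelstam-Tamm bound: {mandelstam_tamm_time(energy):.3e} s")
print(f"Margolus-Levitin bound: {margolus_levitin_time(energy):.3e} s")
```

In this idealized case the resonant flip sits exactly on both bounds, which is why the flip rate can be read as the processor’s clock speed: for a fixed drive energy, no single‑path evolution gets to the opposite state sooner.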

How a qubit travels along multiple time paths at once

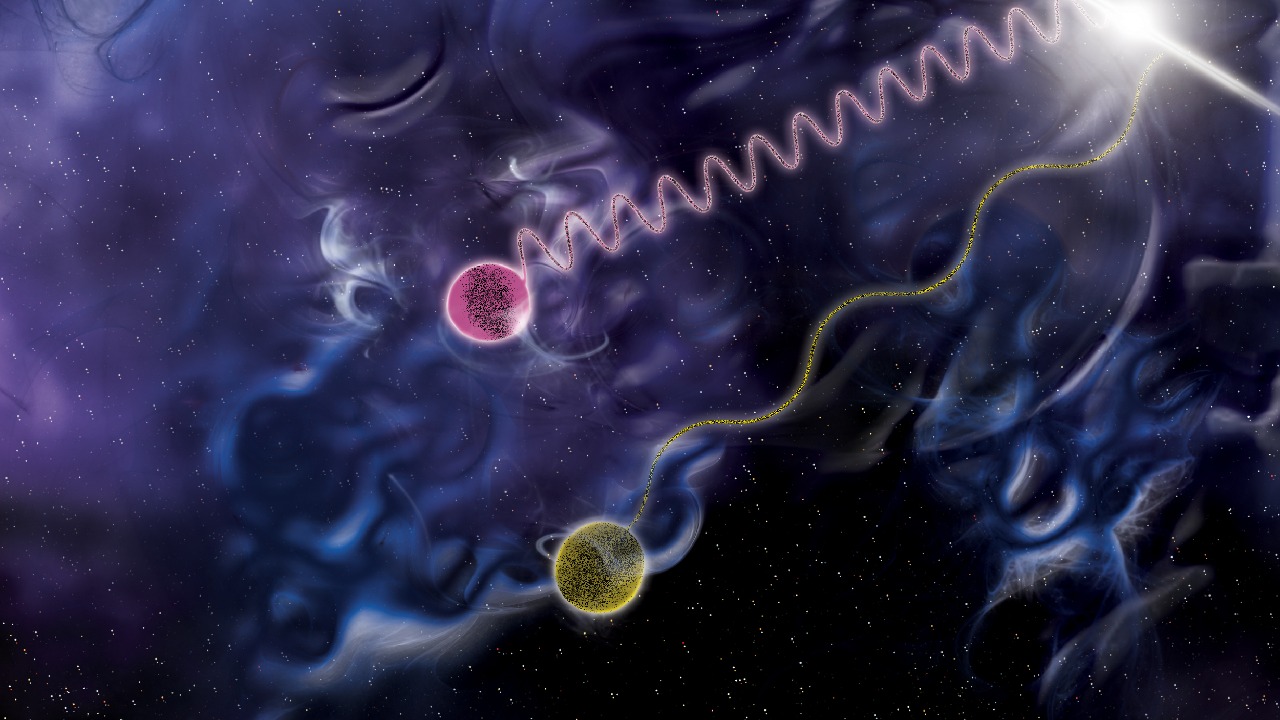

The key trick in the latest work is to let a qubit evolve under a superposition of different time histories instead of a single, continuous one. In the reported experiment, researchers prepared a quantum system so that it effectively experienced several distinct durations of evolution in parallel, then interfered those histories to amplify the outcome that corresponded to a faster‑than‑usual transformation. According to the report, this approach allowed the qubit to behave as if it had evolved for up to five times longer than usual, without actually spending that much physical time exposed to noise.

What makes this so striking is that the qubit is not simply being driven harder or clocked faster in the classical sense. Instead, the system is placed into a carefully engineered superposition where one branch of its wavefunction evolves for a short time, another for a longer time, and so on, with all of those branches coexisting until they are recombined. When those time paths interfere, the experimenters can arrange the phases so that the desired final state is enhanced and the unwanted ones cancel out, effectively letting the qubit “sample” multiple temporal routes and keep only the most useful result.
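A toy model helps me see how recombining time branches can yield more evolution than any single branch contains. The Python sketch below is my own illustration, not the protocol used in the experiment: it assumes a control system that labels two rotation durations, prepares that control in an equal superposition, and then keeps only the runs where the control is found in a chosen final state. The angles, the preparation and postselection amplitudes, and all function names are hypothetical.

```python
import math


def evolve_from_zero(theta):
    """Amplitudes (a0, a1) of exp(-i*theta*sigma_x/2) applied to |0>."""
    return complex(math.cos(theta / 2.0)), -1j * math.sin(theta / 2.0)


def postselected_state(thetas, prep, post):
    """Interfere branches that rotated by different angles.

    prep: control amplitudes before the branches run (normalized here).
    post: control state that is kept at the end (normalized here).
    Returns the normalized qubit state and the probability of keeping a run.
    """
    prep_norm = math.sqrt(sum(abs(c) ** 2 for c in prep))
    post_norm = math.sqrt(sum(abs(d) ** 2 for d in post))
    a0, a1 = 0j, 0j
    for theta, c, d in zip(thetas, prep, post):
        weight = (complex(d) / post_norm).conjugate() * (complex(c) / prep_norm)
        b0, b1 = evolve_from_zero(theta)
        a0 += weight * b0
        a1 += weight * b1
    probability = abs(a0) ** 2 + abs(a1) ** 2
    norm = math.sqrt(probability)
    return (a0 / norm, a1 / norm), probability


def effective_angle(state):
    """Rotation angle that would produce the same populations from |0>."""
    a0, a1 = state
    return 2.0 * math.atan2(abs(a1), abs(a0))


# Two short branches (hypothetical angles, in radians).
thetas = [0.2, 0.4]
state, probability = postselected_state(thetas, prep=[1.0, 1.0], post=[-0.8, 1.0])
gain = effective_angle(state) / max(thetas)
print(f"effective angle gain: {gain:.2f}, success probability: {probability:.4f}")
```

With these made‑up numbers the recombined qubit ends up rotated by between two and three times the angle of its longest branch, but only on the order of one percent of runs survive the postselection. That trade between amplification and success rate is a feature of this toy model; I am not claiming it matches the reported five‑fold figure or the experiment’s actual overhead.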

Superposition as the engine of quantum speed

This ability to explore many time paths at once is a direct extension of the same superposition that already lets qubits represent multiple logical states simultaneously. In a classical machine, a bit held by a transistor in a 2024 MacBook Pro or a 2025 gaming PC is either 0 or 1 at any instant, but a qubit can be in a blend of 0 and 1, with probabilities that only crystallize when it is measured. That property is what allows quantum algorithms to evaluate many possibilities in parallel and then use interference to steer toward the right answer, as explained in depth in discussions of inherent parallelism in quantum computing.

When I look at the time‑superposition experiment through that lens, it becomes less like a loophole and more like a natural next step. Instead of using superposition only across logical states, the researchers are using it across durations, letting the qubit inhabit multiple “how long have I been evolving?” answers at once. That same logic underpins broader explanations of how quantum machines can tackle certain problems at speeds unattainable by classical systems, with quantum superposition singled out as the principle that lets qubits explore many computational pathways in parallel.

Interference, not brute force, bends the limit

Crucially, the experiment does not rely on brute force to push the qubit faster; it relies on interference to make some time paths count more than others. In quantum mechanics, interference is the process by which overlapping wavefunctions reinforce or cancel each other, and it is the same phenomenon that produces the bright and dark fringes in a double‑slit experiment. As one researcher put it, interference is a way to leverage the wavy character of a system to highlight differences between waves, a description that captures why it is so central to quantum algorithms and appears prominently in interference‑focused discussions of quantum speed limits.

By arranging the phases of the different time branches just right, the team can make the branch that corresponds to a more advanced evolution interfere constructively, while branches that lag behind interfere destructively and effectively vanish from the final measurement statistics. From my perspective, that is the real conceptual leap: the speed limit is not being smashed by pumping more energy into the qubit, but by choreographing its wavefunction so that the most “efficient” temporal trajectory dominates. It is a reminder that in quantum computing, performance gains often come from smarter use of interference rather than from raw hardware muscle.
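The role of phase is easy to check numerically. This short Python sketch, a generic two‑path interference calculation rather than anything specific to the experiment, adds two equal‑weight amplitudes with an adjustable relative phase and reports how often the combined outcome is detected; the function name is my own.

```python
import cmath
import math


def detection_probability(relative_phase):
    """Probability of the combined outcome for two equal-weight paths.

    Each path contributes amplitude 1/2; the second carries an extra
    phase factor exp(i * relative_phase) before the two are added.
    """
    amplitude = 0.5 * (1.0 + cmath.exp(1j * relative_phase))
    return abs(amplitude) ** 2


for label, phase in [("in phase", 0.0), ("quarter turn", math.pi / 2), ("out of phase", math.pi)]:
    print(f"{label:>12}: {detection_probability(phase):.3f}")
```

In phase, the two paths reinforce and the outcome is certain; half a cycle apart, they cancel and it never appears. Nothing about the energy of either path changed, only their relative phase, which is the sense in which the gain comes from choreography rather than muscle.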

Scaling up: from single qubits to 6,000‑qubit machines

Any claim that a single qubit can bend a speed limit naturally raises the question of what happens when thousands of qubits are involved. Earlier this year, researchers reported a record‑setting system with 6,000 qubits built from paired neutral atoms, held in a programmable array that operated at room temperature instead of the cryogenic conditions that dominate today’s superconducting devices. In the experiment, they used those paired neutral atoms as the quantum bits and extended coherence times from a few seconds to 12.6 seconds, a leap that, according to the report, represents a significant step toward practical quantum processors that do not need temperatures close to absolute zero.

Longer coherence times and higher qubit counts are exactly what a time‑superposition technique would need in order to matter for real workloads. If a single qubit can effectively experience five times more useful evolution without five times more exposure to noise, then a 6,000‑qubit array with multi‑second coherence could, in principle, run deeper circuits or more complex algorithms within the same physical time budget. The challenge, as I see it, is that coordinating interference across thousands of time‑branched qubits will demand exquisite control electronics and calibration, but the hardware trend lines suggest that such control is moving from fantasy toward an engineering problem.
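As a back‑of‑the‑envelope check on that time budget, the Python sketch below counts how many sequential gate layers fit inside one coherence window, with and without a five‑fold gain in useful evolution. The 12.6‑second coherence time and the factor of five come from the reports above; the 1‑microsecond gate duration is purely my assumption, and the estimate ignores gate errors, measurement, and any overhead from preparing and recombining time branches.

```python
def max_circuit_depth(coherence_time_s, gate_time_s, evolution_gain=1.0):
    """Upper bound on sequential gate layers that fit in one coherence window.

    evolution_gain scales the useful evolution per unit of physical time;
    1.0 means ordinary single-path evolution.
    """
    return round(coherence_time_s * evolution_gain / gate_time_s)


COHERENCE_TIME_S = 12.6  # reported coherence time
GATE_TIME_S = 1e-6       # hypothetical gate duration (assumption)

baseline = max_circuit_depth(COHERENCE_TIME_S, GATE_TIME_S)
boosted = max_circuit_depth(COHERENCE_TIME_S, GATE_TIME_S, evolution_gain=5.0)
print(f"baseline layers: {baseline:,}")
print(f"with 5x gain:    {boosted:,}")
```

The absolute numbers mean little, since real circuits fail long before the coherence window closes, but the ratio is the point: whatever the true depth budget is, a technique that delivers more evolution per second of noise exposure multiplies it.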

Why classical speed analogies only go so far

It is tempting to describe these advances in terms of clock speeds and gigahertz, but that analogy quickly breaks down. In a classical CPU, the clock simply ticks, and each tick advances the machine by one step in a deterministic sequence of instructions. Quantum processors, by contrast, use gates that rotate qubits through the Bloch sphere, and the effective “speed” of a computation depends not just on how fast those gates are applied, but on how cleverly superposition and interference are arranged. As one widely shared explainer on quantum computing puts it, a quantum computer with enough qubits and the right algorithm can process information on a fundamentally different level from conventional computers, because it manipulates probability amplitudes rather than just bits.

That is why I find the time‑superposition result more interesting as a conceptual shift than as a raw benchmark. It suggests that the relevant question is not “how many operations per second can this chip perform?” but “how much useful evolution can this quantum state undergo before decoherence ruins the computation?” Techniques that stretch that useful evolution, whether by better materials, smarter error correction or superposed time paths, all attack the same bottleneck from different angles. The classical metaphor of a speedometer simply does not capture that multidimensional race.

Macroscopic tunneling and new quantum pathways

The time‑bending experiment also fits into a broader pattern of physicists finding new ways to exploit quantum pathways that classical physics would forbid. The 2025 Nobel recognition for work on macroscopic quantum tunneling highlighted how quantum systems can transition between states by effectively “tunneling” through energy barriers instead of climbing over them. The very mechanism of quantum tunneling that the laureates demonstrated now allows qubits to transition between states along nonclassical pathways, enabling computations impossible for classical systems, as detailed in analyses of macroscopic tunneling in quantum devices.
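For a sense of scale, the standard textbook estimate for a single particle tunneling through a wide rectangular barrier is T ≈ exp(−2κL), with κ = sqrt(2m(V − E))/ħ. The Python sketch below evaluates it for an electron; the 1 eV barrier height and the barrier widths are my own illustrative choices. This is the single‑particle picture only, not a model of the macroscopic, many‑particle tunneling that the prize recognized.

```python
import math

HBAR = 1.054571817e-34          # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837e-31   # kg
EV = 1.602176634e-19            # joules per electronvolt


def tunneling_probability(barrier_minus_energy_ev, width_m, mass_kg=ELECTRON_MASS):
    """Approximate transmission through a wide rectangular barrier.

    barrier_minus_energy_ev: barrier height minus particle energy, in eV.
    width_m: barrier width in meters.
    """
    kappa = math.sqrt(2.0 * mass_kg * barrier_minus_energy_ev * EV) / HBAR
    return math.exp(-2.0 * kappa * width_m)


# Hypothetical barrier: 1 eV above the electron's energy.
for width_nm in (0.5, 1.0, 2.0):
    t = tunneling_probability(1.0, width_nm * 1e-9)
    print(f"width {width_nm:.1f} nm -> transmission {t:.2e}")
```

The exponential dependence on width is what makes tunneling both fragile and useful: a barrier that is classically impassable at any thickness becomes a tunable channel once it is engineered at the nanometer scale.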

When I put tunneling and time superposition side by side, a common theme emerges: quantum engineers are no longer content to accept the most straightforward route from input to output. Instead, they are deliberately steering systems through exotic regions of Hilbert space, whether that means slipping through barriers or weaving together multiple temporal branches, to reach final states that would be inaccessible or prohibitively slow in a classical framework. That mindset is likely to shape the next wave of algorithm design, where the focus shifts from mimicking classical logic to fully embracing the strange geometry of quantum state space.

Quantum speed limits in a curved spacetime

There is an even more speculative frontier where these ideas intersect with gravity. Recent theoretical work on quantum networks has explored what happens when atomic clocks are placed in superpositions across different locations, effectively letting them sample different gravitational potentials at once. The study showed that if atomic clocks are placed in superpositions across different locations using quantum networks, their ticking can reveal how quantum effects and the curvature of spacetime interact, a result that has been highlighted in research on how atomic clocks might one day help bend space‑time for communication.
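To get a feel for the size of the effect, the weak‑field approximation near Earth’s surface says that two clocks separated in height by Δh tick at rates that differ by a fraction of about gΔh/c². The Python sketch below applies that formula to the branches of a hypothetical height‑superposed clock; the separations and the one‑day averaging window are my own assumptions, not parameters from the study.

```python
G_EARTH = 9.80665            # standard gravity, m/s^2
C_LIGHT = 299_792_458.0      # speed of light, m/s
SECONDS_PER_DAY = 86_400.0


def fractional_rate_shift(height_difference_m):
    """Fractional tick-rate difference between two heights (weak-field approximation)."""
    return G_EARTH * height_difference_m / C_LIGHT ** 2


def proper_time_difference(height_difference_m, duration_s):
    """Elapsed-time difference accumulated between the two heights over duration_s."""
    return fractional_rate_shift(height_difference_m) * duration_s


for height_m in (0.01, 1.0, 100.0):
    shift = fractional_rate_shift(height_m)
    lag = proper_time_difference(height_m, SECONDS_PER_DAY)
    print(f"{height_m:>6.2f} m: rate shift {shift:.2e}, lag per day {lag:.2e} s")
```

A one‑meter separation shifts the tick rate by about one part in 10^16, which is why only the most precise clocks can register it, and why putting such a clock in a superposition of heights is a natural probe of how quantum branches accumulate different amounts of time.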

If time itself becomes a quantum resource that can be superposed and interfered with, then the notion of a universal quantum speed limit may need to be refined to account for curved spacetime and relativistic effects. I do not mean that the basic bounds vanish, but that the effective rate at which a quantum computer can process information could depend on how its qubits are distributed in a gravitational field and how their time paths are entangled. The time‑superposition experiments on single qubits are still far from that regime, yet they hint at a future in which quantum information science and general relativity are no longer separate conversations.

Two speed limits, many levers

Even within flat spacetime, theorists have argued that quantum computers face not one but two distinct speed limits. One is the familiar bound on how fast a state can evolve, tied to energy and encapsulated in the quantum speed limit. The other arises from how quickly interference patterns can be established and read out, which depends on the structure of the algorithm and the connectivity of the qubits. Analyses of these dual constraints, such as those summarized in discussions of quantum speed, emphasize that both the wavy character of the system and the architecture of the processor shape how fast useful computation can occur.

From that perspective, the time‑superposition experiment is a clever way of loosening one of those constraints without touching the other. By letting a qubit evolve along multiple time paths and then interfering them, the researchers effectively increase the amount of evolution per unit of physical time, but they do not change the fundamental requirement that interference patterns must be set up and measured correctly. As quantum hardware scales, I expect to see more such techniques that target specific bottlenecks, whether by improving connectivity, enhancing coherence, or, as in this case, rethinking what “time” means for a quantum state.

What this means for the race to quantum advantage

For companies racing to demonstrate clear quantum advantage over classical supercomputers, any method that stretches the useful evolution of qubits is enticing. The core promise of quantum computing is that, with enough qubits and the right algorithms, certain tasks such as factoring large numbers, simulating complex molecules or optimizing logistics networks can be solved at speeds unattainable by classical systems. That promise rests on the ability of qubits to exist in multiple states simultaneously and to use interference to zero in on the right answer, a dynamic that is at the heart of explanations of quantum speed as the secret behind these machines.

If a qubit can effectively experience more evolution in the same wall‑clock time by traveling multiple time paths at once, then algorithms that were previously limited by decoherence might become viable on near‑term hardware. I do not expect this single technique to suddenly make today’s noisy devices outperform the best classical clusters on every benchmark, but it adds another lever for engineers to pull as they try to close that gap. In a field where every extra layer of depth in a quantum circuit can unlock qualitatively new algorithms, bending the temporal rules even slightly could have outsized impact.

Supporting sources: The Science Behind the 2025 Nobel Discovery: Macroscopic Quantum Tunneling.
