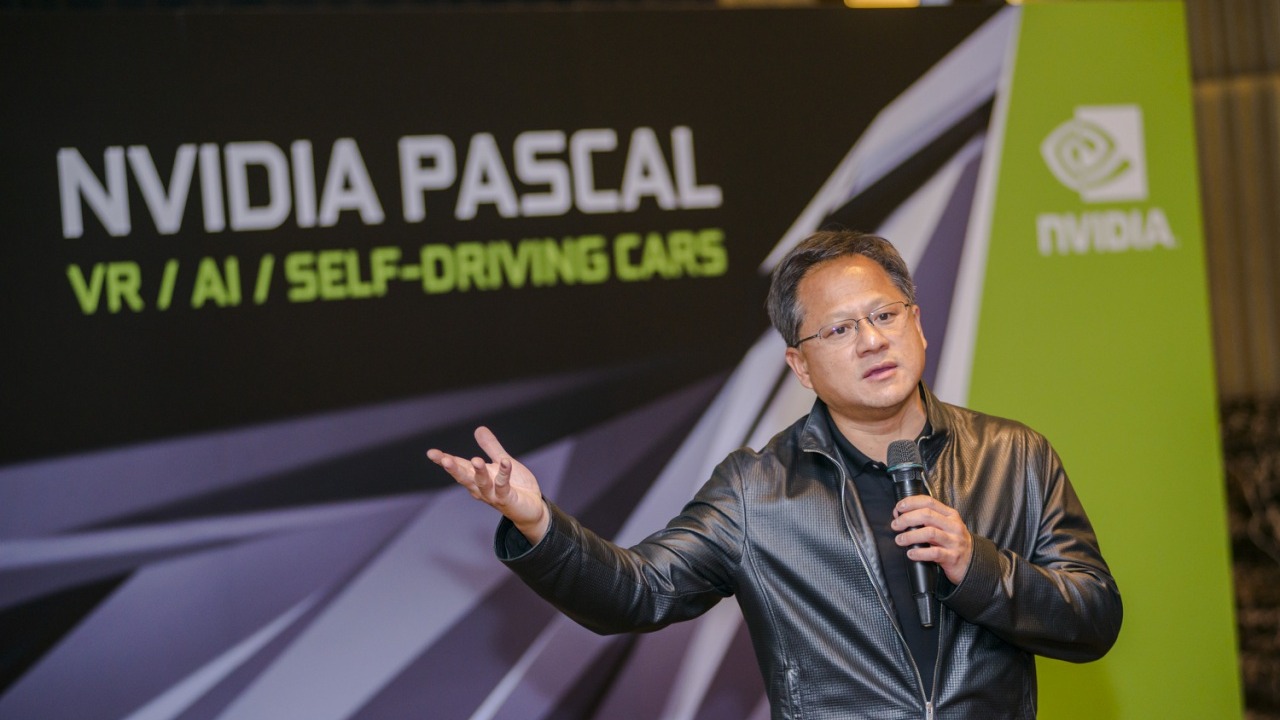

Nvidia’s Jensen Huang has turned a vivid comparison into a policy argument. In his telling, the United States can design the world’s most advanced AI chips, but China can pour concrete and wire up power so quickly that it feels like it can build a hospital in a weekend while American projects crawl along for years. His warning is not about who has the smartest researchers; it is about whether the physical and regulatory foundations of AI can keep up with the technology itself.

By contrasting years-long timelines for U.S. data centers with the breakneck pace of Chinese construction, Huang is forcing Washington and Silicon Valley to confront an uncomfortable split screen. One side shows a country that leads in semiconductors and software, the other a rival that can mobilize land, labor, and energy at industrial scale. The outcome of that tension will shape who actually captures the value of the AI boom.

The hospital-in-a-weekend line and what Huang really meant

When I look at Huang’s now-famous remark, I see less of a compliment to China and more of a critique of American process. Nvidia’s CEO has said that building a major AI data center in the United States can take around three years from start to finish, while in China authorities can move fast enough to complete entire hospitals in a single weekend. In his framing, the comparison is meant to dramatize how permitting, environmental reviews, and grid connections stretch out U.S. timelines even when the chips and software are ready to go, turning bureaucracy into a strategic liability rather than a safeguard.

Huang’s point has been repeated across formats, including a widely shared clip in which he stresses that constructing large AI facilities in the U.S. is a multi‑year slog while Chinese projects can be authorized and built at staggering speed. In that discussion, he describes how long approval chains and infrastructure upgrades slow American builds, even as he underscores that Nvidia, often styled as NVIDIA, still sits at the center of the global AI stack. The hospital metaphor is doing rhetorical work, but it is grounded in a real disparity between how quickly each system can turn policy decisions into steel, silicon, and power on the ground.

Three-year data centers versus China’s speed advantage

Behind the soundbite is a more technical concern about infrastructure cadence. Huang has explained that in the United States, large AI data centers typically take about three years to construct, from site selection and design through to commissioning and grid integration. Even smaller facilities can be delayed by land-use fights and utility bottlenecks, which means that the availability of compute is increasingly constrained by how fast concrete is poured and transformers are installed rather than by how quickly Nvidia can tape out a new GPU. That lag, he argues, is already shaping the geography of AI investment.

By contrast, he describes a Chinese system that can approve and build large projects at a pace the U.S. “cannot” match, with local governments and state-linked developers able to mobilize labor and materials in compressed timeframes. In his conversations about the AI race, Huang has warned that this speed advantage in data center construction and power hookups could let Chinese operators scale capacity more quickly, even if they are buying many of their chips from Nvidia itself. The result is a paradox: the U.S. leads in the core technology, but its slower build cycle risks ceding operational scale to a rival that can stand up facilities in a fraction of the time.

Energy, regulation, and the structural gap

Huang’s critique goes beyond construction permits and into the wiring of the power grid. He has argued that China has structural advantages across transmission and generation, with the ability to bring new energy resources online and connect them to AI campuses more rapidly than U.S. utilities can manage. In his view, the United States risks trailing China in AI if it does not reform how it plans and approves new power lines and generation, because the workloads that drive modern models demand massive, constant electricity. The bottleneck is no longer just chip supply; it is whether the grid can keep up with the appetite of clusters that run around the clock.

That theme has surfaced in public forums where Huang has spoken about the AI race. In one discussion highlighted by The MES Times, Nvidia CEO Jensen Huang described China’s speed advantage in AI infrastructure as a function of both construction capacity and energy planning, noting that the country can align local approvals, financing, and grid connections in a way that compresses project timelines. He contrasts that with a U.S. system where overlapping jurisdictions and litigation can stall even well‑funded projects, leaving developers with land and hardware but no power. For Huang, that is not an abstract policy flaw; it is a concrete reason why the U.S. could fall behind in deploying the very technologies it invents.

“China will win” walk-back and the five-layer AI stack

Huang’s bluntness has already forced him to clarify his language. He initially told one interviewer that he believed China could effectively win the AI race, a remark that sparked political backlash and questions about whether Nvidia was betting on Beijing over Washington. He later softened that stance, explaining that his concern was not about inevitability but about trajectory, and that his comments were meant as a warning to U.S. policymakers rather than a prediction of American decline. The nuance matters, because it frames his hospital comparison as a call to action rather than a concession speech.

That tension is echoed in other reporting that describes how Nvidia CEO Jensen Huang has warned that China could pull ahead if the U.S. does not address its structural disadvantages. He has also laid out what he calls a five‑layer “cake” of AI, starting with energy at the base, then chips, infrastructure, models, and applications on top. In a separate explanation shared on social media, he is quoted as saying that the United States is still generations ahead in chips and software, and that it is way ahead in open source, but that those strengths will not be enough if the bottom layers are neglected. That framing, captured in a clip where an analyst reminds viewers that NVIDIA CEO Jensen Huang is the one making the argument, turns the hospital metaphor into part of a broader theory of how AI power is built.

America’s chip lead and the race to fix the bottlenecks

For all his warnings, Huang is careful to emphasize that the United States still holds a commanding lead where it matters most today. Nvidia’s GPUs and software platforms underpin nearly every frontier AI model, and he has said that the company is “generations ahead” of rivals in the advanced systems that power modern AI. That message is reinforced in analyses that describe how, despite infrastructure challenges, maintaining the semiconductor advantage remains central to U.S. strategy. In other words, the chip race is not lost, but it can be undermined if the rest of the stack fails to keep pace.

At the same time, Huang has not shied away from describing how that lead could erode. He has warned that if China can keep building capacity at its current clip, it could outpace the U.S. in AI infrastructure even while buying many of its accelerators from Nvidia. One report notes him saying that while the U.S. retains an edge on AI chips, China can build large projects at speeds that change the competitive balance. Another account quotes him more starkly, with the Nvidia CEO saying directly that data centers take about three years to construct in the U.S. while in China they can build a hospital in a weekend. The message is consistent: unless the U.S. fixes its bottlenecks, its technological lead will sit on a fragile foundation.
