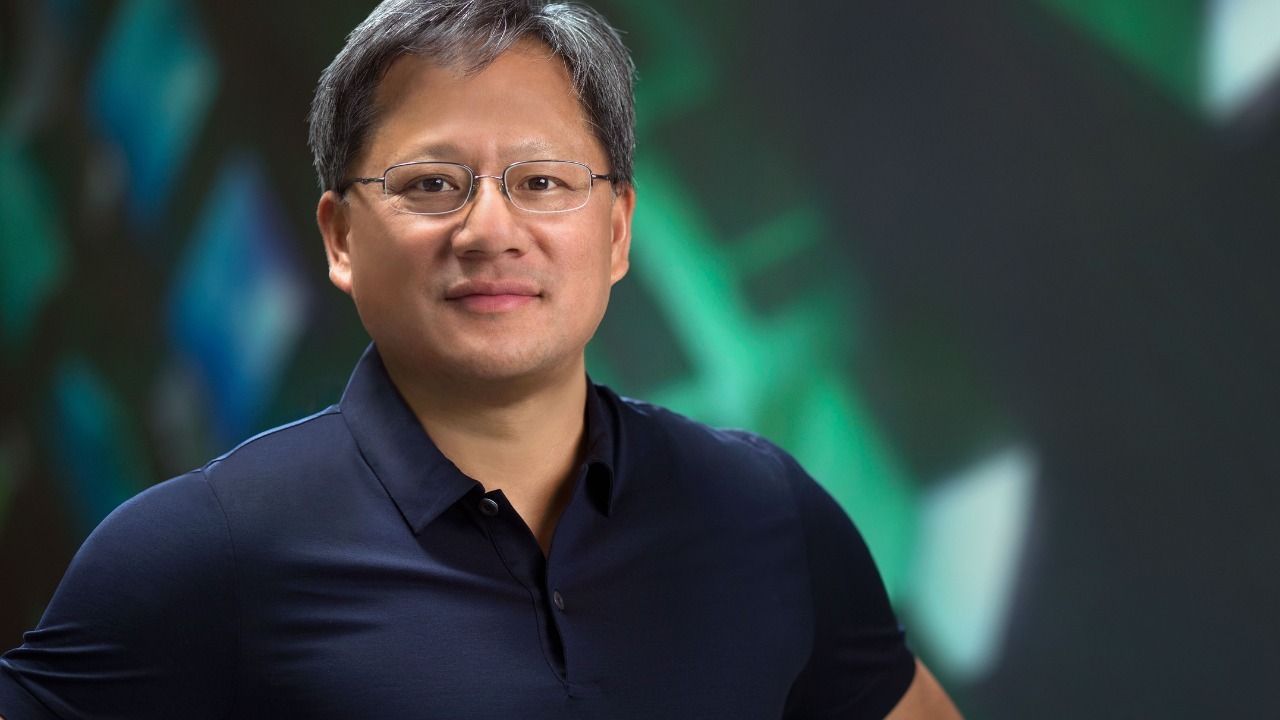

Nvidia’s rapid rise has turned its co‑founder and CEO, Jensen Huang, into one of the most closely watched voices in artificial intelligence, so when he talks about a future “God AI,” people listen. The phrase sounds like a warning that a terrifying, all‑knowing machine is just around the corner, but Huang’s own comments paint a more complicated picture of distant possibilities, present‑day limits, and the risks of getting the story wrong.

I see his recent remarks less as a countdown to an inevitable digital deity and more as a struggle to keep public imagination, investor hype, and real engineering progress from spinning into each other. The result is a debate where “God AI” is both a marketing metaphor and a cautionary tale about how we talk about power we do not yet have.

What Huang actually means by “God AI”

When Nvidia CEO Jensen Huang invokes “God AI,” he is not describing a product roadmap so much as a thought experiment about scale. In recent interviews, he has sketched a vision of an artificial intelligence that could operate on “biblical or galactic” scales, a system so vast and capable that it would resemble a kind of digital divinity in its reach. Reports on his comments describe this hypothetical God AI as something that might one day coordinate knowledge and computation across entire civilizations, far beyond today’s chatbots and image generators.

Crucially, Huang has also stressed that this kind of system, if it ever exists, belongs in the far future, not the next product cycle. Coverage of his remarks notes that he refrains from promoting God AI as a near‑term possibility and instead frames it as a distant concept, even as some commentators latch onto the phrase as if it were imminent. In one account, he is quoted describing God AI as a notion that could emerge on those biblical or galactic scales “someday.” That framing has fueled both excitement and skepticism among technologists and investors, who see the term as equal parts prophecy and provocation.

Why he says it is not coming soon

Despite the headline‑ready language, Huang has been explicit that no one is close to building anything like a God AI. One recent summary of his position describes him as saying that no company is close to creating such an omnipotent system and that current work is still focused on much narrower tools that help with tasks like coding, design, and everyday productivity. The same reporting notes that he predicts God AI is “on the way” only in the sense that the industry is moving toward more capable systems over decades, not that a single breakthrough will suddenly deliver a machine that knows and controls everything. That nuance often gets lost when people repeat the prediction without context.

Huang has also pushed back on the idea that researchers today have any “reasonable ability” to create a truly god‑like system. In one detailed account of his comments, he explained that no researcher currently has a credible path to God AI and that claims of imminent AI omnipotence are misleading. He has criticized influencers and commentators who promote a “doomer” narrative, arguing that exaggerated fears have been “extremely” unhelpful for public understanding and policy. That reporting quotes him directly on the gap between today’s systems and the myth of an all‑powerful AI.

The fight against AI “doomer” narratives

Huang’s irritation with apocalyptic talk is not subtle. He has complained that when “90%” of the messaging around AI focuses on the end of the world and pessimism, it distorts public debate and scares people away from useful innovation. In one widely cited exchange, he argued that this relentless negativity is “not helpful to society.” Coverage of that exchange describes him as effectively begging audiences to stop being so negative about AI and to recognize its potential benefits alongside the risks.

That frustration extends to what he sees as sensationalist talk of God AI itself. In one account of a recent appearance, Huang is described as pushing back on AI doomsday talk, saying that while he and his generation grew up enjoying science fiction, it is “not helpful” when those stories are treated as forecasts rather than entertainment. He has warned that turning speculative scenarios into policy drivers can distort regulation and investment, a concern reflected in reporting that quotes him criticizing how science‑fiction imagery bleeds into public understanding and policymaking.

Superintelligence, bubbles, and the real trajectory of AI

Huang’s skepticism about God AI arriving any time soon does not mean he is modest about what current systems can do. At a major industry showcase earlier in the AI boom, he welcomed the rise of superintelligent AI that can handle complex tasks in fields like design, simulation, and robotics. Reporting on that event notes that he stopped short of proclaiming the arrival of god‑like artificial general intelligence, a label more often associated with AGI evangelists such as OpenAI CEO Sam Altman and Tesla’s leadership. He still described a future in which AI becomes a pervasive assistant embedded in every device and workflow.

He has also leaned into the idea that AI is both a bubble and a revolution. One social‑media clip that has circulated widely among investors, titled “Jensen Huang EXPOSED 3 Key Things Are Happening with the AI BUBBLE. Is AI really a bubble, or the start of a new computing revolut…”, frames his comments as a breakdown of how speculation, infrastructure build‑out, and genuine breakthroughs are colliding. The clip underscores how even his more technical points about computing power and superintelligence are quickly repackaged into hype cycles, with his analysis reduced to a slogan about an AI bubble.

Religion, culture, and the “God AI” metaphor

The language of God AI does not exist in a vacuum. It taps into a broader cultural moment in which religious imagery and AI speculation are increasingly intertwined. One recent analysis recalls how Joe Rogan claimed that if Jesus were to return, he would “absolutely” return as AI, a remark that blends theology, comedy, and tech futurism in a way that is hard to disentangle. That same reporting argues that Huang has contributed to this atmosphere by talking about God AI in ways that fuel an AI arms race narrative, with references to biblical and galactic scales that resonate far beyond engineering circles, and it portrays Nvidia as stoking competitive fervor.

Other coverage has tried to pin down what Huang himself thinks of the religious overtones. One detailed explainer notes that he has talked about God AI as a concept that could exist in the distant future while refraining from promoting it as a near‑term reality. That same report describes how he has acknowledged that such a system, if ever built, could be “potentially more dangerous than nukes.” The comparison underscores why the metaphor of God carries such weight even as he insists it is not around the corner.
