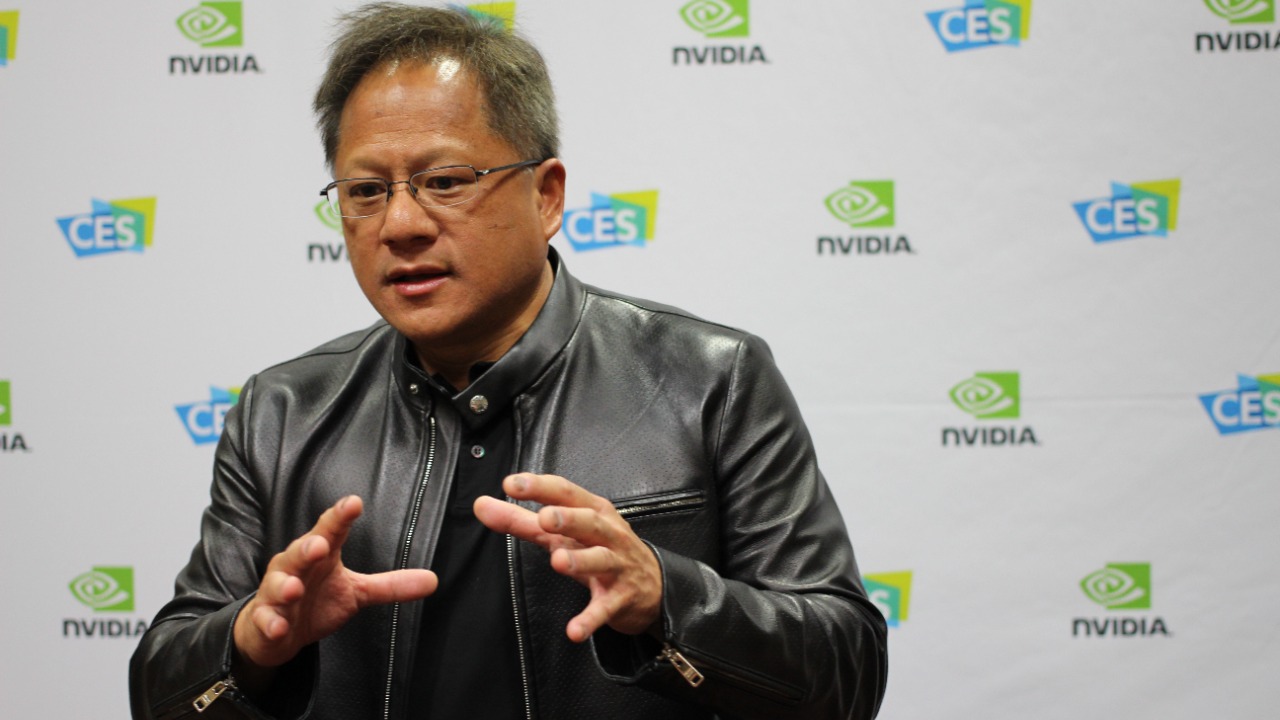

Nvidia’s chief executive has spent years hyping the power of artificial intelligence, but his latest remarks landed more like a sermon than a sales pitch. Speaking at the company’s flagship events and in media appearances, Jensen Huang sketched a future where a so‑called “God AI” exists on “biblical or galactic” timescales, even as he insisted that today’s systems are far from that level and that the real disruption will be felt in jobs and industry long before anything resembling a deity‑like machine appears. The result is a rare moment when the head of Silicon Valley’s most influential chipmaker is trying to cool both apocalyptic fear and utopian fantasy at the same time.

Huang’s warning rattled the Valley because it came from the person whose hardware underpins almost every major AI model in production. When the architect of the current boom says the ultimate version of the technology is both unimaginably distant and widely misunderstood, it forces founders, investors, and policymakers to reassess what they are actually building and how quickly it will reshape work, regulation, and power.

The “God AI” that lives on a biblical clock

Huang’s most arresting phrase is his suggestion that some form of “God AI” could exist someday, but only on a timeline he describes as “biblical or galactic.” In other words, he is telling an industry obsessed with quarterly product cycles that the kind of all‑knowing, all‑powerful system that animates science fiction is not coming next year, or even this decade. In his view, the gap between today’s large language models and that imagined endpoint is so vast that treating them as steps on a smooth curve is misleading. He underscored the point when he said that “God AI” may exist someday but “not next week, next year, or even this decade.”

At the same time, he has been careful to keep that notion grounded in present‑day engineering reality. In another appearance, Huang reiterated that while he believes some kind of “God AI” could be on the horizon in a very long‑term sense, the current state of the art is nowhere near that level of autonomy or understanding. He treats “God AI” as a distant possibility rather than an imminent product roadmap. By stretching the timeline out to cosmic scales, he is trying to puncture both the hype of those who promise near‑term superintelligence and the fear of those who see current chatbots as the first step toward an unstoppable overlord.

From myth to “extremely hurtful” doomer talk

Huang has not stopped at abstract metaphors. He has also labeled the popular narrative that AI will inevitably end the world as not just wrong but actively damaging. He has criticised what he calls a growing “doomer” narrative around artificial intelligence, arguing that constant talk of catastrophe distorts public understanding and makes it harder to have a rational debate about how the technology should be developed and deployed responsibly. That was the stance he laid out when he pushed back on “AI will end the world” stories. In his telling, the loudest voices predicting extinction are crowding out more practical conversations about safety, robustness, and accountability.

On the No Priors podcast, Huang went further, describing the “God AI” concept itself as a myth and calling the doomer narrative “extremely hurtful” to the industry’s ability to attract talent and investment. He argued that influential commentators and some researchers have exaggerated both the capabilities and the risks of current systems, creating a feedback loop of fear that does not match the underlying science. That critique surfaced in detailed reporting on his claim that “God AI” is a myth and that this narrative “just does not exist.” For Huang, the danger is not that people are too cautious, but that they are so spooked by speculative scenarios that they miss the concrete ways AI is already reshaping industries.

“Don’t be a doomer”: a Silicon Valley pep talk

That frustration has turned into a kind of pep talk for the tech sector. In one widely discussed exchange, he urged audiences to “stop being so negative” about AI, insisting that the technology will improve lives in “some way” even as it introduces new challenges. The message, captured in coverage under the banner “Nvidia CEO Says Everyone Should Stop Being So Negative About AI,” is that relentless doom and gloom risks paralyzing innovation at the very moment when the tools are finally good enough to be useful at scale. That sentiment came through clearly in his call to dial back the pessimism.

He has sharpened that line with a simple slogan: “Don’t be a doomer.” In his view, no one currently has “any reasonable ability to create God AI,” and treating that as a near‑term risk distracts from the real work of making current systems reliable, secure, and broadly accessible. He has argued that the idea that a single omnipotent system is about to emerge is unrealistic today, and that energy would be better spent on incremental progress in areas like healthcare, logistics, and creative tools, a perspective laid out in detail in his plea to calm the industry’s anxiety. For founders and engineers in Silicon Valley, that is both reassurance and marching orders: keep building, but keep your expectations tethered to what the chips can actually do.

A “biblical-scale” reality check on jobs and power

Huang’s biblical language is not only about distant superintelligence. He has also delivered what one account described as a “biblical‑scale reality check” on the current AI boom itself, telling investors and partners that the industry is “nowhere close” to the ultimate version of the technology that some evangelists describe. In a conversation highlighted by Moz Farooque and others, he bluntly acknowledged that expectations for near‑term breakthroughs are far ahead of reality, even as Nvidia’s valuation and its ticker symbol, NVDA, have become shorthand for AI exuberance.

Where he is far less cautious is in predicting the impact on work. In another interview, Huang warned that “everyone’s job will be affected by AI,” while adding that he hopes it will “enhance” most jobs, not destroy them. He has framed AI as a general‑purpose technology that will touch every sector, from software engineering to manufacturing and media, and has argued that the real policy challenge is managing that transition so that workers benefit from productivity gains instead of being left behind, a theme that runs through his warning that every job will feel the effects. For Silicon Valley, that is both an opportunity to sell new tools and a responsibility to help retrain the people whose workflows those tools will upend.

Why Huang thinks more AI, not less, makes society safer

Huang’s answer to those concerns is not to slow down, but to invest more. He has argued that pouring more money into the AI landscape actually makes society safer, because it accelerates the development of better safety techniques, more robust infrastructure, and a broader base of expertise. In one interview, reported by Jose Enrico, Huang explained that he believes continued funding and experimentation will help the industry discover and fix problems faster, rather than allowing a small group of actors to monopolize powerful systems in secret. He laid out that logic when he urged people to stop talking only about AI’s potential harms and noted that “50” is a key figure in his argument about scale.
