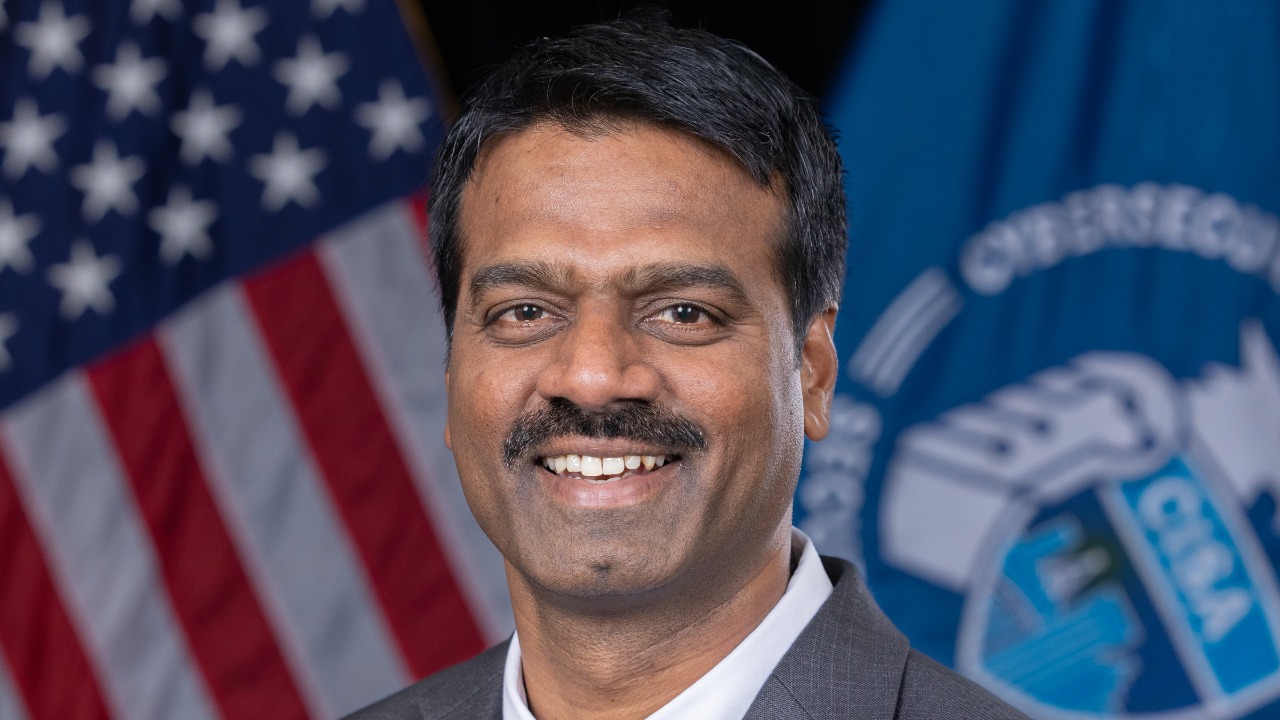

The acting head of America’s top cyber defense agency is under scrutiny after feeding sensitive internal documents into a public artificial intelligence chatbot. Dr. Madhu Gottumukkala, President Trump’s interim appointee to lead the Cybersecurity and Infrastructure Security Agency, is accused of uploading “for official use only” material into ChatGPT, raising alarms about data exposure and basic judgment. The episode has quickly become a test of how the United States manages powerful AI tools inside its own most security‑conscious offices.

At stake is not only whether any particular file was compromised, but whether the government’s own cyber chief followed the rules he was supposed to enforce. The controversy has triggered internal reviews, congressional attention, and a broader debate over how far senior officials can lean on commercial AI systems when handling sensitive work.

How the cyber chief ended up pasting sensitive files into ChatGPT

According to multiple accounts, the trouble began when Dr. Madhu Gottumukkala, serving as CISA’s interim head, used the public version of ChatGPT to process internal documents that were explicitly labeled “for official use only.” Reporting describes how the CISA leader uploaded sensitive material into the chatbot, apparently seeking help with drafting or analysis, even though the files were not cleared for public release. One detailed account notes that CISA’s interim head fed these restricted documents into the tool, prompting immediate concerns over judgment and governance inside the agency charged with defending federal networks.

Those concerns were amplified by the fact that the uploads did not occur in a vacuum. Cybersecurity sensors at CISA itself reportedly detected the activity, flagging that the acting director’s account was sending multiple files to an external AI service. One account describes how cybersecurity sensors at CISA picked up the uploads over the summer, with one official specifying that there were multiple documents involved. That the agency’s own monitoring tools had to catch its leader underscores how the incident cuts against the culture of caution CISA has tried to build.

The Trump connection and a rare AI exception at CISA

The political context matters here because Dr. Madhu Gottumukkala is not just any civil servant, but President Trump’s acting cybersecurity chief. He serves as acting director of the Cybersecurity and Infrastructure Security Agency, a role that puts him at the center of federal efforts to protect critical infrastructure and election systems. Profiles of Madhu Gottumukkala emphasize that he leads the Cybersecurity and Infrastructure Security Agency at a time when foreign adversaries and criminal groups are probing U.S. systems daily. That makes his personal technology choices, and his adherence to internal rules, a matter of national security rather than mere office policy.

What makes the ChatGPT uploads even more striking is that Gottumukkala had reportedly been granted a special waiver to use the tool in the first place. While most CISA staff were blocked from using public AI services by default, he received an exception earlier in his tenure as acting director. One account notes that Gottumukkala was reportedly given permission to use ChatGPT at a time when other employees were barred from accessing such tools, particularly for anything touching classified information. That carve‑out was supposed to enable innovation at the top, but it now looks like a case study in how special permissions can backfire if guardrails are not enforced.

What was in the files and how exposed they might be

Officials have not publicly listed every document that went into ChatGPT, but the descriptions that have emerged are specific enough to raise eyebrows. The files are described as “for official use only,” a designation that falls below formal classification but still signals that the material is sensitive and not meant for public distribution. Reporting on the uploads indicates that the acting director pushed multiple internal documents into the chatbot, including material that would normally be handled only on secured systems. One detailed account of the sensitive “for official use only” files stresses that they were never intended to leave government‑controlled environments.

The core risk is that once such documents are uploaded to a public AI service, the government loses control over how that data is stored, processed, or potentially reused. Commercial AI providers typically retain some user inputs for model training or security monitoring, which means the content can be replicated across internal systems outside federal oversight. Accounts of the incident note that sensitive files were uploaded to ChatGPT in a way that could have enabled unauthorized retention or disclosure of government material. Even if no adversary has yet accessed the data, the mere possibility that it now resides in external systems is enough to trigger serious incident‑response procedures.

Internal fallout, congressional pressure, and the “46” question

The revelation that the nation’s cyber defense chief mishandled sensitive information has already sparked internal reviews and political blowback. Lawmakers on Capitol Hill have demanded briefings on how the uploads were allowed to happen and what steps are being taken to contain any damage. One account of the episode notes that House members are already probing whether staff felt unable to raise concerns earlier due to fear of retaliation. That same account highlights the figure “46,” a reminder that even a single misjudgment by a senior official can ripple across dozens of systems, offices, or individuals who now have to help clean up the mess.

Inside the executive branch, the incident has reportedly triggered a formal review of how AI tools are approved and monitored for senior officials. The fact that the acting director had a special waiver has become a focal point, with critics arguing that the process for granting such exceptions was too informal and lacked clear enforcement mechanisms. One report on the controversy describes staff who were reluctant to challenge the director’s use of ChatGPT even as they worried about the implications. That dynamic, where subordinates hesitate to question the boss on security practices, is exactly what robust governance is supposed to prevent.

What this reveals about AI governance inside the federal government

For all the focus on one official’s actions, the episode exposes a broader gap in how the federal government is managing generative AI. Agencies like the Cybersecurity and Infrastructure Security Agency are racing to harness tools like ChatGPT for tasks ranging from code review to policy drafting, yet their policies often lag behind the technology. The fact that the interim head of CISA could upload sensitive files into a public chatbot, even with a waiver, suggests that existing rules are not granular enough about what kinds of content are off‑limits and how usage should be logged.

The incident also highlights the tension between innovation and risk in high‑stakes environments. Senior officials often want to experiment with new tools to move faster, especially under pressure from the White House to modernize government operations. Yet the same tools can create new attack surfaces if they are not deployed in tightly controlled ways. Accounts of the controversy, including one that describes how Trump’s cyber chief uploaded files to an external AI service, make clear that the line between acceptable experimentation and reckless exposure is still being drawn in real time. Until that line is clearer, the government’s own cyber leaders will remain under pressure to prove that they can use AI without putting the systems they defend at risk.
