Engineers at MIT have turned one of computing’s biggest headaches, waste heat, into the main act. By sculpting “dust-sized” silicon structures that steer heat with the kind of precision usually reserved for electrical current, they have shown that thermal energy can perform matrix math with up to 99% accuracy, a level that starts to look practical rather than purely experimental. The work hints at a future in which the heat bleeding off chips in data centers, smartphones, and cars is not just managed but recruited as a parallel computing resource.

Instead of relying on transistors switching on and off, these devices encode numbers in temperature differences and let heat flow through carefully designed pathways. I see this as a radical reframing of what a chip is for: not just a component that must stay cool at all costs, but a micro-scale landscape where electrical and thermal computation can coexist, and in some cases, where heat alone can do the job.

How heat became a computing signal

The core idea behind MIT’s approach is deceptively simple: treat heat like a signal that can be routed, combined, and read out, just as engineers already do with voltage. Instead of logic gates, the researchers build tiny silicon structures whose geometry controls how thermal energy moves from one side to the other. When a temperature pattern representing an input matrix is applied, the structure’s internal design transforms that pattern into a new temperature distribution that encodes the result of the calculation. In other words, the chip’s shape itself becomes the algorithm.
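Here is a minimal sketch of why heat flow can compute a matrix product. It assumes the simplest lumped model, which is an illustration and not MIT's published design. In that model, each path from an input terminal to an output terminal acts as a single thermal conductance. Fourier's law then makes the heat carried by each path proportional to the temperature difference across it. If the outputs are held at a common reference temperature, the heat collected at each output is a weighted sum of the input temperatures, which is exactly a matrix-vector product. The function name and the numbers are hypothetical.

```python
def output_heat_flows(conductance, input_temps):
    """Heat flow (W) arriving at each output terminal in a toy lumped model.

    conductance[j][i] is the (hypothetical) thermal conductance in W/K of the
    path from input i to output j. input_temps[i] is input i's temperature
    rise in K above the outputs, which share one reference temperature.
    """
    # Fourier's law per path: q = G * delta_T, summed over all paths into output j.
    return [sum(g * t for g, t in zip(row, input_temps)) for row in conductance]


G = [[0.5, 0.25],
     [0.125, 1.0]]
T = [2.0, 4.0]
print(output_heat_flows(G, T))  # [2.0, 4.25]
```

In this picture the conductances play the role of the matrix and the input temperatures play the role of the vector. Reading the outputs amounts to measuring heat flow.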

To prove that this was more than a thought experiment, the team used a carefully engineered technique to turn waste heat into a functional processing medium. In their tests, these heat-powered silicon chips performed mathematical operations with up to 99% accuracy, a figure that rivals early analog electronics and some low-precision digital accelerators. The fact that the devices are fabricated in silicon, rather than exotic materials, means they can in principle sit alongside conventional microelectronics and tap into the same waste heat that current chips struggle to dissipate.

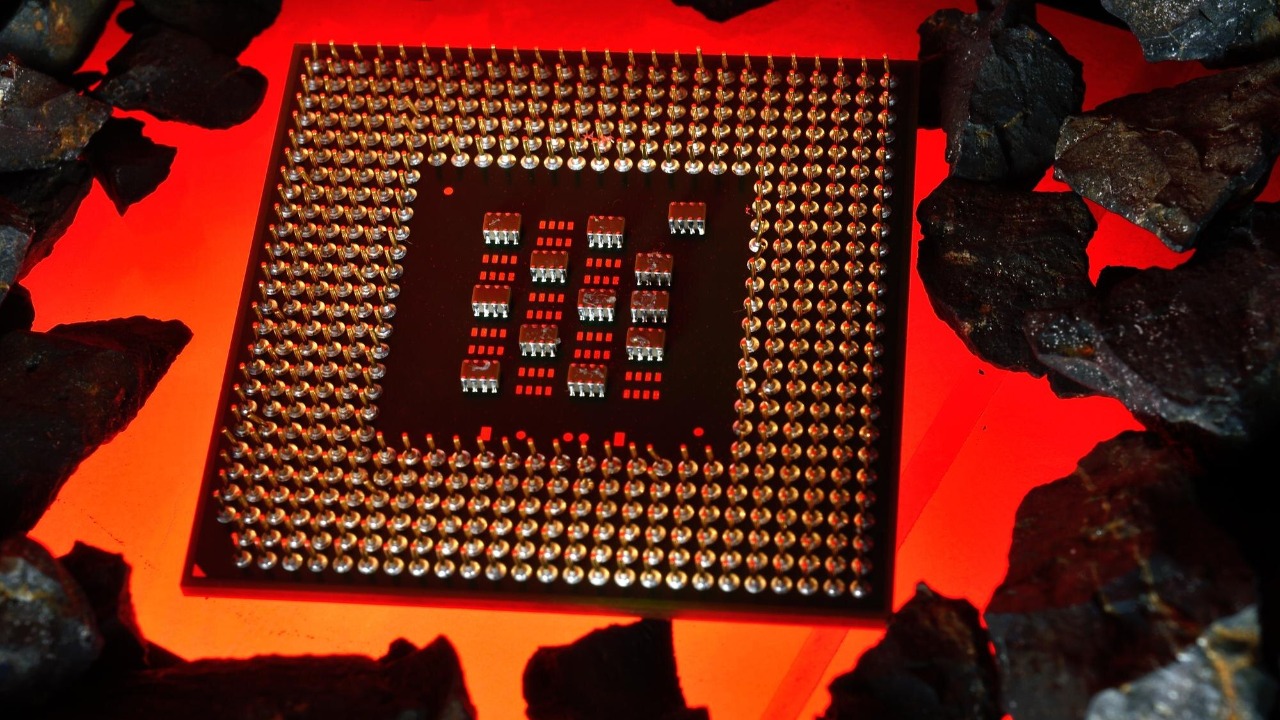

Inside the “dust-sized” silicon structures

At the heart of the work are tiny silicon structures, small enough to be described as dust-sized, that are patterned with intricate channels and regions to guide heat. Each structure is designed so that when one side is warmed in a specific pattern, the resulting temperature field on the other side corresponds to the output of a matrix multiplication. Matrix operations underpin everything from 3D graphics to neural networks. A set of well-tuned thermal blocks, each built for one matrix, could therefore in principle serve a surprisingly wide range of workloads.
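Heat flows only from hot to cold, so a passive conductance can only contribute a non-negative weight. A neural-network matrix, however, usually mixes positive and negative entries. One standard workaround is to split the signed matrix into its positive and negative parts, build each part as its own thermal block, and subtract the two sets of outputs after readout. This is an assumption for illustration, not a technique confirmed by the MIT reporting. The helper names below are hypothetical.

```python
def matvec(matrix, vector):
    """Plain matrix-vector product; stands in for the heat collected by one block."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def split_signed(matrix):
    """Split M into non-negative parts P and N such that M = P - N."""
    positive = [[max(w, 0.0) for w in row] for row in matrix]
    negative = [[max(-w, 0.0) for w in row] for row in matrix]
    return positive, negative


def signed_thermal_matvec(matrix, input_temps):
    # Each part needs only non-negative conductances, so each could be a passive block.
    positive, negative = split_signed(matrix)
    q_pos = matvec(positive, input_temps)
    q_neg = matvec(negative, input_temps)
    # The subtraction is assumed to happen after readout, outside the thermal structure.
    return [p - n for p, n in zip(q_pos, q_neg)]


M = [[1.0, -2.0],
     [-0.5, 3.0]]
x = [4.0, 1.0]
print(signed_thermal_matvec(M, x))  # [2.0, 1.0]
```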

In practice, the researchers treat each structure as a physical implementation of a particular matrix. When an input vector is encoded as a set of temperature differences, the heat flow through the silicon performs the multiplication in one shot, rather than stepping through a sequence of digital instructions. Reporting on these tiny devices notes that the resulting matrix multiplications were 99% accurate in many cases. That result underscores how precisely the thermal signal can be controlled, despite heat’s chaotic reputation at small scales.
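Real structures are not bundles of independent paths, because heat spreads through shared regions of silicon. The sketch below is a toy lumped network with made-up node names and conductances, not a model of the actual devices. It solves the steady-state energy balance at each internal node and then reads the heat flowing into each output. That balance is linear in temperature, so the outputs stay a fixed linear function of the inputs. Probing the network with one unit input at a time therefore recovers the matrix it implements.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def network_output_flows(edges, inputs, outputs, internal, input_temps):
    """Steady-state heat flow (W) into each output of a lumped conductance network.

    edges holds (node_a, node_b, conductance_W_per_K) tuples. Inputs are held at
    input_temps and outputs at the reference temperature 0. Internal nodes
    settle wherever energy balance puts them.
    """
    temps = dict(zip(inputs, input_temps))
    temps.update({node: 0.0 for node in outputs})
    index = {node: k for k, node in enumerate(internal)}
    a = [[0.0] * len(internal) for _ in internal]
    b = [0.0] * len(internal)
    for u, v, g in edges:
        for p, q in ((u, v), (v, u)):
            if p in index:  # energy balance at p: sum of g * (T_q - T_p) is zero
                a[index[p]][index[p]] += g
                if q in index:
                    a[index[p]][index[q]] -= g
                else:
                    b[index[p]] += g * temps[q]
    temps.update(zip(internal, solve_linear(a, b)))
    flows = {node: 0.0 for node in outputs}
    for u, v, g in edges:
        for p, q in ((u, v), (v, u)):
            if p in flows:
                flows[p] += g * (temps[q] - temps[p])
    return [flows[node] for node in outputs]


def effective_matrix(edges, inputs, outputs, internal):
    # Each unit-input response is one column of the implemented matrix.
    n = len(inputs)
    columns = [network_output_flows(edges, inputs, outputs, internal,
                                    [1.0 if k == i else 0.0 for k in range(n)])
               for i in range(n)]
    return [list(row) for row in zip(*columns)]


# Hypothetical structure: two inputs share a "hub" region; x0 also has a direct path to y1.
INPUTS, OUTPUTS, INTERNAL = ["x0", "x1"], ["y0", "y1"], ["hub"]
EDGES = [("x0", "hub", 1.0), ("x1", "hub", 1.0),
         ("hub", "y0", 1.0), ("hub", "y1", 1.0),
         ("x0", "y1", 0.5)]
print(network_output_flows(EDGES, INPUTS, OUTPUTS, INTERNAL, [4.0, 8.0]))  # [3.0, 5.0]
print(effective_matrix(EDGES, INPUTS, OUTPUTS, INTERNAL))  # [[0.25, 0.25], [0.75, 0.25]]
```

Changing the conductances in `EDGES`, which stand in for the structure's geometry, changes the recovered matrix. That is the sense in which the shape itself carries the computation.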

From simulation to silicon

Before committing to fabrication, the MIT team leaned heavily on simulation to explore which shapes and layouts would yield reliable thermal behavior. They modeled how heat would diffuse through candidate structures when exposed to different input patterns, then iteratively refined the geometry until the simulated outputs matched the target matrix operations. This virtual design loop let them test many more configurations than would ever be practical to build in a clean room, and it also revealed how sensitive the computation would be to manufacturing imperfections.
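The design loop described in the paragraph above can be illustrated with a deliberately small stand-in for a thermal simulator. Everything here is an assumption for illustration:

- The model has direct input-to-output channels plus one shared hub region that leaks to ambient.
- The design variables are conductances, clipped to a hypothetical fabrication limit `g_max`.
- The refinement uses finite-difference gradient descent. This is a generic optimizer, not the method the MIT team reported.

A final check jitters every conductance by up to 5% to gauge sensitivity to manufacturing imperfections.

```python
import random


def simulate(design, input_temps, hub_leak=0.1):
    """Toy lumped model (hypothetical): heat flow (W) into each output."""
    direct, to_hub, from_hub = design
    # Energy balance at the shared hub: heat in from the inputs equals heat out
    # to the outputs plus a fixed leak, all of which sit at reference temperature 0.
    total = sum(to_hub) + sum(from_hub) + hub_leak
    hub_temp = sum(g * t for g, t in zip(to_hub, input_temps)) / total
    return [sum(g * t for g, t in zip(row, input_temps)) + b * hub_temp
            for row, b in zip(direct, from_hub)]


def effective_matrix(design, n_inputs):
    # Probe with one unit input at a time; each response is a matrix column.
    columns = [simulate(design, [1.0 if k == i else 0.0 for k in range(n_inputs)])
               for i in range(n_inputs)]
    return [list(row) for row in zip(*columns)]


def mismatch(design, target):
    """Sum of squared differences between the simulated and target matrices."""
    m = effective_matrix(design, len(target[0]))
    return sum((a - b) ** 2 for ra, rb in zip(m, target) for a, b in zip(ra, rb))


def unpack(params, n_out, n_in):
    k = n_out * n_in
    direct = [params[j * n_in:(j + 1) * n_in] for j in range(n_out)]
    return direct, params[k:k + n_in], params[k + n_in:]


def design_loop(target, steps=500, lr=0.05, g_max=2.0, h=1e-6):
    n_out, n_in = len(target), len(target[0])
    params = [0.0] * (n_out * n_in) + [0.5] * (n_in + n_out)

    def loss(p):
        return mismatch(unpack(p, n_out, n_in), target)

    history = [loss(params)]
    for _ in range(steps):
        base = history[-1]
        grad = []
        for k in range(len(params)):
            bumped = params[:]
            bumped[k] += h
            grad.append((loss(bumped) - base) / h)  # forward finite difference
        # Step downhill, then clip to conductances fabrication could plausibly realize.
        params = [min(max(p - lr * g, 0.0), g_max) for p, g in zip(params, grad)]
        history.append(loss(params))
    return unpack(params, n_out, n_in), history


def fabrication_spread(design, target, rel_error=0.05, trials=200, seed=1):
    """Worst mismatch when every conductance is off by up to +/- rel_error."""
    rng = random.Random(seed)
    direct, to_hub, from_hub = design

    def jitter(gs):
        return [g * (1.0 + rng.uniform(-rel_error, rel_error)) for g in gs]

    worst = 0.0
    for _ in range(trials):
        perturbed = ([jitter(row) for row in direct], jitter(to_hub), jitter(from_hub))
        worst = max(worst, mismatch(perturbed, target))
    return worst


TARGET = [[0.8, 0.2],
          [0.3, 0.6]]
design, history = design_loop(TARGET)
print(f"mismatch: {history[0]:.3f} -> {history[-1]:.2e}")
print(f"worst case with 5% fabrication error: {fabrication_spread(design, TARGET):.2e}")
```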

Those simulation efforts focused on relatively small problems, such as simple matrices with two or three columns, which are easier to analyze and verify. By starting with these constrained cases, the researchers could show that their microelectronic structures behaved as intended and that the same design principles could, in theory, scale to larger arrays. The group’s own account of the work emphasizes that the same simulation framework can be extended to the more complex matrices relevant to deep learning and other data-intensive tasks, even if those larger designs have not yet been fully realized in hardware.
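One plausible way to reuse small designs on bigger problems is to split a wide matrix into column tiles of at most three columns. Each tile would be its own thermal block, and the partial outputs would be summed. This is a hypothetical scheme, not something the researchers have described. The sketch checks that the tiled result matches an ordinary matrix-vector product. The function names are illustrative.

```python
def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def column_tiles(matrix, max_cols=3):
    """Yield (start, stop, tile) slices of at most max_cols columns each."""
    n_cols = len(matrix[0])
    for start in range(0, n_cols, max_cols):
        stop = min(start + max_cols, n_cols)
        yield start, stop, [row[start:stop] for row in matrix]


def tiled_matvec(matrix, vector, max_cols=3):
    result = [0.0] * len(matrix)
    for start, stop, tile in column_tiles(matrix, max_cols):
        # Each tile stands in for one small thermal block fed its slice of the inputs.
        partial = matvec(tile, vector[start:stop])
        result = [r + p for r, p in zip(result, partial)]
    return result


M = [[1, 2, 3, 4, 5],
     [0, 1, 0, 1, 0]]
x = [1, 1, 1, 1, 2]
print(tiled_matvec(M, x))  # [20.0, 2.0]
```

The trade-off in this hypothetical scheme is that the summation happens outside the thermal blocks. Any readout error in each tile would therefore accumulate across tiles.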

Why 99% accuracy matters

In digital computing, anything less than perfect accuracy sounds like a bug, but in many modern workloads, especially in artificial intelligence, a small amount of numerical error is acceptable. What makes MIT’s result striking is that their heat-based matrix multiplication reaches up to 99% accuracy while relying on inherently analog thermal processes. That level of fidelity is high enough for many inference tasks in neural networks, where models already tolerate quantization and approximation in exchange for speed and efficiency.
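The reporting does not say exactly how accuracy was scored. One common convention, assumed here, is one minus the relative L2 error between the measured output vector ŷ and the exact product y. Under that definition, 99% accuracy means the error vector is 1% as long as the true answer. A worked example with made-up numbers:

```latex
\text{accuracy} = 1 - \frac{\lVert \hat{\mathbf{y}} - \mathbf{y} \rVert_2}{\lVert \mathbf{y} \rVert_2}

\mathbf{y} = (3,\ 4), \quad \hat{\mathbf{y}} = (3.03,\ 3.96)
\;\Rightarrow\;
\frac{\sqrt{0.03^2 + (-0.04)^2}}{\sqrt{3^2 + 4^2}} = \frac{0.05}{5} = 0.01
\;\Rightarrow\;
\text{accuracy} = 1 - 0.01 = 99\%
```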

The team’s own reporting on these structures notes that the devices achieved 99% accuracy in many cases when benchmarked against ideal mathematical outputs. In other words, the thermal computation did not just vaguely resemble the right answer; it closely tracked it across a range of test inputs. A separate account of the work highlights that the 99% figure was measured systematically, not cherry-picked from a single favorable example. For applications like sensor fusion in autonomous vehicles or on-device AI in smartphones, where power budgets are tight and some error is tolerable, a 99% accurate thermal accelerator could be a compelling trade.
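Measuring accuracy systematically means scoring many random inputs rather than one favorable case. The sketch below assumes a hypothetical readout model in which each output is off by Gaussian noise proportional to its value (`rel_noise`). It scores each trial as one minus the relative L2 error. Both the noise model and the metric are illustrative assumptions, not the team's protocol. The function reports the mean and worst-case accuracy over all trials.

```python
import math
import random


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def accuracy(estimate, exact):
    """One minus the relative L2 error (an assumed scoring convention)."""
    err = math.sqrt(sum((e - x) ** 2 for e, x in zip(estimate, exact)))
    return 1.0 - err / math.sqrt(sum(x * x for x in exact))


def noisy_thermal_matvec(matrix, vector, rng, rel_noise):
    # Hypothetical readout: exact product with Gaussian error proportional to each output.
    return [y * (1.0 + rng.gauss(0.0, rel_noise)) for y in matvec(matrix, vector)]


def evaluate(matrix, trials=1000, rel_noise=0.01, seed=0):
    """Return (mean, worst) accuracy over random positive input vectors."""
    rng = random.Random(seed)
    scores = []
    for _ in range(trials):
        x = [rng.uniform(0.5, 1.5) for _ in matrix[0]]
        exact = matvec(matrix, x)
        scores.append(accuracy(noisy_thermal_matvec(matrix, x, rng, rel_noise), exact))
    return sum(scores) / len(scores), min(scores)


M = [[0.8, 0.2],
     [0.3, 0.6]]
mean_acc, worst_acc = evaluate(M)
print(f"mean accuracy {mean_acc:.4f}, worst {worst_acc:.4f}")
```

Reporting the worst case alongside the mean is what separates a systematic benchmark from a single favorable example.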

What heat-powered chips could change

If this approach matures, I expect it to reshape how engineers think about thermal management in everything from data centers to wearables. Instead of designing elaborate cooling systems solely to remove heat, chip architects could carve out regions of silicon that intentionally harvest temperature gradients for computation. In a server packed with GPUs, for example, dedicated thermal blocks might sit near the hottest components, quietly performing matrix operations that would otherwise consume extra electrical power. The same logic could apply in electric vehicles, where power electronics and battery packs generate steady heat that today is mostly wasted.

There is also a strategic angle for organizations that already invest heavily in AI hardware. A lab like the MIT-IBM Watson AI Lab, which appears in coverage of the work, has a direct interest in architectures that can stretch performance per watt. If thermal computing blocks can be integrated into standard silicon processes, they could complement digital accelerators rather than replace them, offloading specific matrix operations that map cleanly onto heat flow. The fact that the current prototypes already reach 99% accuracy suggests that the concept has cleared an important threshold, even if significant engineering work remains before it can be deployed in commercial systems.
