Artificial intelligence is colliding with an older, more fragile technology: the electric grid that keeps the lights on. As Nvidia’s chief executive warns that AI’s hunger for power could overwhelm that system, the cost of keeping data centers humming is already showing up on household bills. The same buildout that is turning Nvidia into a cornerstone of the digital economy is now forcing a reckoning over who pays for the electricity that makes it all possible.

Behind the alarmist language about AI “wrecking” the grid is a simple equation: unprecedented computing demand piled onto infrastructure that was never designed for it. I see a widening gap between how quickly companies like Nvidia can deploy new chips and how slowly utilities can add new generation, transmission, and substations. That mismatch is why the warnings from Nvidia’s own leadership matter, and why your next power bill is increasingly part of the AI story.

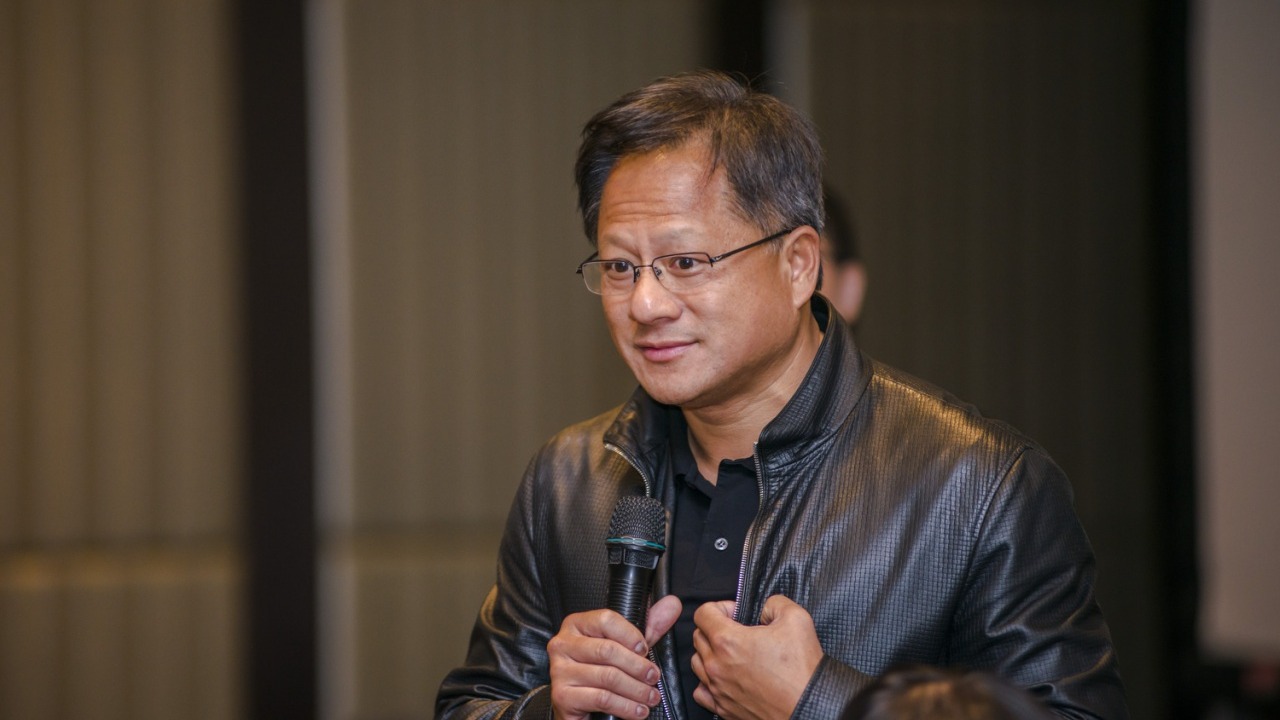

Why Nvidia’s CEO is suddenly talking like a grid operator

When the Nvidia CEO sounds more like a utility planner than a chip designer, it is because the company’s growth now depends on cheap, reliable electricity. In recent remarks highlighted in a January report, the Nvidia CEO warned that power grids cannot handle AI’s current trajectory. He argued that the abundant, low-cost electricity that underpinned the internet era is now “crumbling.” The message is blunt: if the grid does not scale, the AI boom will hit a hard ceiling, and the strain will show up first in higher prices and more frequent constraints on new projects.

That warning is not abstract. The same power-focused coverage ties the surge in AI data centers directly to rising electricity costs, noting that facilities packed with Nvidia hardware are getting harder to power within existing grid limits. I read that as a rare moment of candor from a company that usually celebrates demand: the CEO is effectively admitting that the physical world, not chip supply, is now the binding constraint on AI growth.

AI’s power appetite and your rising bill

The impact of that constraint is already visible in consumer bills. A viral clip citing The New York Times notes that Americans are paying electricity bills 30% higher than they were five years ago, with AI singled out as a major driver. The logic is straightforward: every time a user spins up a large language model or image generator, they tap into data centers that draw enormous amounts of power, and utilities recover the cost of serving those loads from their entire customer base.

Another explainer on how AI affects household costs points out that tools like ChatGPT depend on massive server farms that must run around the clock, even when demand is spiky and unpredictable. I see a feedback loop forming. As more services embed AI by default, from search engines to productivity apps, the baseline energy use of the digital economy rises, and utilities respond with new infrastructure investments that regulators often allow them to recover through higher rates. The result is that even customers who rarely use AI end up subsidizing those who do.

The largest infrastructure buildout in history, starting with energy

Nvidia’s leadership is not just warning about the grid; it is also framing AI as the catalyst for a historic construction boom. In a recent interview, the company’s chief described the global economy as a $100 trillion ecosystem of industries and suggested that “something like $20-somewhat trillion” of that could be reshaped by AI infrastructure. That figure is not limited to chips and servers. It includes the power plants, transmission lines, substations, and cooling systems that must be built or upgraded to keep pace with AI demand.

In a separate appearance at the World Economic Forum in Davos, Jensen Huang (misidentified in that coverage as “Jensen Wong”), the CEO of the world’s most valuable company, told the audience that the AI buildout still needs trillions of dollars in additional investment. I read that as both a boast and a warning. For Nvidia, those trillions represent future revenue. For utilities and regulators, they represent a daunting capital plan that will have to be financed through a mix of corporate spending, public investment, and, inevitably, higher charges for end users.

Energy as AI’s “first layer” and the nuclear temptation

Inside the industry, there is growing recognition that energy is not a side issue but the base of the AI stack. In one detailed account, the Nvidia CEO is quoted saying that energy is the “first layer” of the AI buildout, the physical backbone that must be in place before any model can train or run inference. As artificial intelligence accelerates, attention is shifting from software breakthroughs to the concrete realities of power plants, grid connections, and even on-site generation at data centers.

That shift is already changing how tech companies think about their own infrastructure. A profile headlined “Jensen Huang Reveals Biggest Problem, And It Is Not Chips” describes how the Nvidia chief now sees power, not semiconductors, as the main bottleneck, and notes that Joe Rogan agrees the smartest way to solve it is to back data centers with their own nuclear reactors. I see that nuclear temptation as a sign of how far the conversation has moved: when the world’s leading AI chip supplier is openly musing about private reactors, it underscores just how strained the existing grid has become.

Can AI help fix the very grid it is straining?

There is a paradox at the heart of Nvidia’s message. On one hand, the company’s leader warns that AI could break the grid. On the other, he argues that the same technology can make the system cleaner and more efficient. In a detailed Dive Brief, the Nvidia CEO is quoted saying that, despite growing concern about electricity demand, AI will help, not hinder, grid decarbonization. The fact of the matter, he argues, is that AI can optimize how power plants run, how batteries charge and discharge, and how flexible loads respond to price signals.

The same analysis describes artificial intelligence as a tool that will make computing and data centers more efficient, potentially offsetting some of the raw increase in electricity use. I see a narrow path here. If AI is deployed aggressively inside the grid, from forecasting solar output to managing electric vehicle charging, it could free up capacity and reduce the need for fossil fuel plants. But that outcome is not guaranteed, and it will not erase the basic reality that more AI means more electrons flowing through wires that are already under stress.

More from Morning Overview