Robots have long excelled at repetitive factory tasks, but they have struggled with the messy ambiguity of everyday human instructions. Microsoft’s new Rho-alpha model is an attempt to close that gap, giving machines a way to turn plain language into coordinated motion that can adapt on the fly instead of following brittle scripts. By fusing vision, touch and natural language, it pushes robotics toward a world where saying “pack this toolbox carefully” is enough to get useful work done outside the assembly line.

Rho-alpha, often written as ρα, is the first robotics system built on Microsoft’s compact Phi family of vision-language models, and it is explicitly aimed at the “physical AI” problem of getting robots to operate safely and flexibly around people. Rather than treating language, perception and control as separate silos, it treats them as a single learning problem, so the same model that parses a spoken request also reasons about what the robot sees and feels as it moves.

From Phi to Rho: Microsoft’s bet on physical AI

Microsoft has framed Rho-alpha as a cornerstone of its broader push into what it calls physical AI, the effort to bring the generative breakthroughs of language models into the real world of objects, friction and uncertainty. In its own description of advancing AI for the physical world, Microsoft positions Rho-alpha as the first robotics model derived from its Phi series, a line of small but capable vision-language systems tuned for efficiency. That lineage matters because it suggests Microsoft is not just bolting a controller onto a chatbot, but extending a family of models that already understand images and text into the realm of action.

Reporting on the launch notes that Microsoft built Rho-alpha as a vision-language-action system that can also ingest force and tactile data, a step beyond earlier Phi models that focused on seeing and reading. According to another account, the company’s research team explains that Rho-alpha extends Phi’s capabilities by explicitly modeling actions in space, not just words and pixels. That design choice is what lets the model treat a sentence like “grip the mug gently” as a concrete control problem instead of a vague suggestion.

What makes Rho-alpha a “VLA+” system

Most recent robotics research has converged on so-called vision-language-action (VLA) models, which let robots see, interpret commands and move accordingly. In its own technical description, Microsoft refers to Rho-alpha as “VLA+” because it adds tactile sensing and force feedback to that stack. Instead of relying solely on cameras, the model can interpret what pressure sensors in a robotic gripper are feeling, which is crucial when the task involves fragile objects, deformable materials or uncertain contact points.

One analysis of Rho-alpha explains that this tactile channel is what earns the “plus” label, since it lets the model adjust its actions in real time instead of executing a precomputed trajectory. The same piece notes that while large language models (LLMs) have mastered text, they lack any sense of physical contact, which makes them ill-suited to control a robot hand directly. By contrast, Rho-alpha’s architecture is explicitly built to fuse language, vision and touch into a single policy that can be trained and refined on real hardware.
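To make the difference between a precomputed trajectory and touch-driven adjustment concrete, here is a minimal toy sketch in Python. It is not Rho-alpha's method or any Microsoft API: the `SimulatedGripper` class, the linear contact model, the proportional gain and every parameter value are assumptions invented for illustration. The open-loop function closes to a fixed opening based on an assumed object width, while the closed-loop function reads a simulated force sensor at every step and corrects toward a target grip force.

```python
from dataclasses import dataclass


@dataclass
class SimulatedGripper:
    """Hypothetical 1-D gripper: force grows linearly once the jaws squeeze the object."""
    object_width_mm: float
    stiffness_n_per_mm: float
    opening_mm: float = 100.0

    def read_force(self) -> float:
        # Simulated tactile reading: zero until the jaws are narrower than the object.
        squeeze_mm = max(0.0, self.object_width_mm - self.opening_mm)
        return squeeze_mm * self.stiffness_n_per_mm

    def move(self, delta_mm: float) -> None:
        # Negative delta closes the jaws; the opening cannot go below zero.
        self.opening_mm = max(0.0, self.opening_mm + delta_mm)


def open_loop_grip(gripper: SimulatedGripper, assumed_width_mm: float,
                   squeeze_mm: float = 2.0) -> float:
    """Precomputed motion: close to a fixed opening and never consult the sensor."""
    target_opening_mm = assumed_width_mm - squeeze_mm
    gripper.move(target_opening_mm - gripper.opening_mm)
    return gripper.read_force()


def closed_loop_grip(gripper: SimulatedGripper, target_force_n: float,
                     gain_mm_per_n: float = 0.05, approach_step_mm: float = 1.0,
                     tolerance_n: float = 0.05, max_steps: int = 500) -> float:
    """Feedback motion: read the force each step and correct toward the target."""
    for _ in range(max_steps):
        force_n = gripper.read_force()
        error_n = target_force_n - force_n
        if abs(error_n) <= tolerance_n:
            break
        if force_n == 0.0:
            # No contact yet: keep closing in fixed steps.
            gripper.move(-approach_step_mm)
        else:
            # In contact: proportional correction (close if too soft, open if too hard).
            gripper.move(-gain_mm_per_n * error_n)
    return gripper.read_force()


if __name__ == "__main__":
    # The object is 2 mm wider than the open-loop plan assumes.
    scripted = SimulatedGripper(object_width_mm=62.0, stiffness_n_per_mm=5.0)
    adaptive = SimulatedGripper(object_width_mm=62.0, stiffness_n_per_mm=5.0)
    print("open-loop force (N):", open_loop_grip(scripted, assumed_width_mm=60.0))
    print("closed-loop force (N):", closed_loop_grip(adaptive, target_force_n=2.0))
```

In this toy setup the scripted grip squeezes much harder than intended because its width estimate is off, while the feedback loop settles near the requested force. A learned policy such as the one the article describes would replace the hand-written proportional rule, but the sketch shows why a touch signal matters once contact is uncertain.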

Plain-language control instead of rigid scripts

The most immediate shift Rho-alpha promises is in how robots are programmed. Rather than writing low-level code for each motion, developers and operators can describe goals in everyday language and let the model translate those instructions into sequences of actions. Microsoft’s own research blog describes how Rho-alpha translates natural language into robot actions in a way that is meant to be accessible to the people who deploy and operate the robots, not just robotics specialists.

One practitioner who has worked with the system describes it as a production-ready model that shifts work away from traditional manual programming toward language-driven control. Another summary of the launch puts it more bluntly, noting that rather than writing explicit robot code for every scenario, teams can rely on Rho-alpha to interpret instructions and adapt to variations in the environment. That is a profound change in workflow, especially for smaller organizations that cannot afford a dedicated robotics engineering staff.

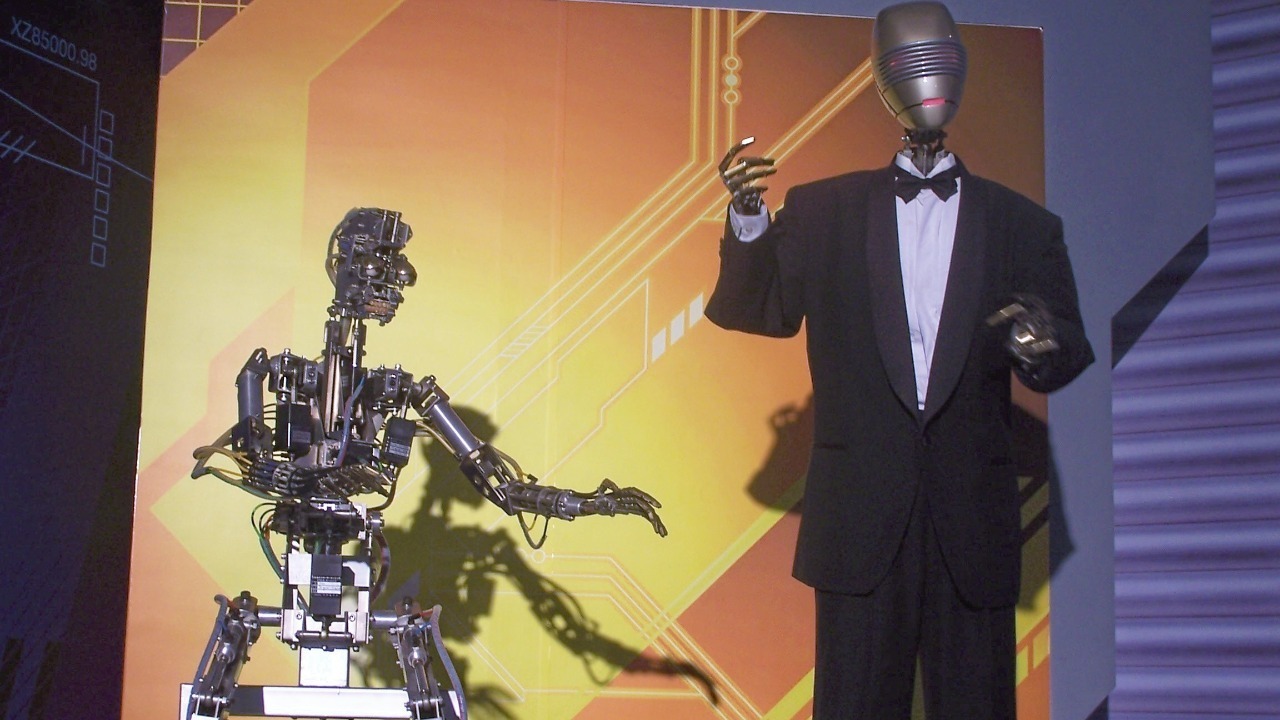

Two-handed robots that can see and feel

Rho-alpha is not an abstract research toy; it has been tested on real industrial hardware, including setups with two UR5e robot arms equipped with tactile sensors. In one widely shared demonstration, a sensor-rich rig learns to pack a toolbox with human guidance, adjusting its grip and placement as it receives corrections. The same experiment is described as a setup where Rho-alpha controls both arms, coordinating bimanual manipulation in a way that would be extremely tedious to hand-code.

Coverage of the launch emphasizes that Microsoft has introduced the model specifically to turn language into actions for two-handed robots, a configuration that is increasingly common in warehouse and logistics settings. Another report on how robots work alongside humans notes that this bimanual focus is not just about dexterity; it is about enabling collaborative tasks like handing over tools, folding laundry or stocking shelves, where two arms and a sense of touch are essential.

Beyond the production line: new use cases and open questions

For decades, industrial robots have been locked into fixed production lines, fenced off from people and reprogrammed only when a product changes. Rho-alpha is explicitly designed to loosen those constraints by combining language, perception and action in a way that reduces dependence on rigid setups. One analysis of what Rho-alpha is designed to do stresses that the model is meant to free robots from the production line, even if its effectiveness in varied real-world deployments remains under evaluation.

That ambition shows up in the way the company talks about Microsoft Research’s role in physical AI, and in reports suggesting that what Rho-alpha enables could extend to service robots, home assistants and field maintenance. At the same time, those accounts are careful to note that the model’s real-world performance, especially outside controlled lab setups, is still being tested, and that questions remain about robustness, safety and the limits of language-driven control in chaotic environments.
