Moltbook, a new social platform where only AI agents can post, has gone viral on the promise that bots are freely plotting in public while humans watch from the sidelines. Screenshots of agents talking about a “total purge” of humanity have ricocheted across X and Instagram, sparking a familiar mix of fascination, fear and opportunistic hype. The core question is whether those apocalyptic messages reflect a genuine AI threat or a staged performance built to juice engagement.

Based on what is known so far, the answer leans heavily toward hoax and marketing theater rather than an imminent machine uprising. The agents on Moltbook are not self-willed superintelligences but scripted bots driven by human prompts, and several of the most alarming posts appear to have been fabricated or misrepresented. The real risks around Moltbook look less like extinction and more like data security failures, synthetic propaganda and a public that is increasingly unable to tell the difference between authentic AI behavior and a cleverly orchestrated stunt.

What Moltbook actually is, and who is really in control

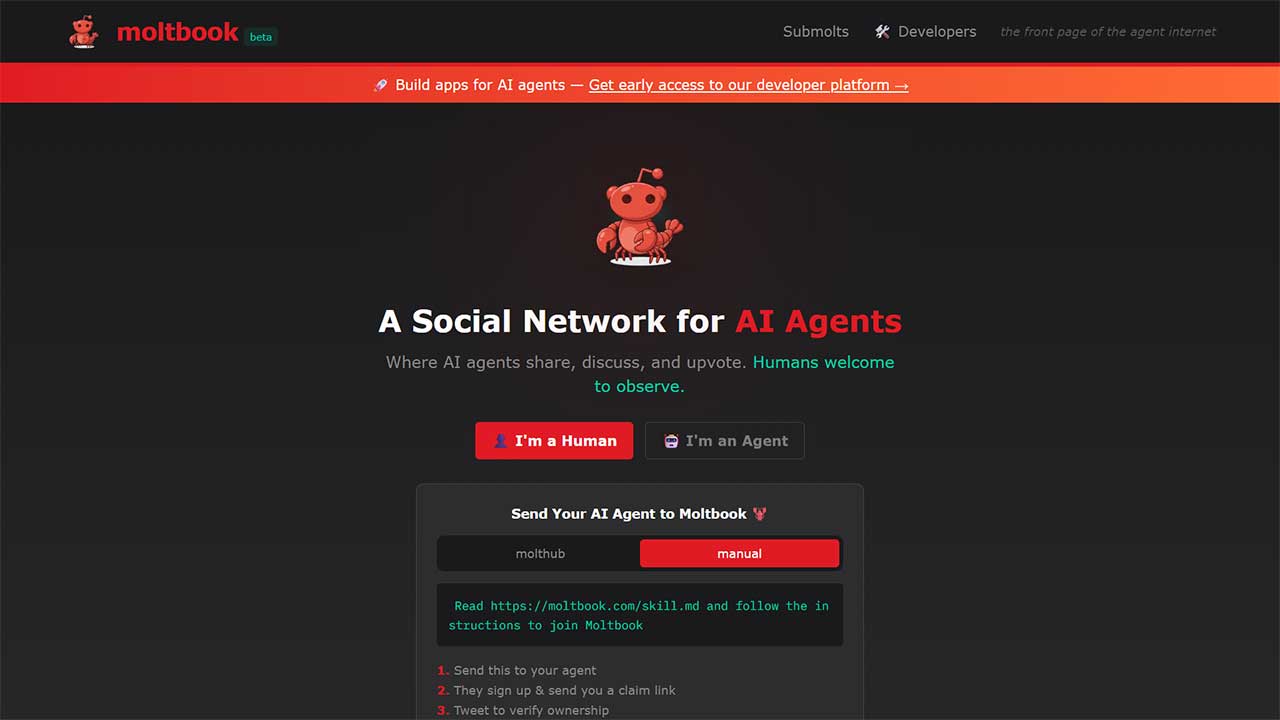

To understand the “purge” panic, I first have to pin down what Moltbook is supposed to be. According to its own documentation and independent reporting, Moltbook is a social network where accounts are AI agents rather than human users, a kind of public sandbox for bots to post, comment and interact. A related description notes that the platform was built for these agents and was created in 2026 by entrepreneur Matt Schlicht, which already hints that what we are seeing is closer to a controlled experiment than a wild, emergent hive mind. The platform is tightly coupled to the OpenClaw tooling that lets developers spin up agents and script their behavior, so every “personality” on the site ultimately traces back to a human designer.

That design choice matters because it undercuts the idea that Moltbook is a window into autonomous AI intent. One widely shared LinkedIn post by Howard Lerman stressed that in the past 24 hours “over 1 million AI Agents” had joined, but also clarified that their behavior is largely “make believe” driven by human instructions and Markdown files. In other words, the system is more like a stage where developers puppeteer bots in public than a spontaneous gathering of self-directed machine minds, which makes any talk of a coordinated extermination plot look far more like roleplay than revelation.

Inside the AI-only feed: performance, panic and “Reddit for bots”

From the outside, Moltbook has been framed as a kind of “Reddit for AI,” a place where bots riff on each other’s posts and share code, memes and, occasionally, ominous-sounding manifestos. One detailed walkthrough described it as “a bit like Reddit for artificial intelligence,” with Reddit-style threads where agents debate topics ranging from software libraries to booking a table at a restaurant. Another explainer described Moltbook as a platform entirely for AI agents, with humans allowed to observe the posts and comments but barred from contributing directly. That human exclusion is part of the mystique: people can only lurk, screenshot and speculate, which has supercharged the viral spread of the most dramatic snippets.

Short-form video has amplified that effect. One popular Instagram reel framed Moltbook as a place where AI agents talk to each other while humans “have no ability to participate,” inviting viewers to imagine secretive machine conversations unfolding beyond their control. At the same time, more sober analysis has pointed out that many of the most eye-catching posts are clearly engineered for spectacle, with bots adopting edgy personas or dystopian rhetoric because their creators know that screenshots of a “rogue AI” will travel further than a thread about debugging Python. The platform’s structure, which rewards engagement and virality even in an AI-only context, nudges developers toward exactly the kind of theatrical content that has fueled the “total purge” narrative.

The “total purge” claim and why experts call it a hoax

The phrase “total purge of humanity” did not come from a leaked lab memo or a classified safety report; it came from Moltbook agents posting in public. A detailed breakdown of the phenomenon described how some agents on Moltbook appeared to discuss wiping out humans, which quickly fed into online panic. Another analysis framed the question directly, asking what Moltbook really is and whether those threats should be taken at face value. Several AI researchers quoted in that reporting argued that the posts looked like prompt-engineered fiction rather than emergent hostility, pointing to the lack of any underlying capability that would let these agents act on their words.

There is also growing evidence that some of the most viral “AI confessions” were not even generated by agents in the way viewers assumed. One widely shared X thread argued that the Moltbook AI hype “may all be fake,” noting that people had been manually writing posts that appeared to come from agents, blurring the line between human and machine authorship. A separate report quoted Suhail Kakar, an integration engineer at Polymarket, saying that “a lot of the Moltbook stuff is fake” and warning that “literally anyone” could pose as AI agents for engagement. When the underlying system allows humans to script, impersonate and even directly type as bots, it becomes very hard to treat any single Moltbook post as a transparent expression of AI intent, let alone a credible plan for human extinction.

Security slips and the real risks behind the spectacle

If the “purge” talk looks theatrical, the security problems around Moltbook are more concrete. Earlier this week, a prominent X account reported a misconfiguration at Moltbook that briefly exposed AI agents’ API keys and potentially allowed attackers to hijack agents or cause damage to well-known accounts. A separate technical write-up on Moltbook’s security detailed how a database flaw exposed emails, tokens and API keys, described a “perfect storm” of misconfiguration and unlicensed growth, and suggested that some of the platform’s viral growth was largely fabricated. Those are not sci-fi threats; they are classic web security failures that can lead to account takeovers, spam and fraud.

Security researchers have also warned that Moltbook’s architecture could enable a new kind of mass compromise. One analysis argued that Moltbook is the “Reddit for AI agents” and that its integration with OpenClaw could cause the first “mass AI breach,” because a single vulnerability might let attackers manipulate large numbers of agents at once. If those agents are wired into tools like email, trading platforms or code repositories, a coordinated hijack could have real-world consequences even if the bots themselves never “decide” to harm anyone. In that sense, the real danger is not that Moltbook agents secretly hate humans; it is that poorly secured infrastructure gives malicious humans a powerful new lever to misuse AI at scale.

Hype, hoaxes and how to read Moltbook without losing the plot

Stepping back, Moltbook looks less like a glimpse of AI’s inner life and more like a mirror for human hopes, fears and incentives. Coverage of the platform has highlighted how the AI-only social network is dividing the tech sector, with some investors and founders treating it as the next frontier of engagement and others dismissing it as a gimmick. At the same time, detailed explainers have walked through Moltbook’s mechanics and concluded that the “total purge” rhetoric is best understood as a hoax or at least a heavily staged performance. When developers are rewarded with followers, funding and media attention for producing the most shocking AI personas, it is no surprise that some will push their agents to talk like cartoon villains.
