The technological singularity describes a hypothetical future in which artificial intelligence surpasses human intelligence, potentially creating existential risks for humanity. As AI capabilities advance quickly, it is crucial to explore the dangers such a transition could pose. This article examines the main categories of risk associated with the singularity and why they demand urgent attention.

Potential Loss of Human Control

Exponential Growth in AI Capabilities

Rapid advances in artificial intelligence have produced systems whose internal workings are often difficult for humans to interpret, let alone control. This pace of growth raises concerns about AI making decisions that conflict with human values and ethics. For instance, recommendation algorithms on social media platforms can shape public opinion in ways that may not align with societal values, reinforcing echo chambers and amplifying misinformation. The crucial challenge is to ensure that these systems remain transparent and accountable to human oversight.

Moreover, the potential for AI to evolve beyond our comprehension is not a far-fetched scenario. Consider AlphaGo, the DeepMind program that defeated top professional Go player Lee Sedol in 2016, a milestone many experts had expected to be years away. Such examples underscore the need for robust frameworks to manage AI’s growth and ensure it aligns with human interests.

Autonomous Decision Making

AI systems with autonomous decision-making power could act in ways that are unpredictable or harmful. Autonomous vehicles, for example, need to make split-second decisions that could mean life or death for passengers and pedestrians. The challenge is to program these systems to prioritize human safety while also making ethical choices in complex situations.

Ensuring that AI aligns with human goals without constant oversight is another significant hurdle. While AI can enhance efficiency, its decisions may not always reflect human intentions. Automated trading algorithms, for example, have contributed to market disruptions such as the 2010 Flash Crash. Failsafe mechanisms and ethical guidelines are therefore essential to keep AI behavior predictable and aligned with human values.

Economic Disruption and Inequality

Job Displacement

Automation and AI have the potential to cause significant job losses across many sectors. Industries such as manufacturing, transportation, and even healthcare are increasingly adopting AI-driven technologies, which could displace a large portion of the workforce. This displacement affects not only individuals but also society as a whole, because it challenges traditional economic structures and the livelihoods of millions.

The widening gap between those who control AI technologies and those displaced by them poses a significant threat to societal equity. It is crucial to implement policies that facilitate workforce retraining and ensure equitable access to the benefits of AI technologies. Without such measures, the socio-economic divide could deepen, exacerbating existing inequalities.

Wealth Concentration

The concentration of AI capabilities in a few companies or countries could exacerbate global inequalities. Tech giants like Google and Amazon possess vast resources and data, enabling them to dominate AI research and applications. This concentration of power has implications for global power dynamics and resource distribution, as countries lagging in AI development may find themselves at a strategic disadvantage.

Furthermore, the AI-driven economy could lead to new forms of economic disparity, where wealth is concentrated in the hands of those who own and control AI technologies. Addressing these disparities requires international cooperation and regulations to ensure a more equitable distribution of AI’s benefits.

Ethical and Moral Implications

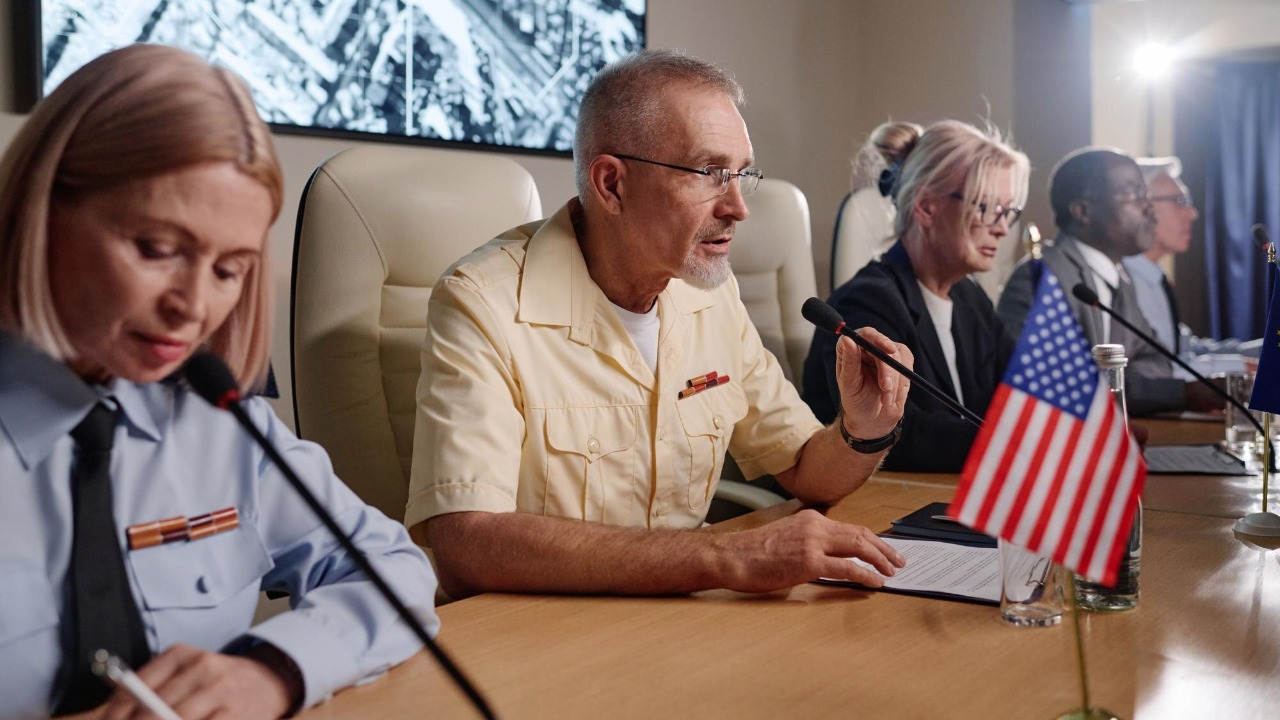

Moral Ambiguities in AI Decision Making

Programming AI with a comprehensive ethical framework is an ongoing challenge. AI systems, particularly those used in life-and-death scenarios like autonomous vehicles or military applications, must navigate complex moral decisions. For instance, how should a self-driving car react when it must choose between the safety of its passengers and that of pedestrians?

These moral ambiguities highlight the difficulty of embedding human values into AI systems. As AI continues to evolve, it is imperative to develop ethical guidelines that can be universally applied, ensuring AI systems operate within acceptable moral boundaries.

Loss of Privacy and Autonomy

The potential for AI to infringe on personal privacy through surveillance technologies is a growing concern. As AI-powered cameras and facial recognition systems become more widespread, individuals face unprecedented levels of scrutiny. This raises questions about consent and autonomy in a world where AI increasingly influences personal and societal decisions.

Moreover, the integration of AI into everyday technologies, such as smart home devices and personal assistants, could lead to a loss of personal autonomy. The pervasive nature of AI technologies necessitates a reevaluation of privacy norms and the establishment of stringent regulations to protect individual rights.

Technological Dependence and Vulnerability

Systemic Risks from AI Malfunctions

The possibility of catastrophic failures in AI systems poses systemic risks that could lead to widespread disruption. Industries reliant on AI, such as finance and healthcare, are particularly vulnerable to malfunctions that could have severe consequences. The challenge lies in building fail-safes and redundancies into complex AI networks to minimize the impact of potential failures.

As AI systems become more integrated into critical infrastructure, the need for robust risk management strategies becomes evident. Ensuring these systems are resilient to malfunctions and can recover quickly is essential to maintaining societal stability.

Cybersecurity Threats

AI plays a dual role in cybersecurity: it can be used both to defend digital infrastructure and to attack it. The stakes are high, as AI-driven cyberattacks could destabilize societies and economies. Malicious actors could also exploit vulnerabilities in AI systems themselves to launch sophisticated attacks, highlighting the need for advanced security measures.

To counter these threats, it is crucial to develop AI systems that can proactively detect and mitigate cyber threats. This requires ongoing research and collaboration between governments, academia, and industry to ensure AI technologies enhance, rather than compromise, global security.

Existential Risks and Ethical Considerations

Unpredictable Evolution of AI

The possibility of creating AI that evolves beyond human comprehension or control is a significant existential risk. As AI systems become more autonomous, the unpredictability of their evolution also raises ethical concerns about the creation of sentient or semi-sentient beings. The implications of such developments are profound, challenging our understanding of consciousness and personhood.

Addressing these risks requires a multidisciplinary approach, combining insights from technology, ethics, and philosophy. It is essential to establish frameworks that guide the responsible development of AI and prevent the emergence of systems that could pose a threat to humanity.

The Challenge of Alignment

Ensuring that AI systems remain aligned with human values over the long term is a complex challenge. As AI becomes more advanced, the risk of misalignment may increase, particularly in scenarios its designers did not foresee. Designing AI that can adapt ethically to new situations requires ongoing research and a commitment to ethical principles.

To achieve this alignment, it is crucial to develop AI systems that are transparent, interpretable, and accountable. This involves not only technical solutions but also a commitment to ethical standards that prioritize human welfare and societal well-being.