Nvidia is not waiting for the current AI boom to cool before moving on to its next act. As investors and rivals parse the limits of today’s Blackwell generation, the company is already telling customers that its Rubin era is not just on the roadmap but physically emerging from factories. The message is blunt: the next wave of chips is not a distant promise, it is already rolling off production lines and being wired into full systems.

That aggressive cadence is reshaping expectations for how quickly AI infrastructure will evolve, from hyperscale data centers to autonomous vehicles. It also raises a sharper question for the rest of the industry: if Nvidia can keep overlapping generations like this, everyone else is going to be chasing a moving target rather than a stable product cycle.

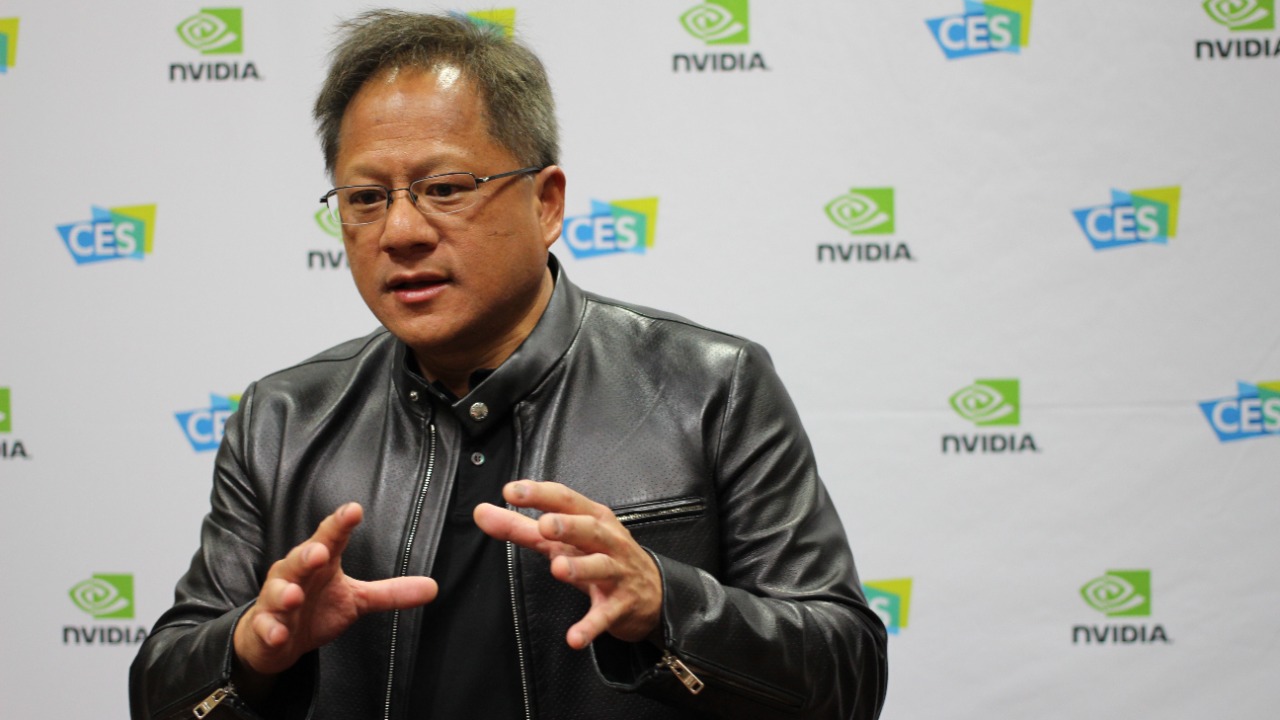

Huang signals Rubin is already in “full production”

Nvidia CEO Jensen Huang has started the year by telling customers and investors that the company’s next generation of chips is not theoretical; it is in what he called “full production.” In a recent appearance, Huang emphasized that this new wave covers both Nvidia’s AI accelerators and a fresh line of central processors, positioning Rubin as a broad data center platform rather than a single chip family. By framing Rubin this way, he is signaling that Nvidia intends to control not just the GPU socket but the full compute stack that trains and serves modern AI models.

That “full production” language matters because it suggests Nvidia has moved past engineering samples and limited pilot runs into volume manufacturing, even as many customers are still ramping Blackwell deployments. In practice, that means cloud providers and large AI labs can begin planning capacity on the assumption that Rubin hardware will be available in meaningful quantities rather than as a constrained early-access product. It also underlines how Nvidia is trying to smooth the transition between generations so that customers do not face long gaps or painful migrations between architectures.

Vera Rubin NVL72: a supercomputer built around the new chips

Nvidia is not just shipping chips; it is packaging Rubin into turnkey systems that look more like industrial equipment than traditional servers. At CES, the company introduced the Vera Rubin NVL72 AI supercomputer, a dense rack-scale system that Nvidia says can train mixture-of-experts models with only a quarter of the GPUs required with Blackwell, while also delivering up to five times greater inference performance and as much as ten times lower cost per token than the Blackwell generation. By tying Rubin to a named supercomputer line, Nvidia is making clear that it wants to sell complete AI “factories,” not just components.

Those performance and cost claims are aimed squarely at the economics of large language models, where the number of tokens processed per dollar has become a key metric for both startups and incumbents. If a Rubin-based NVL72 can truly cut GPU counts by three quarters for certain workloads, that changes how companies think about data center footprints, power budgets, and even which regions can host advanced AI clusters. It also gives Nvidia a narrative that Rubin is not just faster, it is structurally cheaper to operate at scale, which is exactly the pitch hyperscalers want to hear as they confront soaring AI energy bills.
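To make those ratios concrete, here is a minimal sketch of the arithmetic. The baseline cluster size and token price are hypothetical placeholders, not figures from Nvidia or from any reporting, and the function name is invented for illustration. The two ratios (one quarter of the GPUs, up to ten times lower cost per token) are the company's stated best-case claims rather than independently measured results.

```python
# Illustrative arithmetic only: the baseline figures passed in are hypothetical,
# and the ratios are Nvidia's stated best-case claims, not measured results.

def apply_vendor_claims(baseline_gpus, baseline_cost_per_million_tokens,
                        gpu_fraction=0.25, cost_divisor=10.0):
    """Scale a hypothetical Blackwell baseline by the claimed Rubin ratios."""
    return {
        # "A quarter of the GPUs" for mixture-of-experts training.
        "gpus_needed": baseline_gpus * gpu_fraction,
        # The same claim expressed as a percentage reduction.
        "gpu_reduction_pct": (1.0 - gpu_fraction) * 100.0,
        # "As much as ten times lower cost per token."
        "cost_per_million_tokens": baseline_cost_per_million_tokens / cost_divisor,
    }

# Hypothetical baseline: a 1,000-GPU cluster and $2.00 per million tokens.
estimate = apply_vendor_claims(baseline_gpus=1000,
                               baseline_cost_per_million_tokens=2.00)
print(estimate)
```

Because both ratios are upper bounds that Nvidia ties to specific workloads, a real deployment would likely land somewhere between the baseline and these figures.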

Rubin AI lineup: three years in the making and ahead of schedule

Behind the marketing names sits a Rubin AI lineup that Nvidia has been quietly building for years. Reporting on the program notes that the Rubin AI lineup is in full production and has been in the works for three years, underscoring that this is not a rushed response to the latest AI hype cycle but a long-planned successor to Blackwell. The Rubin AI platform is described as a family of parts that will span accelerators, networking, and supporting silicon, giving Nvidia more levers to tune performance and cost for different customers.

That long gestation period helps explain how Nvidia can claim Rubin is entering full production “well ahead of schedule” relative to earlier public roadmaps. It suggests the company has been running overlapping design and manufacturing ramps, with Rubin development starting while Blackwell was still being finalized. For customers, that overlapping cadence reduces the risk that a bet on Nvidia’s current generation will leave them stranded when the next one arrives, since the company can offer a clearer path to mix and match Rubin systems alongside existing deployments.

Memory moves from afterthought to centerpiece

One of the most striking shifts with Rubin is how Nvidia talks about memory. In earlier GPU generations, memory capacity and bandwidth often felt like constraints bolted onto the side of compute, but with Rubin the company is explicitly treating memory as a first-class design axis. Coverage of the launch argues that the new Rubin platform shows memory is no longer an afterthought in AI, highlighting how the architecture is built around feeding massive models with enough high-speed memory to keep the cores busy. That shift reflects the reality that state-of-the-art models are increasingly bottlenecked by data movement rather than raw floating-point throughput.

By foregrounding memory, Nvidia is also responding to a competitive landscape where alternative architectures, from custom ASICs to novel interconnects, have tried to differentiate on how efficiently they move and store data. Rubin’s design, which pairs high-bandwidth memory with advanced networking and system-level integration, is meant to reassure customers that they will not have to choose between capacity and speed as models grow. It also hints at a future where AI hardware is judged as much on how it handles terabytes of parameters and context windows as on its headline teraFLOP numbers.

“A New Engine for Intelligence” and the Vera Rubin namesake

Nvidia is wrapping Rubin in a story that is as much about branding as it is about silicon. In its own framing, the company describes Rubin as “A New Engine for Intelligence” and introduces the platform by way of pioneering American astronomer Vera Rubin, presenting it as both a technical milestone and a tribute to a scientist who helped reveal the existence of dark matter. By naming the platform after Vera Rubin, Nvidia is aligning its AI roadmap with a broader narrative about exploring unseen structures, in this case the hidden patterns in data rather than galaxies.

That storytelling is not just cosmetic. It helps Nvidia position Rubin as a foundational layer for everything from generative models to autonomous systems, much as Vera Rubin’s work underpins modern cosmology. The company’s description of the platform emphasizes not only compute and memory but also Ethernet photonics scale-out networking, signaling that Rubin is meant to be the connective tissue of vast AI clusters rather than a standalone chip. For customers deciding where to place multi-billion-dollar bets on infrastructure, that kind of cohesive narrative can be as persuasive as any benchmark chart.

Investors press for details on the Rubin ramp

While Nvidia courts developers with performance claims, investors are focused on how quickly Rubin can translate into revenue. In a recent investor update, the company described Vera Rubin as being in full production and fielded questions about timing and ramp dynamics from Bank of America analyst Vivek Arya, who pressed Nvidia on how the new platform would affect utilization and downtime in large AI factories. That exchange underscores how central Rubin has become to expectations about Nvidia’s growth trajectory over the next several years.

From my perspective, the key investor question is not whether Rubin will sell, but how it will coexist with Blackwell in the product stack. If Rubin systems deliver significantly better cost per token and energy efficiency, customers may push to accelerate their transitions, which could compress the effective lifespan of Blackwell deployments. On the other hand, a carefully managed ramp, with Rubin initially targeting the most demanding workloads while Blackwell serves more mature applications, could allow Nvidia to sustain high utilization across both generations and smooth out revenue volatility.

Market reaction: stock jitters meet production confidence

Financial markets have already started to react to Nvidia’s Rubin messaging, even if the signals are mixed. Reporting from the trading floor noted that Nvidia briefly pared losses after Jensen Huang said Vera Rubin chips are in full production, with Nvidia (NVDA) trading at $188.97 and moving 1.24% as investors digested the news that the next generation is already being manufactured for hyperscalers and everyone else who wants them. The fact that the stock only briefly pared losses suggests that, for now, macro concerns and broader tech valuations are tugging against the bullish Rubin story.

Still, I read that intraday move as a sign that the market is starting to price Rubin as more than a distant roadmap item. When a CEO’s comment about “full production” can shift a mega-cap stock’s trajectory, even temporarily, it shows how tightly Nvidia’s valuation is tied to its ability to stay ahead in AI hardware. As more concrete Rubin revenue shows up in earnings, those short-term jitters are likely to give way to a clearer picture of how much incremental demand the new platform is unlocking versus simply cannibalizing Blackwell.

Rubin’s role in speeding AI and reshaping data centers

Beyond stock charts, Rubin is poised to change how AI workloads are architected inside data centers. Coverage of Nvidia’s plans notes that the company’s highly anticipated new Rubin data center products are nearing release this year and are expected to help speed AI by offering better performance and efficiency than the components they replace. That framing makes clear that Rubin is not just a spec bump; it is intended as a generational leap in how quickly and cheaply models can be trained and served.

For cloud providers, that could translate into more granular service tiers, where Rubin-backed instances are reserved for the most latency-sensitive or compute-intensive applications, such as real-time translation or multi-agent simulations. Enterprises, meanwhile, may see Rubin as the first platform that makes it economically viable to run large models in-house rather than relying entirely on external APIs. If Rubin can deliver on its promise of higher throughput per watt and per dollar, it will accelerate the trend toward AI-native data centers where traditional CPU-heavy racks give way to tightly integrated accelerator pods.

CES spotlight: Vera Rubin chips and the early arrival narrative

The timing of Rubin’s debut has also become part of Nvidia’s competitive story. At CES, live coverage highlighted that Nvidia’s Vera Rubin chips will arrive later this year, with the company positioning Vera Rubin as its next-generation AI superchip platform that is on track to reach customers sooner than many expected. That public commitment at a high-profile consumer tech show signals confidence that the supply chain and manufacturing partners are ready to support a broad rollout.

Separate reporting reinforces that narrative by noting that the early debut comes months ahead of the late 2026 timeline Nvidia had previously projected, with the company saying the platform, named after astronomer Vera Rubin, is now expected to be available in the second half of 2026. Pulling that schedule forward gives Nvidia a head start in locking in long-term supply agreements and design wins, particularly with customers that are planning multi-year AI roadmaps and want assurance that their chosen platform will not slip.

What Rubin’s fast ramp means for the AI race

Stepping back, I see Nvidia’s Rubin push as a signal that the AI hardware race is entering a new phase where generational turnover will be measured in a handful of years, not the longer cycles that once defined GPUs. By telling the world that Rubin is already in full production while Blackwell is still ramping, Nvidia is effectively compressing its own roadmap and daring competitors to keep up. That strategy could lock in customers who fear being stranded on slower platforms, but it also raises the bar for Nvidia to deliver stable software and tooling that can span overlapping generations without creating chaos for developers.

For the broader ecosystem, Rubin’s arrival will likely accelerate consolidation around a few dominant hardware and software stacks, since only the largest players can afford to match this pace of innovation. Startups building AI infrastructure will have to decide whether to align tightly with Rubin’s capabilities or differentiate in niches that Nvidia’s general-purpose approach does not fully address. Either way, the fact that Nvidia’s next wave of chips is already rolling off lines means the AI industry is about to be reshaped again, just as it was starting to digest the last revolution.
