Advanced Micro Devices (AMD) is reportedly in advanced talks with OpenAI to supply its AI chips, a move that could challenge Nvidia’s dominance in the artificial intelligence hardware market. This potential deal underscores AMD’s strategic push into AI, leveraging its Instinct MI300X accelerators to capture demand from major players like OpenAI, which has relied heavily on Nvidia’s products. The development comes amid intensifying competition in the AI chip sector, where AMD aims to erode Nvidia’s market share through performance improvements and cost advantages1,2.

AMD’s Historical Position in Semiconductors

Founded in 1969, AMD long designed and manufactured its own chips, competing with Intel in the CPU market. It became fabless only in 2009, when it spun off its manufacturing operations as GlobalFoundries. In the GPU market, however, AMD was overshadowed by Nvidia and its CUDA ecosystem1. The 2010s were particularly challenging: AMD lost significant market share because of manufacturing delays and Nvidia’s lead in AI-specific architectures. At one point, Nvidia controlled 80-90% of AI GPU shipments2.

The turnaround began in 2014, when Lisa Su became CEO and led a significant internal restructuring. Her strategy emphasized custom chip designs and partnerships with Taiwan Semiconductor Manufacturing Company (TSMC) for advanced process nodes, setting the stage for AMD’s resurgence in the semiconductor industry.

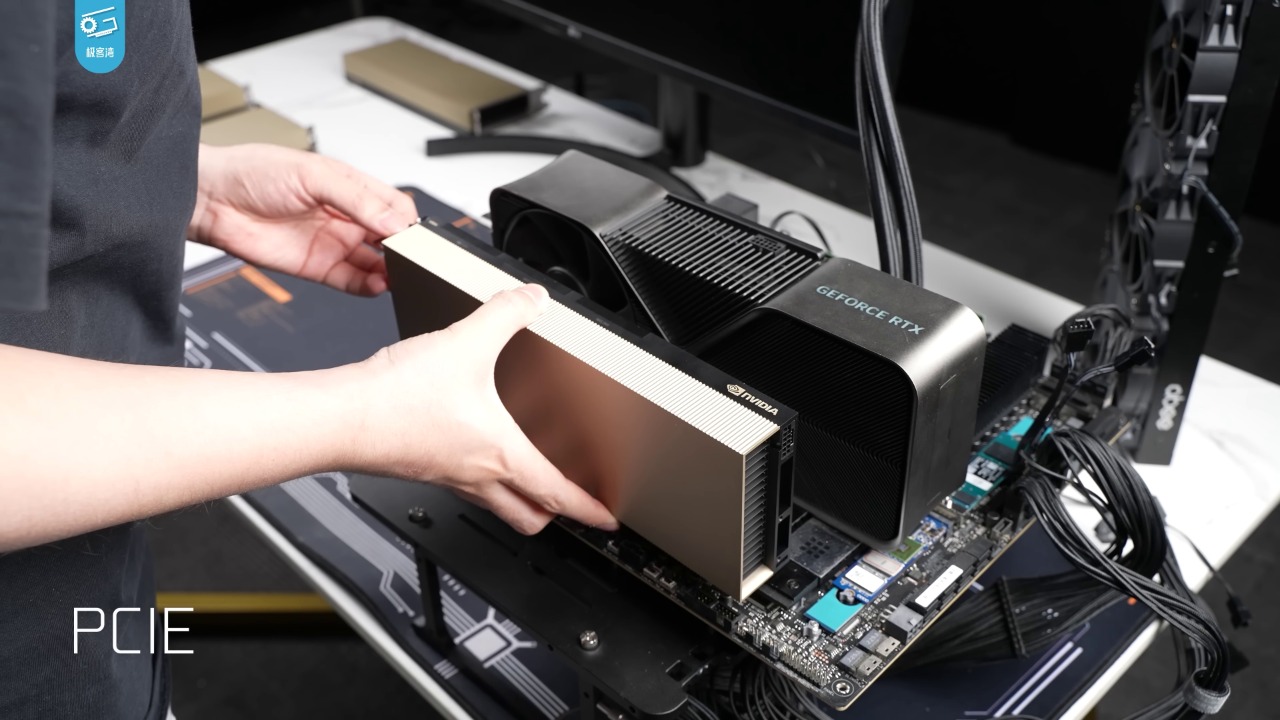

Technological Leap with Instinct Series

In 2023, AMD launched its MI300X AI accelerator, built on the CDNA 3 architecture, with performance competitive with Nvidia’s H100. The MI300X offers up to 2.4x the H100’s memory capacity (192 GB of HBM3) and roughly 1.6x its memory bandwidth1. Benchmarks show the MI300X performing efficiently in large language model training, with third-party tests indicating parity with Nvidia equivalents in tokens-per-second metrics2.

AMD has also made significant improvements to its software ecosystem. Updates to the ROCm platform have positioned it as a viable alternative to Nvidia’s CUDA, enabling broader adoption by AI developers.

Strategic Partnerships Fueling Growth

AMD’s growth in the AI chip market is also being fueled by strategic partnerships. The company is in advanced talks with OpenAI to supply MI300X chips, potentially starting with pilot deployments in 2024. A deal would reduce OpenAI’s reliance on Nvidia, which currently supplies over 80% of its compute needs1.

Existing deals also support AMD’s growth, notably Microsoft Azure’s integration of AMD Instinct accelerators for AI workloads. These partnerships are expected to help AMD reach its projected $4 billion in AI chip revenue for 2024. Collaborations with hyperscalers such as Oracle and Meta, which use AMD chips in custom AI training clusters, further show AMD’s ecosystem expanding.

Navigating Supply Chain and Manufacturing Edges

AMD relies on TSMC to manufacture its MI300 series, whose chiplets are built on TSMC’s 5nm and 6nm processes. Nvidia shares this dependence on TSMC, but AMD has achieved faster ramp-up times thanks to diversified fab allocations2. On pricing, the MI300X is offered at around $10,000-$15,000 per unit, significantly less than Nvidia’s H100 at over $30,000. This makes the MI300X attractive for cost-sensitive AI deployments1.

AMD must also navigate regulatory constraints, including U.S. export controls on AI chip sales to China, although its global footprint leaves it less exposed to these risks than Nvidia.

Market Impact and Competitive Dynamics

AMD’s share of the AI GPU market has risen from under 5% in 2022 to an estimated 10-15% in 2024, a gain attributed to the OpenAI talks and broader industry shifts2. Nvidia has responded with price cuts on its H100 and accelerated launches of its Blackwell products. AMD, in turn, has countered with its MI325X, slated for delivery in 2024.

Investors have reacted positively. Following reports of the potential deal with OpenAI, AMD’s stock rose 5%, signaling confidence in the company’s pivot toward AI.

Future Roadmap and Industry Shifts

Looking ahead, AMD is developing its MI400 series, which its public roadmap places in 2026. AMD promises up to 4x the performance of the MI300 through chiplet designs and HBM4 memory integration1. The broader AI chip wars are also heating up: a potential OpenAI commitment to thousands of AMD GPUs could put pressure on Nvidia’s $100 billion-plus in annual AI revenue2.

Regulatory and ethical considerations are also shaping the industry. Energy efficiency is one example: gains from AMD’s designs could reduce AI data center power demands by up to 30%, easing environmental concerns about AI development.