Deepfake technology has crossed a threshold in 2025, shifting from clumsy curiosities to tools that can convincingly mimic faces, voices and even personalities in real time. The result is a new class of fraud, psychological manipulation and identity abuse that is already straining legal systems, corporate defenses and basic social trust. The next wave, driven by automation and integration into everyday apps, is poised to be even more destabilizing than what we have seen so far.

What makes this moment different is not just better visuals, but the scale, speed and targeting of synthetic media. Deepfakes are now cheap to generate, hard to spot and increasingly woven into scams, political operations and even entertainment, blurring the line between creative use and weaponized deception. I see 2025 as the year deepfakes stopped being a niche security concern and became a mainstream societal risk.

From novelty clips to a full-blown cybercrime toolset

Only a few years ago, deepfakes were mostly viral oddities, swapping celebrity faces into movie scenes or meme videos. In 2025, they have become a core part of the cybercrime toolkit, used to impersonate executives, hijack customer support channels and bypass identity checks. Security analysts now describe AI-generated media as one of the most pressing digital threats, and the last few years have shown how quickly the boundary between real and fake is blurring.

The numbers behind this shift are stark. Industry trackers report that the number of deepfake files has exploded from about 500,000 in 2023 to a projected 8 million in 2025, while deepfake fraud attempts spiked by 3,000% in 2023, with 1,740 documented incidents feeding into a much larger underground economy. A separate dataset points to the same 8 million projection and warns that human detection of synthetic content is already lagging behind machine generation. The trajectory is clear: deepfakes are no longer a fringe experiment; they are industrialized.
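To put the file-count figures in perspective: growing from roughly 500,000 files in 2023 to 8 million in 2025 is a sixteenfold increase. Spread evenly across those two years, that means the count roughly quadrupled each year. The short Python sketch below reproduces the arithmetic. It assumes the two reported figures are exact and that growth compounded evenly between them, which the trackers themselves do not claim.

```python
# Implied growth in deepfake file counts, using the two figures cited in the text.
# Assumption: growth compounded evenly between the 2023 and 2025 data points.
files_2023 = 500_000
files_2025 = 8_000_000
years = 2

total_multiple = files_2025 / files_2023          # overall growth factor over two years
annual_multiple = total_multiple ** (1 / years)   # per-year factor under even compounding
annual_growth_pct = (annual_multiple - 1) * 100   # compound annual growth rate, in percent

print(f"Total multiple: {total_multiple:.0f}x")
print(f"Annual multiple: {annual_multiple:.1f}x")
print(f"Implied annual growth: {annual_growth_pct:.0f}%")
```

The 3,000% spike in fraud attempts is a separate metric. It is not derived from these file counts.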

2025’s “peak deepfake season” and the fraud boom

Several security firms now describe 2025 as a tipping point for AI-enabled scams, with synthetic voices and faces stitched into phishing, social engineering and financial fraud. One analysis bluntly calls this year “peak deepfake season,” pointing to remote work habits, constant social media posting and the spread of voice notes as the raw material for impersonation. Fraudsters no longer need to guess how you sound or look; they can scrape your Instagram Reels and Zoom recordings and let generative models do the rest.

That shift is already visible in the fraud data. One cyber risk report notes that phishing campaigns have moved from awkward emails to hyper-realistic lures, and that phishing volume in early 2025 outpaced all of 2024 by 19 percent. Another 2025 forecast warns that AI-generated deepfake attacks will escalate and will continue to target high-profile individuals, especially executives and wealthy families whose public appearances provide rich training data. The fraud boom is not hypothetical; it is already hitting inboxes and bank accounts.

Real-time deepfakes: the next phase of the threat

The most unsettling development in 2025 is the move from pre-recorded fakes to real-time manipulation. Instead of crafting a video over hours, attackers can now join a live video call with a synthetic face and voice that track their movements, letting them improvise in conversation. Researchers argue that deepfakes are clearly heading toward real-time use, which will make them easier for criminals to deploy and harder for automated detection systems to flag before damage is done.

Some of that future has already arrived. Identity verification platforms report that AI-powered scams are evolving so quickly that, in 2025, 1 in 20 ID verification failures is linked to deepfakes, with fraudsters using live face-swapping tools to trick selfie checks and liveness tests. Another technical review notes that the field is progressing so fast that synthetic material can now be produced autonomously, without any human oversight, using recycled generative adversarial networks (GANs). When deepfake generation no longer needs a skilled operator, the barrier to real-time abuse drops even further.

CEO fraud, AI-enabled heists and the new corporate risk

Corporate security teams are already confronting what used to sound like science fiction: a chief financial officer receiving a video call from a “CEO” who looks and sounds perfect, instructing them to wire funds urgently. Reports of CEO fraud, now carried out with seemingly perfect replicas, have surged over the past year, with attackers exploiting visual and vocal cues that override employee skepticism. In these scenarios, traditional training that tells staff to “trust your gut” is almost useless, because the fake executive triggers all the subconscious signals people associate with authority.

Financial crime specialists are also tracking AI-enabled heists that blend deepfakes with more familiar social engineering. One case study describes how a group used deepfake face-changing software to impersonate a company representative and divert nearly $30 million in funds, highlighting how quickly synthetic media can translate into real-world losses. Another cyber risk analysis notes that deepfakes and voice cloning are now a standard part of high-value phishing kits, with attackers using them to add credibility to emails and calls that request sensitive data or payments.

Psychological warfare and the societal impact of deepfakes

The danger is not limited to bank accounts. Deepfakes are increasingly used as tools of psychological warfare, aimed at activists, minorities and political opponents. Analysts warn that we are likely to see more large-scale AI-enabled psychological operations that use synthetic videos not only for disinformation but also for targeted manipulation through persuasive narratives. Researchers studying tailored psychological warfare have already examined this pattern in a deepfake video of Hong Kong activists. These operations do not just mislead; they aim to intimidate, demoralize and fracture communities.

Academic work is starting to quantify the broader fallout. A recent scoping review on the negative effects of synthetic media on the human mind includes a section on the societal impact of deepfakes (section 1.4), which notes that deepfakes have been recognized as a serious threat to society by various national security bodies and the European Parliament. The authors warn that repeated exposure to manipulated content can erode trust in authentic media, fuel conspiracy thinking and make it easier for bad actors to dismiss real evidence as “fake,” a phenomenon sometimes called the “liar’s dividend.” In other words, even when a deepfake is debunked, it leaves a residue of doubt that weakens shared reality.

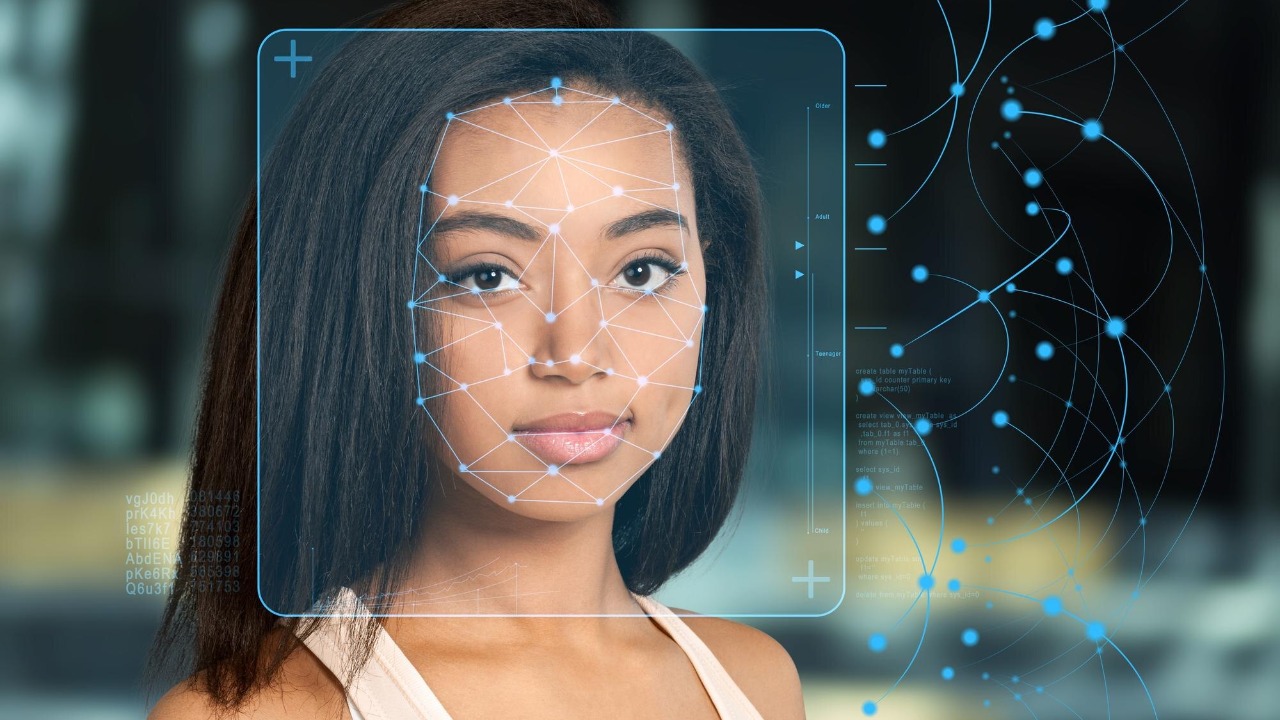

Biometrics, digital ID and the arms race to verify “real”

As deepfakes get better, the systems we use to prove who we are are coming under strain. Banks, telecoms and governments have spent years rolling out biometric logins and digital IDs that rely on face scans, voiceprints and video selfies. Now, with 1 in 20 failed ID checks tied to synthetic media and fraudsters actively probing these defenses, the industry is scrambling to harden its verification pipelines against AI-driven attacks. One identity provider notes that fraudsters are now testing real-time face swaps, replay attacks and voice cloning in a continuous cat-and-mouse game.

Policy and industry leaders are starting to accept that no single company or country can solve this alone. A recent briefing on securing biometrics and digital ID argues that, heading into 2026, there is a general consensus that more collaboration is needed. It also finds agreement that shared standards, data sharing and industry collaboration will have to gain ground if biometric systems are to withstand synthetic attacks. That means common protocols for liveness detection, watermarking of legitimate content and perhaps even legal requirements for platforms to label AI-generated media at the point of creation.

Not all deepfakes are malicious: the strange upside

Amid the alarm, it is important to recognize that the same tools used for fraud can also enable powerful, even moving, creative work. One widely discussed example is the use of AI to restore the singing voice of country star Randy Travis, who lost his ability to perform after a stroke; synthetic modeling of his past recordings allowed him to share his talent with the world once again. In entertainment, advertising and education, synthetic media is opening new possibilities for dubbing, accessibility and historical reconstruction that would have been impossible a decade ago.

Industry observers such as Ingrid Constant, who tracks deepfake trends, note a wave of new types of synthetic video that are explicitly consent-based, from virtual influencers to AI-generated training avatars. These applications highlight a core tension: the technology itself is neutral, but its impact depends on governance, consent and context. The challenge for regulators and platforms is to encourage beneficial uses while constraining the most harmful ones, without resorting to blunt bans that could stifle innovation.

How 2025’s leap sets up an even scarier next wave

Experts who study synthetic media argue that 2025 is not a plateau, but a launchpad. One detailed analysis by deepfake researcher Siwei Lyu at the University at Buffalo, published in late December, concludes that deepfakes leveled up in 2025 and that the next phase will be defined by seamless integration into everyday communication tools. Instead of needing a separate app, users (and attackers) will be able to toggle synthetic filters inside mainstream platforms like WhatsApp, Zoom or TikTok, making it harder for victims to realize when they have crossed from authentic to artificial.

Looking ahead, I expect three trends to define the “scarier” wave. First, automation will reduce the cost and skill required to generate convincing fakes, as suggested by the finding that content can now be produced without human oversight using recycled GANs. Second, personalization will intensify, with models trained on an individual’s digital footprint to craft bespoke scams or smear campaigns. Third, detection will become a probabilistic game in which no tool can offer absolute certainty. That will force societies to adapt to a world where visual and audio evidence is always a little suspect. This erosion of certainty, more than any single scam, may be the deepest cut of the deepfake era.

More from MorningOverview