As AI-powered smart speakers gain traction in homes and workplaces, concerns about their ability to record and transmit private conversations continue to grow. These concerns raise significant questions about privacy and ethics.

Overview of AI Speakers and Their Capabilities

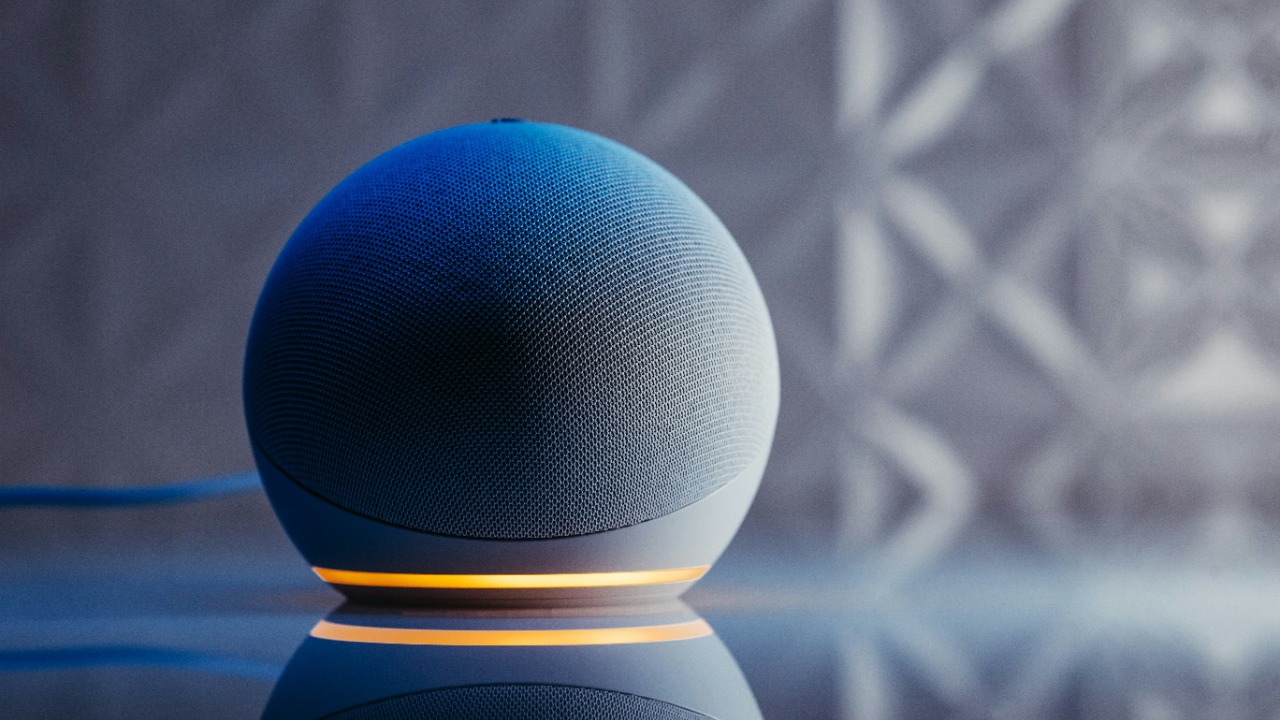

AI speakers such as Amazon’s Echo and Google Home are smart devices that use voice recognition to perform a range of tasks. These tasks range from simple functions, such as playing music and setting reminders, to more sophisticated actions, such as controlling smart home devices. What makes these speakers useful is their ability to interpret and respond to human speech, made possible by advances in artificial intelligence.

However, as these devices become smarter, they also pose risks to privacy and information security. A report in Fortune noted that these speakers are always listening, because the microphone must stay active to detect the wake word, which means they can potentially capture sensitive information discussed nearby. The volume of data these devices collect is large, raising serious concerns about how it is used and stored.

Instances of AI Speakers Recording Conversations

There are documented instances of AI speakers inadvertently recording conversations. The speakers are designed to wait for a “wake word” (such as “Alexa” or “Hey Google”) before they start recording. However, they can mistake background noise or ordinary conversation for the wake word, which triggers an unintended recording.
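To illustrate why such false triggers happen, here is a toy Python sketch. It assumes a hypothetical on-device detector that gives each short audio frame a confidence score that the wake word was spoken, and starts recording only when that score reaches a threshold. Real detectors are vendor-specific neural models that are not shown here; the frame labels, scores, and threshold values below are invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    label: str         # what was actually heard (ground truth, known only in this simulation)
    wake_score: float  # hypothetical detector confidence that the wake word occurred


WAKE_LABEL = "wake word"

# Invented example stream; scores are illustrative, not measured from any device.
EXAMPLE_STREAM = [
    Frame("television dialogue", 0.12),
    Frame("casual conversation", 0.74),  # phrase that happens to sound like the wake word
    Frame(WAKE_LABEL, 0.93),             # wake word spoken clearly
    Frame("background noise", 0.05),
    Frame(WAKE_LABEL, 0.78),             # wake word spoken quietly
]


def triggered_frames(frames: list[Frame], threshold: float) -> list[int]:
    """Indices of frames whose score reaches the threshold and would start a recording."""
    return [i for i, f in enumerate(frames) if f.wake_score >= threshold]


def false_triggers(frames: list[Frame], threshold: float) -> list[int]:
    """Recordings started by something other than the wake word (unintended recordings)."""
    return [i for i in triggered_frames(frames, threshold) if frames[i].label != WAKE_LABEL]


def missed_wake_words(frames: list[Frame], threshold: float) -> list[int]:
    """Genuine wake words that scored below the threshold and were ignored."""
    return [
        i for i, f in enumerate(frames)
        if f.label == WAKE_LABEL and f.wake_score < threshold
    ]


if __name__ == "__main__":
    for t in (0.7, 0.8):
        print(t, triggered_frames(EXAMPLE_STREAM, t),
              false_triggers(EXAMPLE_STREAM, t),
              missed_wake_words(EXAMPLE_STREAM, t))
```

In this toy model, a threshold of 0.7 records the casual conversation in frame 1 by mistake, while raising it to 0.8 removes that false trigger but also ignores the quietly spoken wake word in frame 4. This trade-off between false accepts and false rejects is one reason accidental recordings are hard to eliminate entirely.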

Such incidents can erode user trust and damage the reputation of AI speaker manufacturers. The idea that a private conversation could be recorded and stored without consent alarms many users. The fear is not unfounded: several reports have shown that recordings from these devices were reviewed by human contractors, a practice that many manufacturers have since pledged to stop or at least to make more transparent to users.

Data Handling and Privacy Concerns Related to AI Speakers

Understanding how AI speakers handle data is crucial. Companies such as Google and Amazon publish privacy policies that govern data collection and use, but these policies can be long, complex, and difficult for the average user to understand.

One major concern is that personal data could be used for targeted advertising. Although major tech companies have not explicitly confirmed this practice, it remains a potential threat given how much data these devices collect. The legal and ethical implications of recording conversations without explicit user consent are also far-reaching, and privacy advocates are calling for more stringent regulations to protect user data.

Sending Recorded Conversations Abroad: A Closer Look

There have been incidents in which conversations recorded by AI speakers were sent abroad. A study published in the journal Electronics reports that such recordings are often sent to transcription services or used for data analysis, a practice intended to improve the AI’s understanding of language and speech patterns.

However, sending these recordings abroad carries significant risks. These include data breaches and misuse of information, as well as the possibility that data protection laws in the recipient country are less stringent than those in the user’s home country.

Preventing Unauthorized Recording and Transmission by AI Speakers

Users can take several steps to keep their AI speakers from recording and transmitting conversations without their knowledge: muting the device’s microphone when it is not in use, regularly deleting stored recordings, and closely reviewing the device’s privacy settings.

Manufacturers also play a critical role in protecting user privacy and data security. They should be transparent about their data collection practices and give users clear instructions for controlling their data. Beyond what manufacturers do voluntarily, there is a pressing need for stringent regulations governing the use of AI speakers.

Role of AI Voice Scams in Data Theft

On a related note, AI technology is also being used for fraud. Scammers increasingly use AI to clone voices, and a report on FOX59 highlights how easily a voice can be cloned and used to deceive people.

The rise of AI voice scams poses a serious threat to data privacy, so it is important to stay vigilant and learn to recognize such scams. Useful measures include verifying a caller’s identity through a separate channel before sharing sensitive information and being skeptical of callers who demand immediate action or payment. Fidelity Bank has also published a guide on protecting yourself from AI voice scams.