Google is no longer treating augmented and virtual reality as side projects. It is quietly assembling a full stack of hardware, software, and cloud services that could turn mixed reality from a niche hobby into an everyday computing layer. Instead of chasing a single flashy headset, the company is spreading its bets across Android, wearables, and AI so that glasses, phones, cars, and PCs can all tap into the same immersive platform.

I see a clear strategy emerging: build Android into a mixed reality operating system, pair it with Gemini-class AI, and lean on Google Cloud to stream the heavy graphics work. The result is a roadmap where smart glasses, headsets, and even dashboards share one ecosystem, and where “live AI” becomes as ambient as Wi‑Fi.

From Google Glass to Gemini: why the company is trying again

Google has already lived through one very public failure in this space, and that history is shaping how it approaches mixed reality now. After Google Glass stumbled a decade ago, the company pulled back from consumer headsets and focused on enterprise pilots and behind‑the‑scenes software. That retreat bought time to rethink the product, the privacy model, and the core use cases, instead of simply shrinking Glass into a sleeker frame and hoping the culture had moved on.

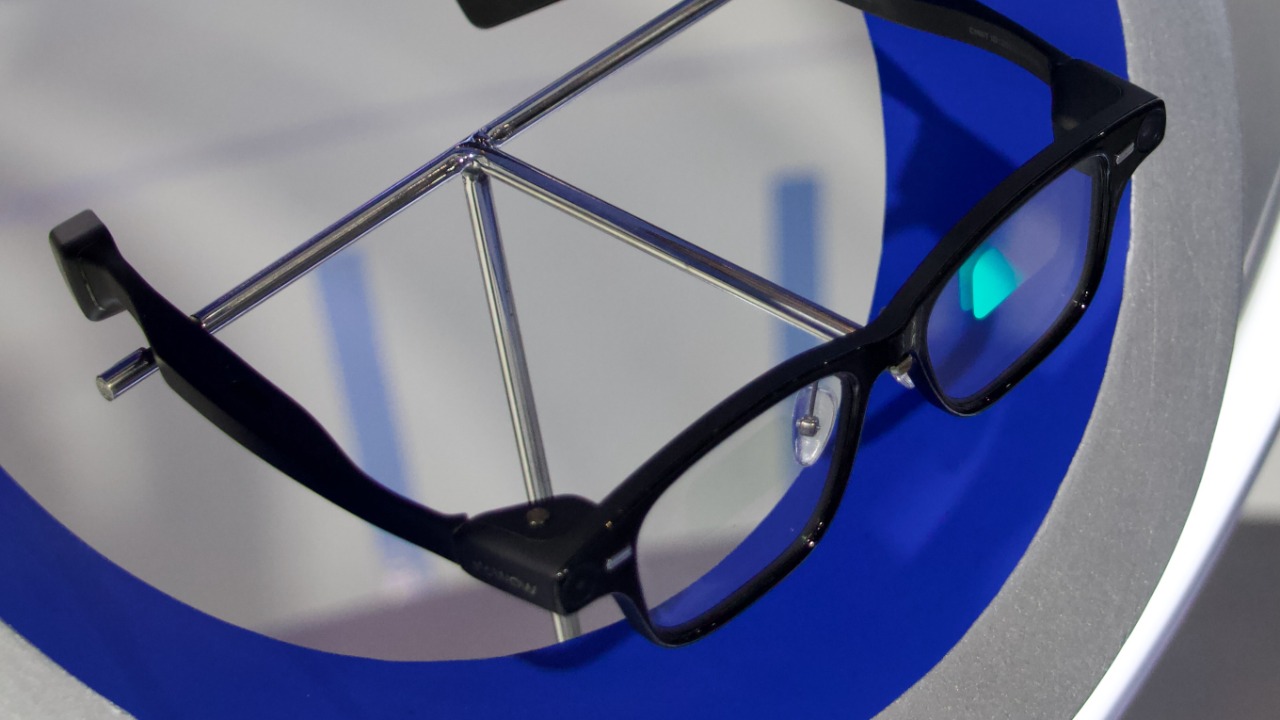

The new push is anchored in Gemini, with Google unveiling plans for its first Gemini AI‑powered glasses and explicitly explaining why it is returning to smart eyewear after that earlier misstep. Instead of a notification ticker hovering in your peripheral vision, the new glasses are pitched as a context‑aware assistant that can see what you see, translate text, describe scenes, and answer questions in real time. The company is also more explicit about battery life and privacy constraints, lessons it learned painfully the first time around.

Android XR: turning a phone OS into a spatial platform

The most important piece of Google’s mixed reality plan is not a gadget at all; it is the operating system. With the introduction of Android XR, the company is positioning a new platform alongside mobile, TV, and Auto so that extended reality is treated as a first‑class citizen inside Android rather than a bolt‑on experiment. Android XR is designed to let developers build for AI‑powered headsets and glasses while still leaning on the familiar Android app model and tools.

By putting Android XR on the same footing as Android for phones, TV, and Auto, Google is signaling that mixed reality will share the same ecosystem instead of fragmenting into yet another incompatible platform. That matters for everything from Play Store distribution to how notifications, identity, and payments work when you move from a Pixel phone to a headset or a car. It also gives hardware partners a clear target: build devices that can plug into Android XR and inherit Google’s AI services, rather than inventing their own operating systems from scratch.

Google and Samsung: a joint bet on headsets and “live AI”

Google knows it cannot win the hardware race alone, which is why it has leaned heavily on partners for the first wave of mixed reality devices. Earlier in its reset, Google and Samsung committed to a joint mixed reality headset that looks more like a traditional VR device than a pair of glasses, a deliberate choice to prioritize performance and comfort over fashion. That headset is meant to be a proving ground for Android XR, Gemini, and new interaction models before those features trickle down into lighter wearables.

When the mixed reality headset was announced, the companies also introduced Android XR, the operating system that will power it and, eventually, smart glasses. That shared OS is what lets Samsung and Google experiment with “live AI” features on the bulkier Galaxy XR headset while keeping a path open to slimmer eyewear. It also gives both companies a way to respond to Meta and Apple with a unified platform rather than a patchwork of one‑off devices.

Galaxy XR and the bridge to everyday smart glasses

Right now, the most visible expression of Google’s mixed reality ambitions is not a Google‑branded headset at all; it is Samsung’s. Samsung and Google are leaning on the bulky, decidedly un‑glasses‑like Galaxy XR to explore how they can bring “live AI” into people’s field of view. That device is intentionally overbuilt, with room for powerful chips, large batteries, and complex sensors that would never fit into a Ray‑Ban‑style frame today.

Google’s own roadmap makes clear that this is a stepping stone rather than the endgame. The company has already said that it will introduce new AI glasses and a Galaxy XR update in 2026, with both wearables running on the same platform and designed to balance weight and style. In that framing, Galaxy XR is a development kit that lives on consumers’ faces, a place to test complex control schemes, while the eventual glasses inherit only the features that prove genuinely useful in daily life.

Gemini AI glasses: from demo to daily assistant

Google’s new Gemini AI‑powered glasses are the clearest sign that the company believes smart eyewear is ready to move beyond prototypes. In demos, the glasses use Gemini to interpret the world in front of you, answer spoken questions, and overlay information without forcing you to pull out a phone. The company has been explicit that, after Google Glass, it needed a product that felt less like a camera on your face and more like a personal interpreter and tutor that happens to live in your lenses.

That shift is not just about marketing; it is about the underlying software. Google also outlined several software features to address battery life and privacy concerns, including on‑device processing for sensitive tasks and clearer indicators when the glasses are capturing images or audio. Some demos have also featured Project Aura, an Android XR glasses project from XREAL, and the name fits the idea that the assistant should feel like a subtle halo of information rather than a screen glued to your eye. If the company can keep that aura helpful instead of intrusive, Gemini glasses could become the default way many people access AI.

Cloud streaming and the secret sauce behind life‑like AR

Even the best glasses cannot fake convincing mixed reality without serious graphics power, and that is where Google’s cloud business becomes a strategic weapon. With Immersive View and Immersive Stream for XR, enterprises can tap into the power of Google Cloud and Google Maps data to render detailed 3D environments and then stream them to relatively lightweight devices. That approach lets a headset or phone act more like a thin client, offloading the heavy lifting to data centers while still delivering life‑like augmented reality.

For Google, this is not just a technical trick; it is a business model. If immersive apps rely on Google Cloud and Google Maps services to function, then every navigation overlay, digital twin, or remote assistance session becomes a recurring cloud workload. It also means that the same mapping and streaming stack that powers Immersive View in Google Maps can be repurposed for industrial training, retail showrooms, or tourism, all without requiring users to buy a gaming‑class PC.

A quiet reset that reshaped Google’s XR priorities

None of this mixed reality push happened in a straight line. Inside the company, augmented reality has been described as “a sensitive topic,” and the internal reset that followed the first wave of glasses forced executives to pick a narrower set of bets. Reporting on that period makes clear that Google and Samsung are still building a joint device more akin to a mixed‑reality headset, while Google declined to comment on plans beyond that. The message was that the company would rather ship one solid platform with partners than scatter its efforts across multiple in‑house gadgets.

That reset also clarified which teams own what. Android is responsible for the XR operating system, Google Cloud handles Immersive Stream and enterprise tools, and the hardware group focuses on reference designs like Gemini glasses. The result is a more modular strategy that lets Google plug into partner devices like Galaxy XR while still keeping control of the software stack. It is a far cry from the early Glass era, when the company tried to own the entire experience and ended up alienating both users and regulators.

Laying the groundwork across wearables, phones, and PCs

What makes Google’s current approach feel different is how many surfaces it touches at once. The company is not just building a headset; it is weaving mixed reality hooks into Wear OS watches, Android phones, Chromebooks, and even Windows PCs. In one recent overview, the author admitted, “Now I will fully admit this is a lot and it took me a bit to process everything,” before concluding that the breadth of initiatives only makes sense when you see them as a foundation for a future where XR is ambient. That reflection came after walking through each piece of Google’s sprawling roadmap.

In practical terms, that foundation looks like tighter integration between Android XR and existing devices. Phones can act as controllers or secondary displays for headsets, PCs can mirror a headset’s display, and watches can handle quick interactions when you do not want to raise your hands in mid‑air. By spreading the intelligence across devices, Google reduces the pressure on any single gadget to be perfect on day one. It also makes it easier for users to dip into mixed reality in small ways, like glancing at a navigation arrow on a watch, before committing to full‑time glasses.

Why smart glasses still have a lot to prove

For all the momentum, smart glasses remain a risky bet. Even sympathetic observers point out that these devices still have a lot to prove, from comfort and style to input methods that do not feel awkward in public. The fact that Samsung and Google are leaning on the bulky Galaxy XR to explore complex ways to control smart glasses underscores how unsolved the interaction problem remains. Voice, gaze, hand tracking, and subtle gestures all have trade‑offs in noisy, crowded, or privacy‑sensitive environments.

Google’s answer is to treat this as a long game. The company has already said that it will introduce new AI glasses and a Galaxy XR update in 2026, giving itself multiple hardware cycles to iterate on weight, style, and controls. Both wearables will run on the same platform, which means improvements in one can quickly benefit the other. If that cadence holds, the mixed reality future Google is building will not arrive as a single breakthrough moment, but as a steady accumulation of small, well‑tested steps that eventually make glasses feel as ordinary as a smartphone.
