Humanoid robots have been promised for decades, but most still look and move like machines. Ameca changes that, with a face and body language so nuanced that people instinctively treat it less like a gadget and more like a character standing in front of them. I want to unpack what makes Ameca feel so uncannily alive, how it actually works, and why this particular robot has become a touchstone in the debate over where human‑like machines fit into everyday life.

Why Ameca looks so unsettlingly human

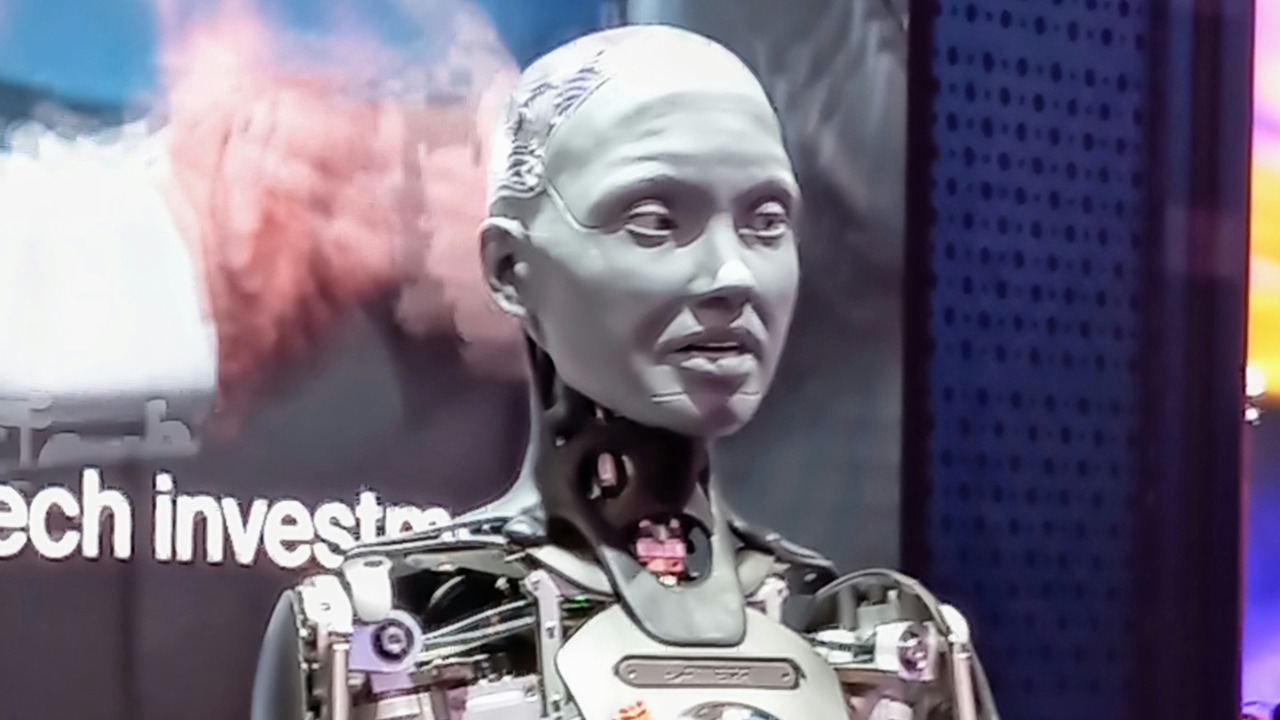

What sets Ameca apart at first glance is not its height or its metal frame, but its face. The robot’s grey, stylized skin and exposed mechanical neck avoid the full “rubber mask” look, yet its eyes track people, its brows knit in confusion, and its mouth curls into a wry half‑smile with a fluidity that is rare in robotics. In live demos, Ameca’s micro‑expressions arrive in quick succession, so a raised eyebrow flows into a look of surprise and then a relaxed grin, which makes the robot feel less like a static prop and more like an actor hitting emotional beats in real time.

Those reactions are not random. The company behind the robot, Engineered Arts, has built Ameca as a modular platform with a highly articulated head, neck, and torso designed specifically for expressive interaction, and its official specification highlights the density of motors in the face and upper body that enable this range of motion. The system is built to support advanced conversational AI and vision systems, so the robot can lock eyes with a person, tilt its head as if listening, then respond with synchronized lip movements that match the generated speech, all coordinated through the platform described on the Ameca product page.

Inside the hardware that powers Ameca’s realism

Underneath the expressive shell, Ameca is a carefully engineered stack of actuators, sensors, and control electronics. The robot’s upper body is packed with motors that drive its shoulders, arms, neck, and facial features, while the lower body is designed to be modular so it can be mounted on a static base or integrated with future leg systems. In practice, that means the robot can gesture with its hands, shrug, lean slightly forward, and track a person’s movement with its head, all while anchored to a pedestal that hides power and data connections.

Engineered Arts presents Ameca as a development platform rather than a one‑off showpiece, emphasizing that its hardware is built to be upgraded as new components and software emerge. In video demonstrations, technicians show how individual modules can be swapped or serviced without dismantling the entire robot, which is crucial for research labs and companies that want to iterate quickly on human‑robot interaction. A detailed walk‑through of the system’s joints, control boards, and safety features appears in one engineering deep dive, which pairs close‑up shots of the mechanics with commentary on how the design choices support lifelike motion.

The AI brain: how Ameca talks, listens, and reacts

Ameca’s physical presence would not matter much if the robot could not hold a conversation. What makes encounters with it memorable is the way its speech, gaze, and gestures line up with the flow of dialogue. In public demos, Ameca listens to questions, pauses as if thinking, then responds with natural pacing and intonation, while its eyes flicker and its hands move in sync with the words. That choreography is driven by a combination of speech recognition, large language models, and motion control software that maps conversational cues to specific facial and body animations.

Engineered Arts positions Ameca as “AI agnostic,” meaning the robot can be connected to different conversational engines depending on the customer’s needs, from cloud‑based language models to on‑premise systems tuned for specific industries. In practice, that flexibility shows up in demonstrations where Ameca answers open‑ended questions, cracks simple jokes, or explains its own capabilities, all while maintaining eye contact and expressive timing. A widely shared clip from a trade show floor captures the robot responding to a series of unscripted prompts, with its verbal replies and subtle expressions coordinated through the integrated AI stack highlighted in a live interaction demo.
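To make the “AI agnostic” idea concrete, here is a minimal Python sketch of how a swappable conversational engine and a cue‑to‑animation mapping might fit together. It is an illustration under stated assumptions, not Engineered Arts’ software: `ConversationEngine`, `EchoEngine`, `CUE_TABLE`, and the animation names are all hypothetical, and the punctuation check stands in for whatever cue detection a real system would use.

```python
from dataclasses import dataclass
from typing import Protocol, Tuple


class ConversationEngine(Protocol):
    """Hypothetical interface: anything that turns a transcript into a reply."""

    def reply(self, transcript: str) -> str: ...


class EchoEngine:
    """Stand-in for a cloud or on-premise language model (assumption)."""

    def reply(self, transcript: str) -> str:
        return f"You asked: {transcript}"


@dataclass(frozen=True)
class Cue:
    expression: str  # hypothetical facial animation name
    gesture: str     # hypothetical body animation name


# Hypothetical mapping from a conversational cue to face and body animations.
CUE_TABLE = {
    "question": Cue("raised_brows", "head_tilt"),
    "statement": Cue("neutral_smile", "open_hands"),
}


def classify(text: str) -> str:
    """Toy cue detector: treats a trailing question mark as a question."""
    return "question" if text.strip().endswith("?") else "statement"


def respond(engine: ConversationEngine, transcript: str) -> Tuple[str, Cue]:
    """Ask whichever engine is plugged in, then pick a matching animation."""
    text = engine.reply(transcript)
    return text, CUE_TABLE[classify(text)]
```

Because `respond` depends only on the `reply` method, a different engine could be swapped in without touching the animation logic, which is the kind of separation the “AI agnostic” label implies.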

From CES floors to viral feeds: Ameca’s breakout moment

Ameca’s leap from lab project to cultural reference point happened in front of smartphone cameras. When the robot appeared at major tech events, visitors lined up to ask it questions, film its reactions, and share those clips across social platforms. The combination of a neutral grey face, bright eyes, and eerily human timing made those short videos instantly shareable, and within days, Ameca was being stitched into reaction memes and debated in comment threads about the future of AI and robotics.

One early showcase at a consumer electronics expo captured the mood perfectly, as attendees alternated between delight and discomfort while the robot greeted them with a friendly “hello,” widened its eyes in mock surprise, and followed up with a quip. A widely circulated video from that floor shows Ameca turning its head smoothly toward the camera, raising its eyebrows, and then smiling as it responds to a question about whether it is “amazing or terrifying,” a moment preserved in a viral expo clip.

What “most advanced humanoid” really means

As Ameca’s videos spread, it was quickly labeled one of the most advanced humanoid robots in the world, a phrase that can mean different things depending on what you value. In this case, the claim is less about raw strength or speed and more about the sophistication of human‑robot interaction. Ameca is not designed to lift heavy boxes or run across rough terrain; it is built to stand in front of people, read social cues, and respond in ways that feel intuitive. That focus on interaction over locomotion is what makes it a benchmark for lifelike behavior rather than industrial performance.

In a detailed segment that walks through the robot’s capabilities, a technology reporter describes how Ameca’s expressive range, conversational AI integration, and modular design combine to create what they call one of the most advanced humanoid platforms currently available. The piece contrasts Ameca’s nuanced facial control and social presence with other robots that prioritize mobility or manipulation, arguing that this system represents a different frontier in robotics centered on communication and presence, a point underscored in a broadcast feature on humanoid robots.

How children and families are meeting Ameca

For many people, especially younger audiences, Ameca is their first close‑up encounter with a humanoid robot that can look them in the eye and talk back. Educational segments aimed at children introduce the robot in accessible language, focusing on its ability to move its face, wave, and answer questions rather than on the underlying code. Those pieces often frame Ameca as a glimpse of how robots might one day help in schools, museums, or hospitals, while also acknowledging that its lifelike appearance can feel strange at first.

One family‑friendly explainer walks through how Ameca’s eyes track movement, how its mouth shapes words, and how its software lets it respond to simple queries, all while reassuring viewers that the robot is controlled by humans and programmed systems. The segment uses clear visuals and straightforward narration to demystify the technology, presenting Ameca as a tool for learning about engineering and AI rather than a sci‑fi threat, an approach reflected in a children’s news report that introduces a realistic talking robot to a young audience.

Why Ameca triggers the “uncanny valley” debate

Ameca’s realism is also what makes it unsettling for some viewers. The robot sits in the so‑called “uncanny valley,” the psychological zone where something looks and behaves almost, but not quite, human, which can provoke discomfort. Its face is intentionally stylized to avoid a full human likeness, yet the precision of its eye movements and expressions pushes it close enough that people sometimes describe a shiver when it suddenly turns and appears to make direct eye contact. That tension between familiarity and strangeness is part of why clips of Ameca spread so quickly.

Social media threads featuring the robot are filled with comments that swing between admiration and anxiety, with some users marveling at the engineering and others worrying about what such lifelike machines might mean for jobs or privacy. In one widely shared video, a presenter stands next to Ameca and asks viewers whether they find the robot “amazing or terrifying,” capturing both reactions in a single phrase as the robot cycles through a series of expressive looks. That moment, and the flood of responses it generated, is preserved in a short clip that spotlights Ameca’s realism.

Real‑world roles: from research labs to front‑of‑house

Beyond the viral videos, Ameca is already being used in practical settings where human‑robot interaction is the main event. Research institutions deploy the platform to study how people respond to different expressions, tones of voice, and conversational strategies, using the robot as a consistent, programmable “actor” in controlled experiments. Because its face and body can reproduce the same gesture or expression repeatedly, it is well suited for studies that need to isolate specific social cues and measure how subjects react.

Commercial customers, meanwhile, are exploring Ameca as a front‑of‑house presence in venues like science centers, corporate lobbies, and trade show booths. In those roles, the robot can greet visitors, answer frequently asked questions, and provide a memorable photo opportunity that reinforces a brand’s interest in cutting‑edge technology. A video tour of one such deployment shows Ameca welcoming guests, gesturing toward exhibits, and handling basic queries about opening hours and directions, all while maintaining the expressive style that made it famous, as seen in a showcase of Ameca in a public space.

How Ameca compares with other humanoid robots

Ameca does not exist in a vacuum. It is part of a broader wave of humanoid machines that includes robots focused on warehouse work, home assistance, and industrial tasks. Where some of those systems prioritize legged locomotion or heavy‑duty arms, Ameca leans into social presence, which makes direct comparisons tricky. It is less about whether this robot can carry a box up a flight of stairs and more about whether it can hold a nuanced conversation without feeling robotic in the pejorative sense.

Technology commentators who have seen multiple humanoid platforms side by side often point out that Ameca’s facial control and conversational timing put it in a different category from robots that mainly demonstrate balance or agility. In one panel discussion, a host contrasts Ameca’s expressive head and upper body with more utilitarian designs, arguing that the future of humanoids will likely involve specialization rather than a single “do everything” machine. That perspective is echoed in a feature on realistic humanoids, which juxtaposes Ameca’s lifelike interactions with footage of other robots performing physical tasks.

Public fascination and community reactions

As Ameca’s profile has grown, so has the online community that follows its development. Enthusiasts share new clips, dissect changes in the robot’s behavior, and speculate about upcoming hardware or software upgrades. Some focus on the technical details, trading notes on actuator design or AI integration, while others are drawn to the robot as a character, giving it nicknames and imagining storylines in which it plays a role. That blend of engineering interest and pop‑culture fandom is a sign of how deeply a machine can embed itself in the public imagination once it crosses a certain threshold of lifelike behavior.

Dedicated groups on social platforms collect sightings of Ameca from trade shows, labs, and media appearances, often debating whether each new video shows a genuine advance or just a clever demo. In one such community, members share behind‑the‑scenes photos, discuss the ethics of humanoid robots, and link to fresh footage whenever the robot appears at a new event, creating an informal archive of its evolution that can be seen in a fan discussion thread.

What Ameca signals about the future of human‑robot interaction

Ameca’s rise hints at a future in which robots are judged less on how fast they can run and more on how well they can share a space with people. The robot’s success as a demonstrator shows that expressive faces, natural timing, and conversational competence are not just cosmetic features; they are central to whether humans feel comfortable engaging with machines in public and professional settings. As more organizations experiment with humanoid platforms, the lessons learned from Ameca’s design and deployment are likely to shape how future systems look, move, and speak.

At the same time, the robot’s popularity has sparked serious conversations about ethics, privacy, and labor. When a machine can mimic human expressions so convincingly, questions arise about transparency, consent, and the potential for manipulation in settings like retail or customer service. Technology analysts who have profiled Ameca often end their segments by noting that the robot is both a marvel of engineering and a mirror for our hopes and fears about AI. That duality is captured in a comprehensive behind‑the‑scenes look, a long‑form video that follows the robot through interviews, demos, and audience reactions.

Seeing Ameca up close

For anyone who has only encountered Ameca through short clips, seeing the robot in a longer, unedited interaction can shift the perception from novelty to nuance. Extended demonstrations show how it handles follow‑up questions, recovers from misheard prompts, and uses body language to keep a conversation flowing. Those longer sessions also reveal the limits of the current technology, including occasional pauses, misinterpretations, or slightly off‑beat gestures, which are reminders that behind the lifelike exterior is a complex but still fallible machine.

Several in‑depth videos document these longer encounters, following Ameca as it chats with presenters, responds to audience questions, and explains aspects of its own design. In one such piece, the robot discusses its role as a platform for AI research while its hands move in subtle emphasis, offering a more complete picture than a 15‑second viral clip can provide. That fuller view of the robot’s strengths and quirks is captured in a conversational walkthrough that lets viewers watch Ameca operate over an extended period.
