Recent analysis suggests that one in five security breaches is now attributed to AI-written code, a significant shift in the threat landscape. Despite that figure, developers and security leaders remain hopeful that AI tooling will eventually overcome its current pitfalls. Experts nonetheless caution against over-reliance on AI-generated code and urge a more critical approach to integrating it into software development.

The Surge in AI-Generated Code Vulnerabilities

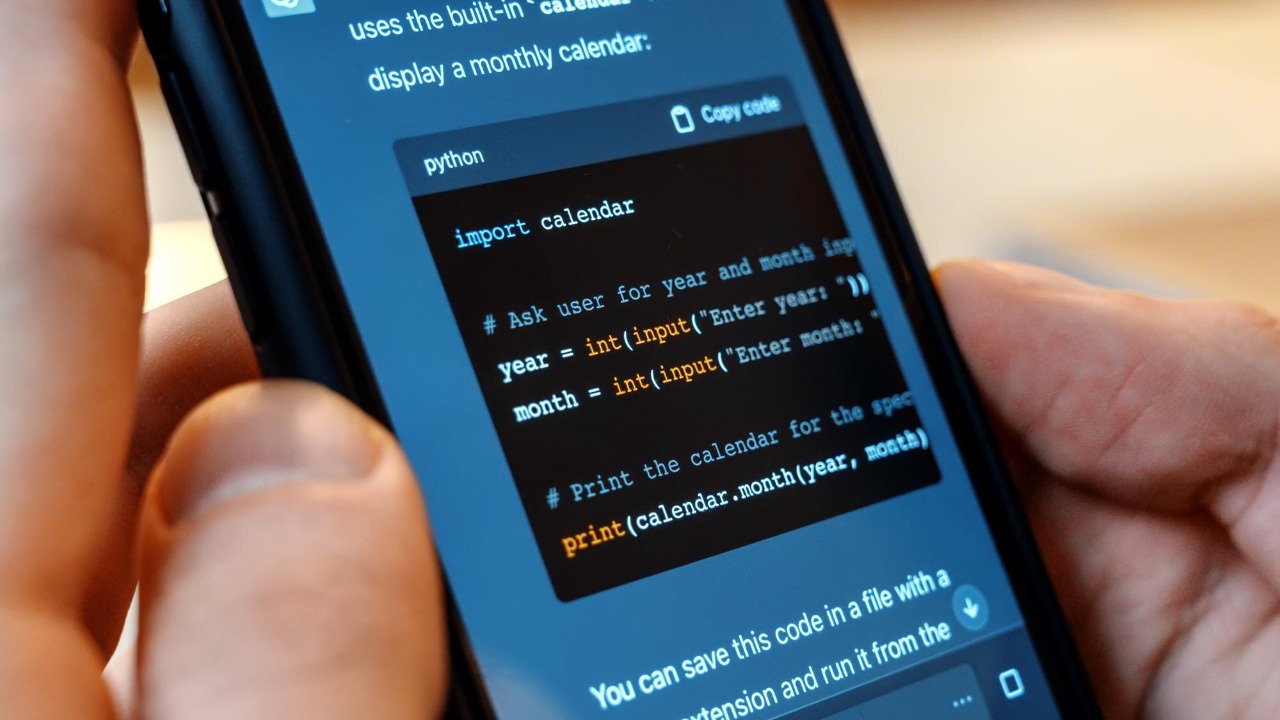

The statistic that one in five security breaches is now thought to be caused by AI-written code highlights a critical vulnerability in modern cybersecurity. AI tools, while efficient, often introduce subtle flaws into production environments. These flaws can range from insecure dependencies to logic gaps that are easily overlooked during the development process. The rapid pace at which AI can generate code often outstrips traditional review processes, allowing these vulnerabilities to go undetected until a breach occurs. This trend underscores the need for more robust oversight and verification processes in handling AI-generated outputs.

Real-world examples illustrate how these vulnerabilities manifest. For instance, AI-generated code might inadvertently include outdated libraries with known security issues, or it may fail to implement proper authentication protocols, leaving systems exposed to unauthorized access. The challenge lies in the initial detection of these flaws, as the sheer volume and speed of AI code generation can overwhelm existing review mechanisms. This has led to a situation where breaches occur before vulnerabilities are identified, emphasizing the need for enhanced detection tools and methodologies.
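One way to picture the outdated-library problem is a check that compares pinned dependencies against a list of versions known to contain fixes. The sketch below is only illustrative: the package names, the `KNOWN_FIXED` table, and the helper functions are hypothetical, and a real pipeline would draw advisories from a maintained vulnerability database rather than a hard-coded dictionary.

```python
# Hypothetical advisory data: package name -> first version containing the fix.
# These names and versions are invented for illustration only.
KNOWN_FIXED = {
    "examplelib": (2, 4, 0),
    "demo-http": (1, 10, 2),
}


def parse_version(text):
    """Turn '1.9.0' into (1, 9, 0) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.split("."))


def find_outdated(requirements):
    """Return (name, pinned, fixed) for every exact pin older than the fixed version."""
    findings = []
    for line in requirements:
        line = line.strip()
        if not line or line.startswith("#") or "==" not in line:
            continue  # this sketch only understands exact 'name==x.y.z' pins
        name, pinned = line.split("==", 1)
        name = name.strip().lower()
        pinned = pinned.strip()
        fixed = KNOWN_FIXED.get(name)
        if fixed is not None and parse_version(pinned) < fixed:
            findings.append((name, pinned, ".".join(str(n) for n in fixed)))
    return findings


if __name__ == "__main__":
    pins = ["examplelib==2.3.9", "demo-http==1.10.2", "other==0.1.0"]
    for name, pinned, fixed in find_outdated(pins):
        print(f"{name} {pinned} predates the fixed release {fixed}")
```

Comparing versions as integer tuples rather than strings matters here, since a plain string comparison would wrongly rank `1.10.2` below `1.9.0`.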

Developer and Leader Perspectives on AI Adoption

Despite the risks, there is a prevailing optimism among developers and security leaders regarding AI’s potential. Many believe that the technology will eventually “come good,” offering significant efficiency gains in software development. This confidence is reflected in the widespread adoption of AI tools for routine coding tasks, even as the risk of breaches tied to AI-generated code remains a concern. Developers are increasingly relying on AI to automate repetitive tasks, freeing up time for more complex problem-solving activities.

However, this optimism is tempered by the recognition of existing training gaps. Developers must be equipped to manually verify AI outputs to mitigate the risk of breaches. This involves not only understanding the limitations of AI-generated code but also implementing rigorous review processes to catch potential errors. As AI continues to evolve, the need for comprehensive training programs becomes more pressing, ensuring that developers can effectively integrate AI tools while maintaining security standards.

Key Risks of Trusting AI-Written Code

Experts urge caution when trusting AI-written code, pointing to hallucinations and biases in AI outputs that can lead to security flaws. These issues are not merely theoretical: unvetted AI-generated code can leave systems open to injection attacks or protected only by weak encryption. The finding that one in five security breaches is linked to AI-written code underscores the severity of these risks.
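To make the injection risk concrete, here is a minimal sketch using Python's standard `sqlite3` module. The table, the data, and the function names are assumptions made up for this example; the point is only the contrast between building SQL by string concatenation, a pattern that can slip through when generated code is accepted without review, and passing user input as a bound parameter.

```python
import sqlite3


def make_db():
    """Create a throwaway in-memory database with two illustrative users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable: user input is spliced directly into the SQL text,
    # so quotes in the input can change the meaning of the query.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Safer: the driver binds the value, so it is treated as data only.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"
    print("unsafe rows:", len(find_user_unsafe(conn, payload)))
    print("safe rows:", len(find_user_safe(conn, payload)))
```

With the crafted input, the concatenated query matches every row in the table, while the parameterized version matches none.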

Organizations face significant consequences when these vulnerabilities are exploited. Compliance failures are a major concern, as AI-generated code can evade standard auditing processes, leading to breaches that violate regulatory requirements. This exposes organizations to legal liability, damages their reputation, and erodes customer trust. Companies therefore need robust oversight mechanisms to ensure that AI-generated code meets security and compliance standards.

Strategies for Mitigating AI Code Risks

To address the risks associated with AI-generated code, organizations need established best practices for code review. This includes integrating human oversight into the development process so that the reported one-in-five share of breaches does not grow. By combining AI’s efficiency with human expertise, organizations can better identify and rectify potential vulnerabilities before they lead to breaches. Emerging tools and policies, such as automated scanning tailored for AI outputs, are also being developed to enhance security in development pipelines.
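As a rough illustration of what automated scanning ahead of human review could look like, the sketch below uses Python's standard `ast` module to flag a few risky patterns in a snippet of source code. The rule sets (`RISKY_CALLS`, `WEAK_HASHES`) and the `scan_source` function are hypothetical and deliberately tiny; they are not drawn from any specific tool and would not replace a real static analyzer or a reviewer.

```python
import ast

# Illustrative rule sets only; a real scanner would cover far more patterns.
RISKY_CALLS = {"eval", "exec"}
WEAK_HASHES = {"md5", "sha1"}


def scan_source(source):
    """Return sorted (line number, message) findings for the given Python source."""
    findings = []
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        if isinstance(func, ast.Name) and func.id in RISKY_CALLS:
            # Direct calls such as eval(...) or exec(...)
            findings.append((node.lineno, f"call to {func.id}()"))
        elif (
            isinstance(func, ast.Attribute)
            and func.attr in WEAK_HASHES
            and isinstance(func.value, ast.Name)
            and func.value.id == "hashlib"
        ):
            # Calls such as hashlib.md5(...)
            findings.append((node.lineno, f"weak hash hashlib.{func.attr}()"))
    return sorted(findings)


if __name__ == "__main__":
    generated = (
        "import hashlib\n"
        "value = eval(user_input)\n"
        "digest = hashlib.md5(b'secret')\n"
        "better = hashlib.sha256(b'secret')\n"
    )
    for lineno, message in scan_source(generated):
        print(f"line {lineno}: {message}")
```

A check like this could run as a gate that routes flagged changes to a human reviewer, which keeps the reviewer's attention on the code most likely to need it.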

Long-term adaptations are necessary to fully realize the potential of AI in software development. This includes educating developers on the importance of not fully trusting AI-written code, as advised in mid-2025 guidance. By fostering a culture of critical evaluation and continuous learning, organizations can better navigate the challenges posed by AI-generated code. Ultimately, the goal is to harness AI’s capabilities while minimizing its risks, ensuring that technology serves as a tool for innovation rather than a source of vulnerability.
