A 2022 survey of more than 2,000 AI researchers revealed a significant decline in their trust in AI systems. Initially, 69% of these experts viewed AI as highly capable, but after hands-on experience, only 37% maintained that view. This growing skepticism among the scientists most directly engaged with the technology challenges the public narrative of AI’s rapid progress. Their frequent encounters with limitations in reliability and transparency show how prolonged use exposes flaws that the hype often overlooks (Futurism).

Initial Hype Surrounding AI Capabilities

The initial optimism about AI capabilities was fueled by media and industry reports portraying AI as transformative. Claims of near-human intelligence in models like GPT-4 contributed to investments exceeding $100 billion in 2023. Endorsements from influential figures such as Elon Musk and Geoffrey Hinton, who highlighted AI’s potential despite internal caveats from developers, amplified this enthusiasm further. These endorsements fostered a perception of AI as infallible that did not always match the reality of its capabilities (Futurism).

Promotional materials from companies like OpenAI emphasized breakthroughs without reporting error rates, setting unrealistic expectations for scientific applications. By promising to revolutionize entire industries while glossing over the technology’s inherent limitations, these materials opened a gap between promotional narratives and practical reality that fed the initial hype.

Scientists’ Direct Experiences with AI Limitations

AI researchers at institutions such as Stanford and MIT have reported significant limitations in AI systems, particularly large language models, which have been found to generate false data in 20-30% of scientific queries, a failure mode known as hallucination. Error rates this high make it difficult for researchers to rely on AI for accurate data generation and analysis (Futurism).

Case studies have also revealed that AI tools struggle to perform reliably in practice. Image recognition tools, for instance, have misclassified medical scans at error rates of up to 15% in real-world lab settings, a serious shortcoming in critical applications where precision is paramount. One surveyed scientist voiced the frustration directly: “The more I use it, the more I see it’s a black box.” This opacity in AI decision-making further erodes trust.

Key Technical Flaws Eroding Trust

Biases in training data are another significant flaw, skewing AI outputs. Benchmark studies have found that AI models perpetuate gender stereotypes in 40% of analyzed responses. Such bias not only reduces the accuracy of AI outputs but also raises ethical concerns about the technology’s effect on societal norms and values (Futurism).

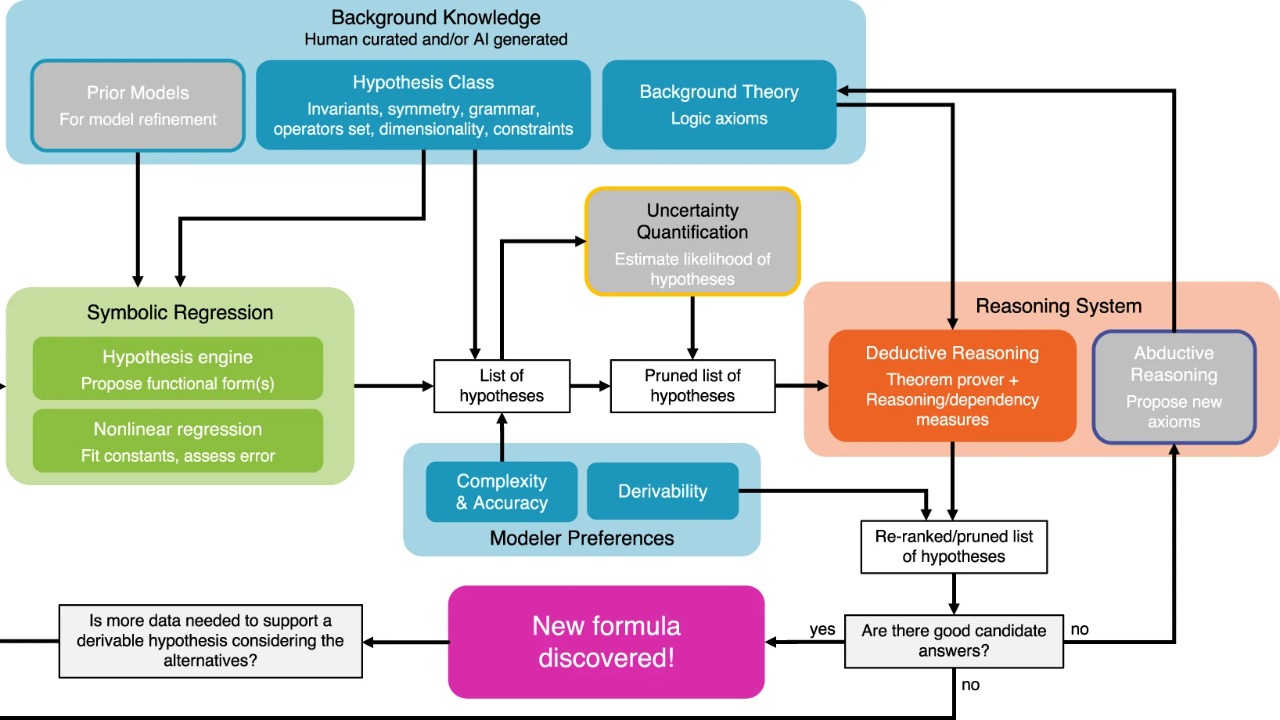

Poor generalization further erodes trust: performance degrades in complex, novel scenarios that fall outside the training distribution. Experiments by teams at Google DeepMind have highlighted this problem, underscoring the need for AI systems that adapt to new environments without sacrificing accuracy. Verification is a further obstacle. AI-generated hypotheses are difficult to audit and have required manual correction in 60% of cases, complicating the integration of AI into scientific research.

Implications for Scientific Research and Collaboration

Declining trust in AI has significant implications for scientific research and collaboration. Biologists and physicists have reduced their use of AI in peer-reviewed studies by 25% since 2021, a sign of caution about relying on AI for critical research tasks. The trend underscores the need for more robust and transparent AI systems that can reliably support scientific work (Futurism).

Organizations such as the Association for the Advancement of Artificial Intelligence (AAAI) have called for regulatory oversight to establish transparency standards and rebuild confidence in AI systems, emphasizing accountability and ethics in AI development and deployment. Effects on funding are also emerging, as grant proposals increasingly require hybrid human-AI methodologies to mitigate the risks of overreliance. This shift reflects a more balanced approach to integrating AI into scientific research, one that keeps human expertise central to the process.