For buyers grappling with the high cost of Nvidia’s AI accelerators, a new contender has emerged: a 10.8 kW server cluster with 32 Intel GPUs and a combined 768 GB of VRAM. It is built around the liquid-cooled, single-slot, dual-GPU Intel Arc Pro B60 card with 48 GB of memory (24 GB per GPU). The Chinese GPU vendor that launched the card fits four of them in a single system, positioning the design as a cost-effective and scalable alternative for AI hardware deployment.

The Rise of Affordable AI Alternatives to Nvidia

The AI market has long been dominated by Nvidia’s high-performance but costly accelerators. Cost-effective alternatives such as this 10.8 kW cluster are starting to change that picture. The Intel-based design is aimed at users who need large amounts of VRAM but are deterred by Nvidia’s premium pricing. Alternatives like it could widen access to AI hardware and add competition to the market.

Emerging vendors are playing a part in this shift. By building their own board designs around Intel’s GPUs, such as this dual-GPU, single-slot card, they can offer lower-cost hardware to a wider range of users. The trend is likely to continue as demand for AI hardware grows and more vendors enter the market.

Overview of the 10.8 kW Server Cluster

The cluster’s total power draw of 10.8 kW has direct implications for data center energy management and scalability. Spread across 32 GPUs, it averages 337.5 W per GPU, a figure that also has to cover CPUs, fans, pumps and power-supply losses, and the facility must deliver and remove that much power continuously under full load. In return, the 32 Intel GPUs provide a platform for large-scale AI workloads, and the single-slot cards allow them to be packed densely.
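
A quick back-of-the-envelope check of the power figures, as a minimal Python sketch: it divides the article’s 10.8 kW total across the 32 GPUs and converts the draw into energy per day. The `power_summary` helper is hypothetical, and running at full load for 24 hours is our assumption, not a vendor figure.

```python
def power_summary(total_watts: float, num_gpus: int, hours: float = 24.0) -> dict:
    """Average per-GPU share of the total draw, plus energy used over `hours`.

    Assumes the cluster runs at its full rated draw the whole time (hypothetical).
    The per-GPU figure averages the *whole* cluster draw, so it also absorbs
    CPUs, fans, pumps and power-supply losses.
    """
    return {
        "watts_per_gpu": total_watts / num_gpus,
        "kwh": total_watts * hours / 1000.0,  # W * h -> kWh
    }


summary = power_summary(10_800, 32)
print(summary)  # {'watts_per_gpu': 337.5, 'kwh': 259.2}
```

At full load the cluster would use about 259 kWh per day, which is the kind of number a data center has to budget for in both power delivery and cooling.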

The other headline figure is memory. The cluster’s 768 GB of VRAM is spread across 32 GPUs at 24 GB each, which suits memory-intensive tasks such as model training, provided the software can shard a model and its training state across devices. That capacity, paired with 32 GPUs, makes the cluster a serious contender in the AI hardware market.
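
To give a rough sense of what 768 GB means for training, the sketch below applies a common rule of thumb for mixed-precision training with the Adam optimizer: about 16 bytes per parameter for weights, gradients and optimizer state. That per-parameter figure is our assumption, not something the vendor states. The estimate ignores activations and framework overhead and assumes the training state can be sharded evenly across all 32 GPUs, so treat it as an upper bound.

```python
BYTES_PER_GB = 1e9  # decimal GB, a simplification

# Mixed-precision Adam rule of thumb (assumption, not from the article):
# 2 B fp16 weights + 2 B fp16 gradients + 4 B fp32 master weights
# + 4 B + 4 B for Adam's two moment estimates = 16 B per parameter.
TRAINING_BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4


def max_trainable_params(total_vram_gb: float,
                         bytes_per_param: int = TRAINING_BYTES_PER_PARAM) -> float:
    """Upper bound on parameters whose training state fits in `total_vram_gb`.

    Ignores activations, fragmentation and runtime overhead, and assumes the
    state is sharded evenly across every GPU in the cluster.
    """
    return total_vram_gb * BYTES_PER_GB / bytes_per_param


params = max_trainable_params(768)
print(f"{params / 1e9:.0f} billion parameters")  # 48 billion parameters
```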

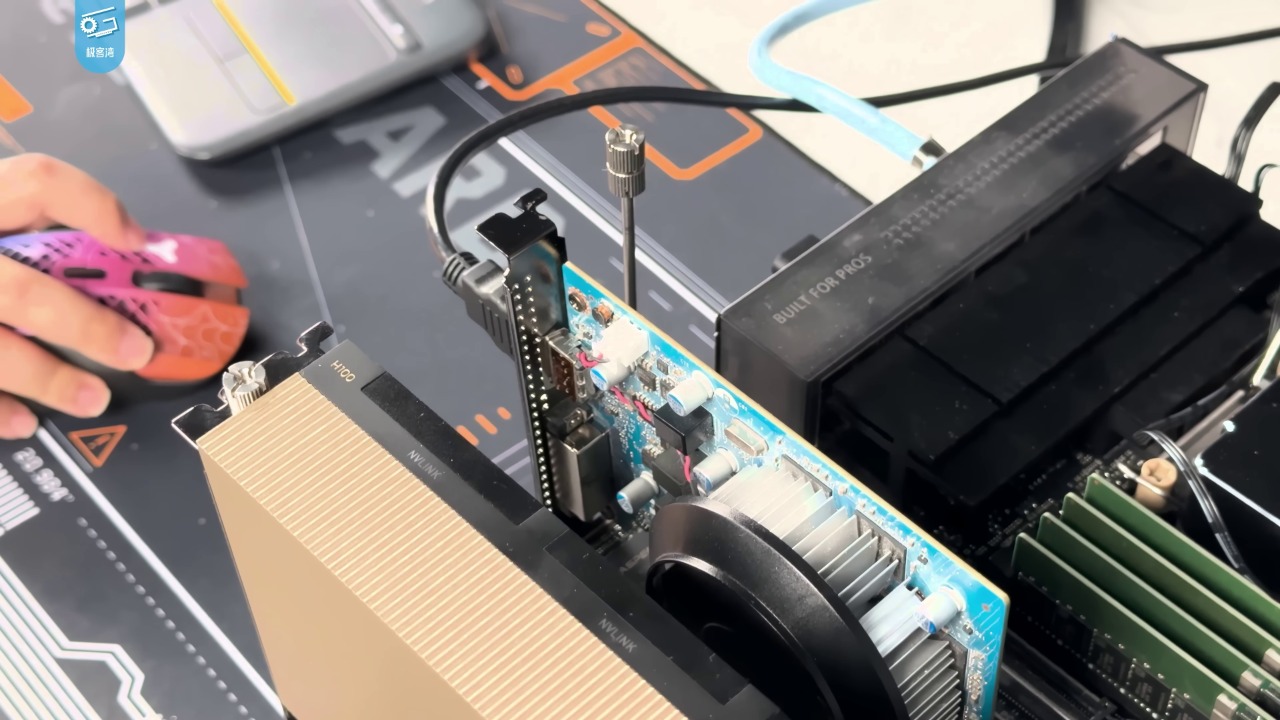

Intel Arc Pro B60: Core GPU Technology

The dual-GPU Intel Arc Pro B60 card, with 48 GB of memory (24 GB per GPU), is the core of the cluster. Its single-slot design allows dense packing in server chassis, and sixteen of these cards together supply the cluster’s 768 GB of VRAM (16 × 48 GB).

Intel’s role as supplier of the underlying GPU is notable in a market dominated by Nvidia. The Arc Pro B60’s combination of large memory and lower cost suggests that Intel-based hardware could be a viable alternative to Nvidia’s AI accelerators, although independent performance comparisons have yet to be published.

Liquid Cooling in the Chinese Vendor’s Design

Liquid cooling, which the Chinese vendor debuted on its Arc Pro B60 card, is central to the cluster’s thermal design. Practically all of the 10.8 kW the cluster draws ends up as heat, and in a dense layout of single-slot cards, liquid removes that heat far more effectively than air. Keeping temperatures in check helps the GPUs sustain their performance under load and can improve reliability across all 32 of them.
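
How much coolant does 10.8 kW of heat call for? The minimal sketch below uses the standard heat-transfer relation Q = ṁ · c_p · ΔT for water. Two inputs are our assumptions, not the vendor’s: that the entire 10.8 kW goes into the liquid loop (some heat leaves through air in practice, so this is a worst case) and that the coolant warms by 10 K between inlet and outlet. The `coolant_flow_lpm` helper is hypothetical.

```python
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate value for liquid water
WATER_DENSITY = 1.0           # kg/L, approximation


def coolant_flow_lpm(heat_watts: float, delta_t_kelvin: float) -> float:
    """Water flow in litres per minute needed to carry `heat_watts` away.

    Rearranges Q = m_dot * c_p * delta_T to m_dot = Q / (c_p * delta_T),
    giving mass flow in kg/s, then converts to L/min.
    """
    mass_flow_kg_s = heat_watts / (WATER_SPECIFIC_HEAT * delta_t_kelvin)
    return mass_flow_kg_s / WATER_DENSITY * 60.0


# Worst case (assumption): all 10.8 kW enters the loop, coolant rises by 10 K.
flow = coolant_flow_lpm(10_800, 10.0)
print(f"{flow:.1f} L/min")  # 15.5 L/min
```

Allowing a larger temperature rise lowers the required flow proportionally, which is one of the trade-offs a liquid-cooling design has to balance against component temperatures.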

Liquid cooling is already common in high-end AI servers for this reason. Servers that manage heat well can hold their performance through long, heavy workloads, which matters for AI jobs such as training runs that keep GPUs fully loaded for hours or days.

Scaling Capabilities with 32 Intel GPUs

Each system holds four dual-GPU Arc Pro B60 cards, for eight GPUs and 192 GB of VRAM per system, so the full 32-GPU, 768 GB cluster spans four such systems. Work can run in parallel across all 32 GPUs, but each GPU addresses only its own 24 GB, so the software has to split models and data across devices.
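
The topology arithmetic can be written out as a small Python sketch. The `ClusterLayout` class is a hypothetical helper; its defaults encode the article’s figures (two GPUs and 48 GB per card, four cards per system), and its ceiling division counts a partly filled system as a whole system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterLayout:
    """Hypothetical helper; defaults taken from the article's figures."""
    gpus_per_card: int = 2       # dual-GPU card
    vram_per_card_gb: int = 48
    cards_per_system: int = 4

    def systems_needed(self, total_gpus: int) -> int:
        gpus_per_system = self.gpus_per_card * self.cards_per_system
        # Ceiling division: a partly filled system still counts as one system.
        return -(-total_gpus // gpus_per_system)

    def vram_per_system_gb(self) -> int:
        return self.vram_per_card_gb * self.cards_per_system

    def vram_per_gpu_gb(self) -> float:
        # Each GPU on a dual-GPU card addresses only its own half of the memory.
        return self.vram_per_card_gb / self.gpus_per_card


layout = ClusterLayout()
print(layout.systems_needed(32), layout.vram_per_system_gb(), layout.vram_per_gpu_gb())
# 4 192 24.0
```

Four systems at 192 GB each account for the full 768 GB.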

Such a high-density setup brings its own challenges, notably interconnect: GPUs on different cards and in different systems must exchange data such as gradients and activations, and that traffic can become a bottleneck. If the cost advantage holds up, however, the setup could still be a compelling option for AI hardware deployment.
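
The article does not say how the GPUs are interconnected. To illustrate why the interconnect matters, the sketch below computes per-GPU traffic for a ring all-reduce, a standard collective for synchronizing gradients in data-parallel training. The choice of ring all-reduce and the hypothetical 7-billion-parameter workload are our assumptions for illustration only.

```python
def ring_allreduce_bytes_per_gpu(num_gpus: int, payload_bytes: float) -> float:
    """Bytes each GPU sends during a ring all-reduce of `payload_bytes`.

    A ring all-reduce is a reduce-scatter followed by an all-gather. Each
    phase takes (N - 1) steps, and each step moves 1/N of the payload, so
    every GPU sends 2 * (N - 1) / N * payload in total.
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


# Hypothetical workload: fp16 gradients (2 bytes each) of a 7B-parameter model.
grad_bytes = 7e9 * 2
sent = ring_allreduce_bytes_per_gpu(32, grad_bytes)
print(f"{sent / 1e9:.3f} GB sent per GPU per step")  # 27.125 GB sent per GPU per step
```

As N grows, the per-GPU volume approaches twice the payload, so adding GPUs does not reduce each GPU’s traffic. The bandwidth of the links between cards and between systems therefore bounds how quickly each training step can finish.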

Implications for AI Workload Performance

VRAM capacity determines how large a model the cluster can hold. With 768 GB in total, it can keep large models in memory, which is what makes it a cost-effective alternative to Nvidia’s high-priced accelerators for memory-hungry work. The 48 GB per card (24 GB per GPU) also sets the granularity: any model whose weights exceed 24 GB must be split across several GPUs, for training and inference alike.
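
One rough way to see what fits is to multiply the parameter count by the bytes per parameter. The Python sketch below does this for several precisions. The precision table, the hypothetical 70-billion-parameter model and the `weight_footprint` helper are illustrative assumptions. The 24 GB per-GPU figure follows from the article’s 48 GB per dual-GPU card. Only the weights are counted, so KV cache, activations and runtime overhead push real requirements higher.

```python
import math

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}
VRAM_PER_GPU_GB = 24   # 48 GB per dual-GPU card / 2 GPUs
TOTAL_VRAM_GB = 768


def weight_footprint(params_billion: float, precision: str) -> tuple[float, int]:
    """Return (weight size in GB, minimum GPUs needed just to hold the weights).

    Weights only: KV cache, activations and runtime overhead are ignored.
    """
    size_gb = params_billion * BYTES_PER_PARAM[precision]  # 1e9 params * B / 1e9
    return size_gb, math.ceil(size_gb / VRAM_PER_GPU_GB)


for precision in BYTES_PER_PARAM:
    size_gb, gpus = weight_footprint(70, precision)  # hypothetical 70B model
    fits = "fits" if size_gb <= TOTAL_VRAM_GB else "does not fit"
    print(f"70B @ {precision}: {size_gb:.0f} GB, >= {gpus} GPUs, {fits} in 768 GB")
# 70B @ fp16: 140 GB, >= 6 GPUs, fits in 768 GB
# 70B @ int8: 70 GB, >= 3 GPUs, fits in 768 GB
# 70B @ int4: 35 GB, >= 2 GPUs, fits in 768 GB
```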

Benchmarks or use cases showing where the 32 Intel GPUs come out ahead on cost per unit of performance have yet to be established. Until they are, the large VRAM pool and scalable design suggest, but do not prove, that the cluster could be competitive across a range of AI applications.

Future Prospects for Intel-Based Clusters

The Chinese vendor’s debut of the liquid-cooled Arc Pro B60 could encourage broader adoption of similar 10.8 kW-scale clusters. If vendors and users find the cost and capacity trade-offs worthwhile, more Intel-based clusters could appear in the AI hardware market.

Ecosystem support will also shape adoption, particularly software that can spread models across all 32 GPUs to make full use of the combined 768 GB of VRAM. As the market evolves, designs like the single-slot, dual-GPU Arc Pro B60 could become more common, giving users more affordable alternatives to Nvidia’s AI accelerators.
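
One simple way software can pool memory across many GPUs is pipeline-style partitioning, where consecutive layers are assigned to successive devices. The greedy splitter below is a hypothetical illustration, not the algorithm of any particular framework. The 80-layer model with 1.75 GB per layer is also invented for the example. A real deployment would leave headroom on each GPU for activations.

```python
def partition_layers(layer_sizes_gb: list[float], num_gpus: int,
                     gpu_capacity_gb: float) -> list[list[int]]:
    """Assign consecutive layers to GPUs without exceeding per-GPU capacity.

    Greedy pipeline-style split (hypothetical): fill one GPU, then move to the
    next. Raises ValueError if a layer or the whole model does not fit.
    """
    assignment: list[list[int]] = [[]]
    used = 0.0
    for idx, size in enumerate(layer_sizes_gb):
        if size > gpu_capacity_gb:
            raise ValueError(f"layer {idx} ({size} GB) exceeds a single GPU")
        if used + size > gpu_capacity_gb:
            assignment.append([])  # current GPU is full, start the next one
            used = 0.0
            if len(assignment) > num_gpus:
                raise ValueError("model does not fit on the available GPUs")
        assignment[-1].append(idx)
        used += size
    return assignment


# Hypothetical model: 80 equal layers of 1.75 GB each (140 GB of fp16 weights).
plan = partition_layers([1.75] * 80, num_gpus=32, gpu_capacity_gb=24.0)
print(len(plan), [len(stage) for stage in plan])  # 7 [13, 13, 13, 13, 13, 13, 2]
```

Here the 140 GB of weights occupy 7 of the 32 GPUs. Balancing layers evenly across stages, so that no GPU sits mostly idle, is a refinement a real scheduler would add.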