Artificial intelligence (AI) continues to expand rapidly, reaching into many sectors and changing how we carry out everyday tasks. One notable development is the emergence of AI-powered glasses that can translate sign language. For the deaf and hard of hearing community, this technology could be a game-changer, making it easier to communicate with people who do not sign and fostering greater inclusion.

Understanding AI-Powered Glasses for Sign Language Translation

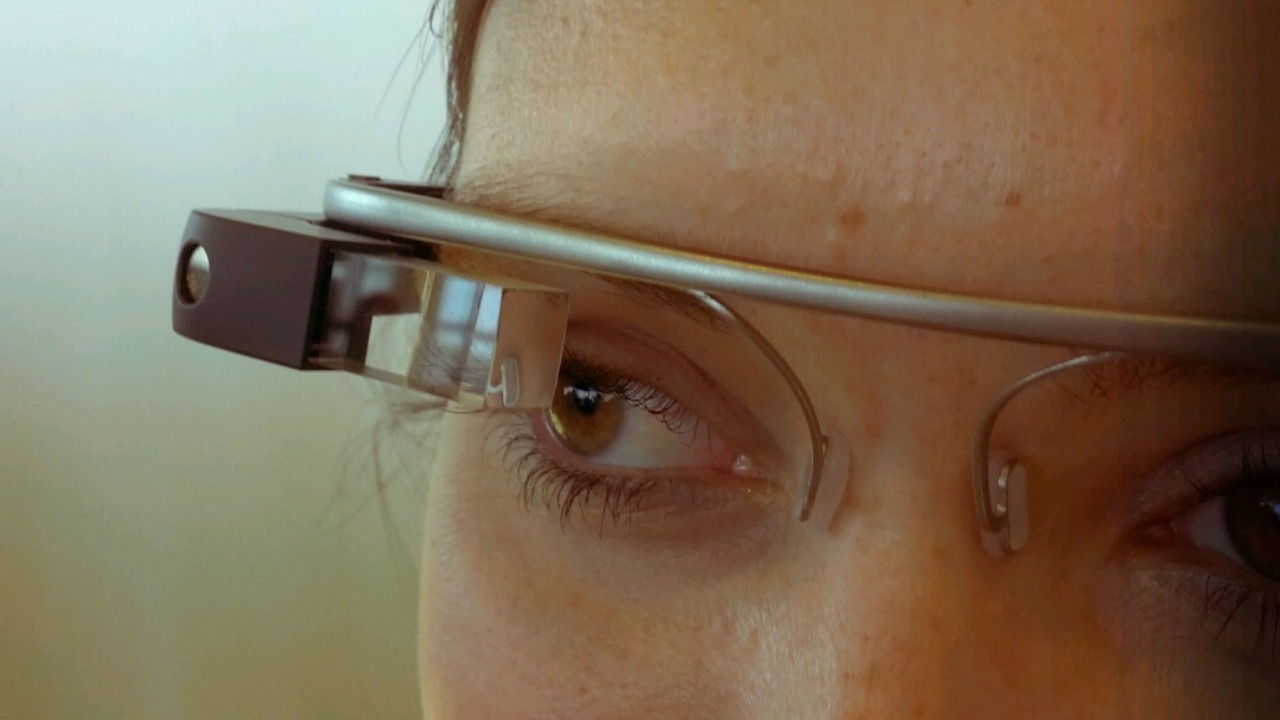

AI-powered glasses for sign language translation are an innovation that aims to bridge the communication gap between hearing people and the deaf community. Built-in cameras capture the hand movements and gestures of the person signing. AI algorithms then process these gestures and convert them into spoken or written language, so that people who do not understand sign language can follow what is being said.
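The capture, recognize, and output steps described above can be illustrated with a minimal Python sketch. It is not how any particular product works: it assumes each camera frame has already been reduced to a small feature vector (for example, hand-landmark coordinates produced by a hand-tracking model), and the names `TranslationPipeline` and `toy_recognizer`, the sign labels, and the threshold rule are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Tuple

# Assumption: each camera frame has already been reduced to a feature vector
# (e.g. hand-landmark coordinates). Here a frame is just a tuple of floats.
Frame = Tuple[float, ...]


@dataclass
class TranslationPipeline:
    # Hypothetical recognizer: maps one frame to a sign label,
    # or None when no sign is detected in that frame.
    recognize: Callable[[Frame], Optional[str]]

    def translate(self, frames: Iterable[Frame]) -> str:
        words: List[str] = []
        previous: Optional[str] = None
        for frame in frames:
            sign = self.recognize(frame)
            # One sign spans many consecutive frames, so a word is emitted
            # only when the label changes; frames with no sign are skipped.
            if sign is not None and sign != previous:
                words.append(sign)
            previous = sign
        # Written output; a text-to-speech engine could voice this string.
        return " ".join(words)


def toy_recognizer(frame: Frame) -> Optional[str]:
    # Purely illustrative stand-in for a trained recognition model.
    if frame[0] > 0.5:
        return "HELLO"
    if frame[0] < -0.5:
        return "THANK-YOU"
    return None


pipeline = TranslationPipeline(recognize=toy_recognizer)
frames = [(0.9,), (0.8,), (0.0,), (-0.7,), (-0.9,)]
print(pipeline.translate(frames))  # HELLO THANK-YOU
```

Because `previous` resets whenever a frame contains no sign, a sign held across several frames is emitted once, while the same sign made twice with a pause in between is emitted twice. A real device would replace `toy_recognizer` with a trained model.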

AI is central to how these glasses work. The recognition algorithms are trained on large datasets of sign language examples, which lets them learn the intricacies of sign language and recognize and translate a wide array of signs. Alongside these algorithms, the hardware includes cameras, microphones, and speakers; together, these components aim to provide a seamless translation experience.
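The idea of learning signs from labeled examples can be sketched with a nearest-centroid classifier. This is a deliberately simple stand-in, not the method used by any specific product; real systems typically rely on far more capable models, such as neural networks. The sketch assumes each example has already been turned into a numeric feature vector, and the function names, feature values, and labels are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Assumption: each training example is a (feature vector, sign label) pair,
# where the features might be hand-landmark coordinates.
Features = Tuple[float, ...]


def train_centroids(examples: Sequence[Tuple[Features, str]]) -> Dict[str, Features]:
    """'Training': average the feature vectors that share each sign label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: tuple(total / counts[label] for total in totals)
        for label, totals in sums.items()
    }


def classify(centroids: Dict[str, Features], features: Features) -> str:
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


training_data = [
    ((0.9, 0.1), "HELLO"),
    ((0.8, 0.2), "HELLO"),
    ((0.1, 0.9), "THANK-YOU"),
    ((0.2, 0.8), "THANK-YOU"),
]
centroids = train_centroids(training_data)
print(classify(centroids, (0.7, 0.3)))  # HELLO
```

More varied examples per sign give each centroid a more representative average, which is one intuition for why large training datasets matter. `math.dist` requires Python 3.8 or later.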

The Development and Evolution of AI-Powered Glasses

The development of AI-powered glasses has been a gradual process. Companies such as XRAI and Meta (formerly Facebook) have been at the forefront of this work, applying AI to change the way people communicate and interact.

Advances in AI and machine learning have enabled more accurate gesture recognition and language translation. Thanks to these continuous improvements, and to the dedication of the researchers and developers working in the field, the glasses have evolved from simple prototypes into devices capable of real-time translation.

Impacts on Communication and Social Inclusion

AI-powered glasses have significantly improved communication for the deaf and hard of hearing community. They give these individuals a way to communicate more effectively with people who do not understand sign language, which promotes social inclusion and fosters greater understanding and empathy. For example, a Wired article describes a deaf individual who was able to communicate with others more effectively using AI-powered glasses.

These glasses are also bridging communication gaps in various social and professional settings. They are being used in schools, workplaces, and public spaces, enabling deaf individuals to participate more actively and independently. This technology is transforming lives, allowing more individuals to express themselves freely and be understood by others.

Challenges and Solutions in AI-Powered Sign Language Translation

Despite significant strides in this field, challenges remain in developing and deploying AI-powered glasses. These include the complexity of sign languages, the need for high-quality cameras to capture gestures accurately, and the limited ability of AI algorithms to interpret nuanced expressions, such as the facial expressions that carry grammatical meaning in sign languages. Researchers are continually working to overcome these challenges and improve the technology.

Current approaches rely on high-quality cameras and advanced AI algorithms that can learn and adapt to different sign language dialects and signing styles. Ongoing research, such as that highlighted in a ProQuest dissertation, focuses on improving gesture recognition accuracy and reducing translation errors. The future of the technology depends on continued innovation in these areas.

The Future of AI-Powered Glasses and Sign Language Translation

The future of AI-powered glasses for sign language translation looks promising. As AI and machine learning technologies continue to advance, we can expect these glasses to become even more accurate and efficient. They may also incorporate additional features, such as real-time voice translation, making them more versatile and useful.

These advancements could have a considerable impact on society. By improving communication and promoting inclusivity, the glasses could help create a more empathetic and understanding world. The technology could also find uses well beyond everyday conversation: in healthcare for communicating with patients, in education for inclusive learning, or in entertainment for immersive experiences. According to a Market.us report, the market for real-time sign language avatars is poised for significant growth, a further sign of the momentum behind AI-powered sign language translation technology.